Tiny Machine Learning (TinyML) focuses on deploying machine learning models on low-power, resource-constrained devices such as microcontrollers, enabling real-time data processing at the edge without relying on cloud connectivity. Embedded AI is the broader practice of integrating artificial intelligence directly into hardware systems, tuned for specific applications such as computer vision, speech recognition, or predictive maintenance.

Why it is important

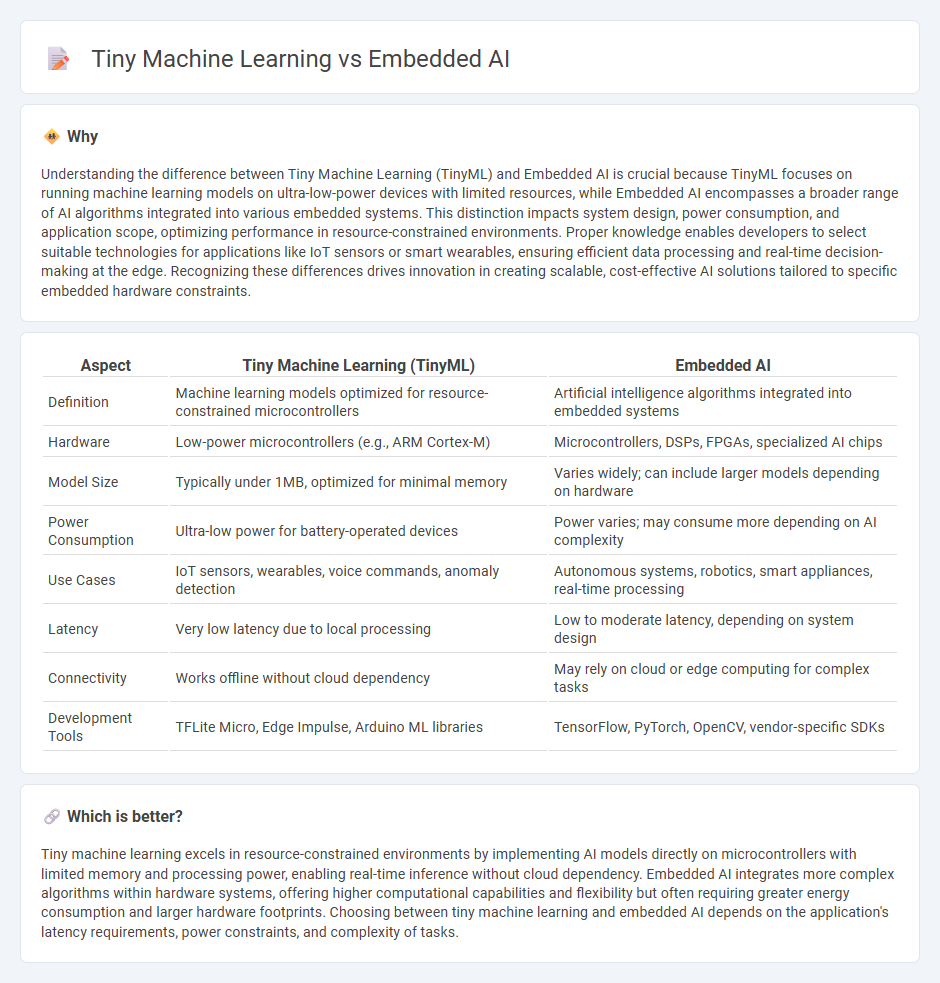

The distinction between Tiny Machine Learning (TinyML) and Embedded AI matters because the two target different hardware budgets. TinyML runs machine learning models on ultra-low-power devices with limited memory and compute, while Embedded AI covers a broader range of AI algorithms integrated into many kinds of embedded systems. The choice between them shapes system design, power consumption, and application scope. Knowing the difference helps developers select suitable technology for applications such as IoT sensors or smart wearables, so that data processing and real-time decision-making at the edge stay within the device's limits. It also supports scalable, cost-effective AI solutions tailored to specific embedded hardware constraints.

Comparison Table

| Aspect | Tiny Machine Learning (TinyML) | Embedded AI |
|---|---|---|
| Definition | Machine learning models optimized for resource-constrained microcontrollers | Artificial intelligence algorithms integrated into embedded systems |
| Hardware | Low-power microcontrollers (e.g., ARM Cortex-M) | Microcontrollers, DSPs, FPGAs, specialized AI chips |
| Model Size | Typically under 1 MB, optimized for minimal memory | Varies widely; can include larger models depending on hardware |
| Power Consumption | Ultra-low power for battery-operated devices | Power varies; may consume more depending on AI complexity |
| Use Cases | IoT sensors, wearables, voice commands, anomaly detection | Autonomous systems, robotics, smart appliances, real-time processing |
| Latency | Very low latency due to local processing | Low to moderate latency, depending on system design |
| Connectivity | Works offline without cloud dependency | May rely on cloud or edge computing for complex tasks |
| Development Tools | TFLite Micro, Edge Impulse, Arduino ML libraries | TensorFlow, PyTorch, OpenCV, vendor-specific SDKs |
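The "Model Size" row can be made concrete with a rough back-of-the-envelope check. The sketch below is only an approximation under stated assumptions: it counts weight storage alone and ignores graph metadata, runtime code, and working RAM. The function names, the parameter count, and the flash sizes are hypothetical values chosen for illustration.

```python
def model_flash_bytes(num_params: int, bits_per_weight: int = 8) -> int:
    """Approximate bytes needed to store the weights only.

    Ignores graph metadata, runtime code, and activation buffers,
    so the real footprint will be somewhat larger.
    """
    return (num_params * bits_per_weight + 7) // 8  # round up to whole bytes


def fits_in_flash(num_params: int, flash_bytes: int,
                  bits_per_weight: int = 8, reserved_bytes: int = 0) -> bool:
    """Check whether the weights fit next to `reserved_bytes` of firmware."""
    needed = model_flash_bytes(num_params, bits_per_weight) + reserved_bytes
    return needed <= flash_bytes


# Hypothetical example: a 250,000-parameter model on a 1 MiB flash part
# that already holds 512 KiB of application firmware.
PARAMS = 250_000
FLASH = 1024 * 1024
FIRMWARE = 512 * 1024

for bits in (32, 8):
    size = model_flash_bytes(PARAMS, bits)
    ok = fits_in_flash(PARAMS, FLASH, bits, reserved_bytes=FIRMWARE)
    print(f"{bits}-bit weights: {size} bytes, fits: {ok}")
```

With these assumed numbers, the 32-bit version needs 1,000,000 bytes for weights and does not fit alongside the firmware, while the 8-bit version needs 250,000 bytes and does.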

Which is better?

Tiny machine learning excels in resource-constrained environments by implementing AI models directly on microcontrollers with limited memory and processing power, enabling real-time inference without cloud dependency. Embedded AI integrates more complex algorithms within hardware systems, offering higher computational capabilities and flexibility but often requiring greater energy consumption and larger hardware footprints. Choosing between tiny machine learning and embedded AI depends on the application's latency requirements, power constraints, and complexity of tasks.
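To see how latency and power constraints interact, it can help to estimate battery life for a duty-cycled device that wakes up, runs one inference, and sleeps again. The following is a minimal sketch, not a measurement method: the function names and all current, timing, and capacity figures are hypothetical, and it ignores effects such as battery self-discharge, voltage regulator losses, and radio activity.

```python
def average_current_ma(active_ma: float, sleep_ma: float,
                       inference_ms: float, period_ms: float) -> float:
    """Time-weighted average current for one wake/infer/sleep cycle."""
    if period_ms <= 0 or not 0 <= inference_ms <= period_ms:
        raise ValueError("inference_ms must lie within [0, period_ms]")
    duty = inference_ms / period_ms  # fraction of time spent active
    return active_ma * duty + sleep_ma * (1.0 - duty)


def battery_life_hours(capacity_mah: float, avg_ma: float) -> float:
    """Idealized battery life: capacity divided by average draw."""
    if avg_ma <= 0:
        raise ValueError("avg_ma must be positive")
    return capacity_mah / avg_ma


# Hypothetical figures: 10 mA while inferring, 0.01 mA asleep,
# one 50 ms inference per second, 220 mAh coin cell.
avg = average_current_ma(active_ma=10.0, sleep_ma=0.01,
                         inference_ms=50.0, period_ms=1000.0)
print(f"average current: {avg:.4f} mA")
print(f"estimated life: {battery_life_hours(220.0, avg):.0f} hours")
```

Under these assumptions, shortening the inference time or lengthening the period lowers the average current almost proportionally, which is why compact models matter so much on battery-operated hardware.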

Connection

TinyML can be viewed as the most resource-constrained end of embedded AI: it supplies the compact models and runtimes that let machine learning run on devices with limited computational resources. Embedded AI systems integrate these models into hardware such as microcontrollers and sensor nodes to process data in real time without relying on cloud connectivity. Together they strengthen edge computing by reducing latency and power consumption while keeping intelligent decision-making at the device level.

Key Terms

Edge Computing

Embedded AI integrates artificial intelligence models directly into edge devices, enabling real-time data processing with minimal latency and enhanced privacy. Tiny machine learning (TinyML) specializes in deploying ultra-efficient neural networks on microcontrollers with extremely limited resources, optimizing power consumption and operational costs at the edge.
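One way to picture processing at the edge is a sensor node that evaluates every reading locally and forwards only the unusual ones. The sketch below uses a simple running z-score as a stand-in for a learned model; it is an illustrative assumption, not a TinyML algorithm from any particular library. The class and function names, the threshold, and the warm-up length are all hypothetical.

```python
import math


class RunningStats:
    """Welford's online mean/variance: constant memory, one pass."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def filter_anomalies(samples, threshold: float = 3.0, warmup: int = 10):
    """Return (index, value) pairs that deviate strongly from the history.

    Only these pairs would need to leave the device; normal readings
    update the local statistics and are never transmitted.
    """
    stats = RunningStats()
    flagged = []
    for i, x in enumerate(samples):
        is_anomaly = (stats.n >= warmup and stats.std > 0
                      and abs(x - stats.mean) > threshold * stats.std)
        if is_anomaly:
            flagged.append((i, x))
        else:
            stats.update(x)  # design choice: anomalies do not shift the baseline
    return flagged


# Hypothetical temperature trace with a single spike.
readings = [20.0, 20.1, 19.9, 20.2, 19.8] * 4 + [35.0, 20.0, 20.1]
print(filter_anomalies(readings))
```

Because the statistics are updated in constant memory, a detector of this shape needs only a few bytes of state, which is the kind of budget a microcontroller can afford.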

Model Compression

Embedded AI integrates advanced machine learning models directly into edge devices, necessitating efficient model compression techniques to reduce memory footprint and computational requirements. Tiny Machine Learning (TinyML) specifically emphasizes ultra-compact model architectures and aggressive compression methods such as quantization and pruning to enable real-time inference on microcontrollers with limited resources.
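The two compression methods named above can be sketched in a few lines. This is a simplified illustration on a flat list of weights, assuming symmetric per-tensor int8 quantization and unstructured magnitude pruning; real toolchains offer more elaborate schemes (per-channel scales, zero points, structured sparsity). The function names and weight values are hypothetical.

```python
def quantize_symmetric_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from quantized integers."""
    return [v * scale for v in q]


def prune_by_magnitude(weights, sparsity: float):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])  # indices of the k smallest-magnitude weights
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# Hypothetical weights for illustration.
weights = [0.5, -1.27, 0.01, 0.9, -0.02, 0.3]

q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("worst reconstruction error:", worst)

print("pruned:", prune_by_magnitude(weights, sparsity=0.5))
```

Quantization shrinks each weight from 32 bits to 8 at the cost of a bounded rounding error (at most half the scale per weight here), while pruning creates zeros that a sparse storage format or a compressor can then exploit.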

Microcontroller Unit (MCU)

Embedded AI integrates artificial intelligence directly onto Microcontroller Units (MCUs), enabling advanced data processing at the hardware level with minimal latency. Tiny Machine Learning (TinyML) specifically targets ultra-low-power MCUs, optimizing machine learning models to run efficiently within restricted memory and computational constraints.
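Many low-end MCUs have no hardware floating-point unit, so inference kernels are usually written around integer arithmetic. The sketch below shows the general shape of an int8 fully connected layer with a wider integer accumulator. It is an illustration under simplifying assumptions, not the implementation used by any specific runtime: zero points are assumed to be 0 (symmetric quantization), the rescaling step uses a float multiplier for readability where production kernels typically use a fixed-point multiply and shift, and the function name and all values are hypothetical.

```python
def dense_int8(x_q, w_q, bias_q, x_scale, w_scale, out_scale):
    """Fully connected layer on quantized values.

    x_q     : list of int8 inputs
    w_q     : list of rows, one row of int8 weights per output
    bias_q  : integer biases, already expressed at scale x_scale * w_scale
    Returns int8 outputs expressed at scale out_scale.
    """
    # Converts accumulator units (x_scale * w_scale) into output units.
    multiplier = (x_scale * w_scale) / out_scale
    outputs = []
    for row, bias in zip(w_q, bias_q):
        acc = bias  # would be a 32-bit accumulator on an MCU
        for xi, wi in zip(x_q, row):
            acc += xi * wi  # integer multiply-accumulate
        y = round(acc * multiplier)  # requantize to the output scale
        outputs.append(max(-128, min(127, y)))  # saturate to the int8 range
    return outputs


# Hypothetical values; the scales are powers of two so the multiplier is exactly 1.
x_q = [10, -20, 30]
w_q = [[1, 2, 3],
       [-4, 5, -6]]
bias_q = [5, -5]

print(dense_int8(x_q, w_q, bias_q, x_scale=0.5, w_scale=0.25, out_scale=0.125))
```

The second output in this example saturates at -128, which illustrates why the output scale has to be chosen with the expected activation range in mind.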

