Diffusion models use stochastic processes to generate high-quality data by iteratively refining noise into structured outputs, and they excel in applications such as image synthesis. Restricted Boltzmann Machines (RBMs) are energy-based models that discover latent features through unsupervised learning and have been widely used in collaborative filtering and dimensionality reduction. Explore the detailed comparison below to understand their unique strengths and applications in modern machine learning.

Why it is important

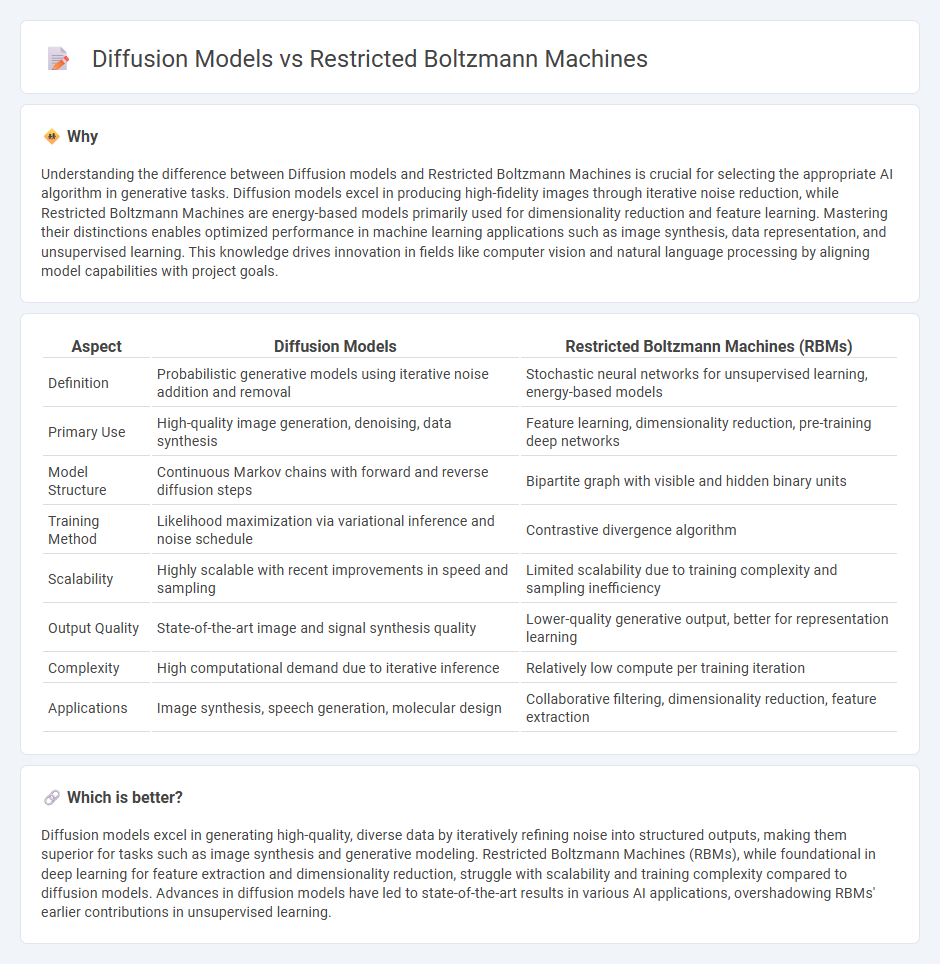

Understanding the differences between diffusion models and Restricted Boltzmann Machines is key to choosing the right algorithm for a generative task. Diffusion models produce high-fidelity images through iterative noise removal, while RBMs are energy-based models used mainly for dimensionality reduction and feature learning. Knowing these distinctions makes it easier to match each model to applications such as image synthesis, data representation, and unsupervised learning, and to align model capabilities with project goals in fields like computer vision and natural language processing.

Comparison Table

| Aspect | Diffusion Models | Restricted Boltzmann Machines (RBMs) |
|---|---|---|
| Definition | Probabilistic generative models that learn to reverse a gradual noise-adding process | Stochastic, energy-based neural networks for unsupervised learning |
| Primary Use | High-quality image generation, denoising, data synthesis | Feature learning, dimensionality reduction, pre-training deep networks |
| Model Structure | Markov chain over continuous-valued data: a fixed forward (noising) process and a learned reverse (denoising) process | Bipartite graph of visible and hidden units (typically binary) with no connections within a layer |
| Training Method | Optimizing a variational bound on the log-likelihood, in practice usually simplified to a denoising (noise-prediction) loss under a fixed noise schedule | Contrastive divergence (CD-k), which approximates the log-likelihood gradient with short Gibbs-sampling chains |
| Scalability | Scale well to large datasets and high-resolution data; faster samplers that need fewer steps are an active area of work | Limited: the partition function is intractable and MCMC sampling mixes slowly, which makes large models hard to train |
| Output Quality | State-of-the-art image and signal synthesis quality | Lower-quality generative output; better suited to representation learning |
| Complexity | High sampling cost, because generation requires many sequential network evaluations | Relatively low compute per training iteration |
| Applications | Image synthesis, speech generation, molecular design | Collaborative filtering, dimensionality reduction, feature extraction |
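
To make the contrastive-divergence entry concrete, here is a minimal NumPy sketch of a CD-1 update for a binary RBM. It assumes full-batch updates, a single Gibbs step, and a made-up toy dataset of two repeating 6-bit patterns; `cd1_update` and `reconstruction_error` are illustrative names, not functions from any library. Reconstruction error is used only as a rough progress signal, since it is not the quantity CD optimizes.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def cd1_update(W, b, c, v0, lr, rng):
    """One CD-1 step for a binary RBM (hypothetical helper).

    W: (n_visible, n_hidden) weights, b: visible biases, c: hidden biases,
    v0: (batch, n_visible) binary data. Returns updated (W, b, c).
    """
    # Positive phase: hidden probabilities and samples driven by the data.
    ph0 = sigmoid(v0 @ W + c)
    h0 = (rng.random(ph0.shape) < ph0).astype(float)
    # Negative phase: one Gibbs step h0 -> v1 -> p(h | v1).
    pv1 = sigmoid(h0 @ W.T + b)
    v1 = (rng.random(pv1.shape) < pv1).astype(float)
    ph1 = sigmoid(v1 @ W + c)
    n = v0.shape[0]
    # Approximate log-likelihood gradient: data statistics minus model statistics.
    W = W + lr * (v0.T @ ph0 - v1.T @ ph1) / n
    b = b + lr * (v0 - v1).mean(axis=0)
    c = c + lr * (ph0 - ph1).mean(axis=0)
    return W, b, c


def reconstruction_error(W, b, c, v):
    # Mean squared error of a deterministic v -> p(h|v) -> p(v|h) pass.
    ph = sigmoid(v @ W + c)
    pv = sigmoid(ph @ W.T + b)
    return float(np.mean((v - pv) ** 2))


rng = np.random.default_rng(0)
# Toy data (an assumption for illustration): two repeating binary patterns.
patterns = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
data = patterns[rng.integers(0, 2, size=200)]
W = 0.01 * rng.standard_normal((6, 2))
b = np.zeros(6)
c = np.zeros(2)
before = reconstruction_error(W, b, c, data)
for epoch in range(500):
    W, b, c = cd1_update(W, b, c, data, lr=0.1, rng=rng)
after = reconstruction_error(W, b, c, data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
```

Using hidden-unit probabilities rather than binary samples in the gradient statistics, as above, is a common variance-reduction choice; practical implementations typically add mini-batches, momentum, weight decay, or persistent chains (PCD).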

Which is better?

Diffusion models excel at generating high-quality, diverse data by iteratively refining noise into structured outputs, which makes them the stronger choice for tasks such as image synthesis and generative modeling. RBMs were foundational in deep learning for feature extraction and dimensionality reduction, but they are harder to train and to scale than diffusion models. Diffusion models now deliver state-of-the-art results in many generative applications, overshadowing the earlier contributions of RBMs to unsupervised learning.

Connection

Diffusion models and Restricted Boltzmann Machines (RBMs) are connected through generative modeling: both aim to capture complex data distributions. Diffusion models generate data by gradually reversing a noise process and focus on iterative refinement of samples, whereas RBMs learn a probability distribution with a stochastic network of visible and hidden layers and are trained with energy-based learning via contrastive divergence. Insights from energy-based models such as RBMs could potentially inform the training and interpretation of diffusion models for high-dimensional data synthesis.
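One way to make the link precise, sketched here for continuous data in standard DDPM notation, is through the score, the gradient of the log-density. The symbols $E_\theta$, $Z_\theta$, $\bar\alpha_t$, and $\boldsymbol\epsilon_\theta$ are introduced only for this derivation. For an energy-based model the intractable normalizer drops out of the score:

$$
p_\theta(\mathbf{x}) = \frac{\exp\big(-E_\theta(\mathbf{x})\big)}{Z_\theta}, \qquad Z_\theta = \int \exp\big(-E_\theta(\mathbf{x})\big)\,d\mathbf{x}
$$

$$
\nabla_{\mathbf{x}} \log p_\theta(\mathbf{x}) = -\nabla_{\mathbf{x}} E_\theta(\mathbf{x}) - \underbrace{\nabla_{\mathbf{x}} \log Z_\theta}_{=\,0} = -\nabla_{\mathbf{x}} E_\theta(\mathbf{x})
$$

In a diffusion model, a noised sample is $\mathbf{x}_t = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1-\bar\alpha_t}\,\boldsymbol\epsilon$ with $\boldsymbol\epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, and the conditional score is

$$
\nabla_{\mathbf{x}_t} \log q(\mathbf{x}_t \mid \mathbf{x}_0) = -\frac{\mathbf{x}_t - \sqrt{\bar\alpha_t}\,\mathbf{x}_0}{1-\bar\alpha_t} = -\frac{\boldsymbol\epsilon}{\sqrt{1-\bar\alpha_t}}
$$

A network $\boldsymbol\epsilon_\theta(\mathbf{x}_t, t)$ trained to predict this noise therefore gives a score estimate $-\boldsymbol\epsilon_\theta(\mathbf{x}_t, t)/\sqrt{1-\bar\alpha_t}$ for the noised data, which plays the role of $-\nabla_{\mathbf{x}_t} E$ at noise level $t$ without ever computing $Z_\theta$. For an RBM with binary visible units this gradient is not defined, and the analogous object is the free energy $F(\mathbf{v})$ with $p(\mathbf{v}) \propto \exp(-F(\mathbf{v}))$.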

Key Terms

Energy-Based Models

Restricted Boltzmann Machines (RBMs) are a classic type of Energy-Based Model (EBM). They assign an energy to every joint configuration of visible and hidden units in a bipartite graph, with lower energy meaning higher probability, and training lowers the energy of observed data relative to configurations the model itself generates. Diffusion models are not usually defined as EBMs, but they are closely related: they learn data distributions by reversing a diffusion process through gradual denoising steps, and in the score-based view each step follows an estimate of the gradient of the log-density of the noised data, which for an EBM is the negative gradient of its energy. Explore in-depth comparisons of architecture, convergence behavior, and application domains to understand how each family uses energy landscapes for generative tasks.
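The RBM energy function can be written out for a tiny binary model. This NumPy sketch assumes the standard parameterization `E(v, h) = -b·v - c·h - vᵀWh`, with visible biases `b`, hidden biases `c`, and weights `W` filled with random values purely for illustration. It checks that the closed-form free energy `F(v) = -log Σ_h exp(-E(v, h))` matches a brute-force sum over all hidden configurations.

```python
import itertools

import numpy as np


def energy(v, h, W, b, c):
    # E(v, h) = -b.v - c.h - v^T W h for binary visible v and hidden h.
    return -(b @ v) - (c @ h) - v @ W @ h


def free_energy(v, W, b, c):
    # F(v) = -log sum_h exp(-E(v, h)); for binary h the sum factorises
    # per hidden unit into log(1 + exp(c_j + (v^T W)_j)).
    return -(b @ v) - np.sum(np.logaddexp(0.0, c + v @ W))


rng = np.random.default_rng(1)
n_visible, n_hidden = 4, 3
W = rng.standard_normal((n_visible, n_hidden))
b = rng.standard_normal(n_visible)
c = rng.standard_normal(n_hidden)

v = np.array([1.0, 0.0, 1.0, 1.0])
# Brute-force marginalisation over all 2**n_hidden hidden configurations.
hiddens = [np.array(h, dtype=float) for h in itertools.product([0, 1], repeat=n_hidden)]
brute = -np.log(sum(np.exp(-energy(v, h, W, b, c)) for h in hiddens))
closed = free_energy(v, W, b, c)
print(brute, closed)
```

Because p(v) is proportional to exp(-F(v)), the free energy lets you compare the unnormalized probabilities of two visible vectors without computing the intractable partition function. That is also why the brute-force sum above is feasible only for toy sizes.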

Latent Space

Restricted Boltzmann Machines (RBMs) use a probabilistic latent space of hidden units, typically binary, to model complex data distributions through energy-based learning, enabling unsupervised feature extraction and dimensionality reduction. Diffusion models, on the other hand, use continuous latent variables. In a standard diffusion model each latent is a noisy version of the data with the same dimensionality, and generation gradually transforms noise into structured data by learning to reverse the diffusion process, an approach that trains stably and yields high-quality samples. Explore the differences in latent space representations and applications of RBMs and diffusion models to understand their impact on modern AI generation techniques.
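To illustrate the continuous latents of a diffusion model, the sketch below samples the forward (noising) process in closed form, `q(x_t | x_0) = N(sqrt(alpha_bar_t) x_0, (1 - alpha_bar_t) I)`. It assumes the linear beta schedule from the original DDPM paper (T = 1000, betas from 1e-4 to 0.02) and uses a random 8x8 array as a stand-in for an image. `q_sample` is an illustrative name, not a library function.

```python
import numpy as np

# Assumed linear beta schedule (DDPM defaults); other schedules exist.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bars = np.cumprod(1.0 - betas)


def q_sample(x0, t, rng):
    """Draw x_t ~ q(x_t | x_0) in one shot for a 0-based step index t.

    Returns the noised latent and the Gaussian noise used to make it."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return x_t, eps


rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for an 8x8 image scaled to [-1, 1]
for t in (0, 250, 999):
    x_t, _ = q_sample(x0, t, rng)
    # The latent keeps the data's shape; the signal weight sqrt(alpha_bar_t) shrinks toward 0.
    print(t, x_t.shape, round(float(np.sqrt(alpha_bars[t])), 4))
```

The closed form avoids simulating every intermediate step, which is what makes training on randomly chosen noise levels cheap. Note that the latent is the same size as the data, in contrast to an RBM's hidden layer, whose size is a free design choice.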

Generative Process

Restricted Boltzmann Machines (RBMs) generate data with a two-layer stochastic network: visible and hidden units are sampled from each other in alternation (Gibbs sampling), so that the samples gradually follow the model's joint probability distribution. Diffusion models create data by reversing a noise-addition process, starting from pure noise and applying learned denoising steps until the samples match the data distribution. Explore the generative mechanisms and applications of RBMs and diffusion models to understand their strengths and differences.
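The diffusion side of this generative process can be sketched as DDPM-style ancestral sampling. No trained network is available here, so the sketch assumes a hypothetical data distribution concentrated at a single point `TARGET`. For that distribution the ideal noise predictor (`oracle_eps`, also hypothetical) has a closed form; a real model would substitute a trained network. The sketch also assumes the linear DDPM schedule and the variance choice sigma_t^2 = beta_t.

```python
import numpy as np

# Assumed linear schedule; index t = 0..T-1 corresponds to DDPM step t + 1.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

# Hypothetical data distribution: a point mass at TARGET.
TARGET = np.array([0.5, -0.25, 1.0])


def oracle_eps(x_t, t):
    # The exact noise that maps TARGET to x_t under q(x_t | x_0) at step t;
    # stands in for a trained noise-prediction network.
    return (x_t - np.sqrt(alpha_bars[t]) * TARGET) / np.sqrt(1.0 - alpha_bars[t])


def ddpm_sample(eps_model, shape, rng):
    x = rng.standard_normal(shape)  # start from pure Gaussian noise x_T
    for t in reversed(range(T)):
        eps = eps_model(x, t)
        # Mean of p(x_{t-1} | x_t) in the noise-prediction parameterisation.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise with variance sigma_t^2 = beta_t.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean  # no noise is added at the final step
    return x


rng = np.random.default_rng(0)
sample = ddpm_sample(oracle_eps, TARGET.shape, rng)
print(sample)
```

With this oracle predictor the sampler lands on `TARGET` up to floating-point error, which is a useful sanity check of the update rule. With a learned predictor, the output is instead a random draw that approximates the data distribution.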

dowidth.com
