Neural Radiance Fields (NeRFs) offer a cutting-edge approach to 3D scene reconstruction. A neural network learns a volumetric model of a scene's density and view-dependent color from a set of 2D images with known camera poses, then renders high-fidelity images from new viewpoints. Depth sensing techniques, such as LiDAR and structured light, capture geometric information directly by measuring the distance between a sensor and objects, enabling real-time spatial mapping. Explore how the two approaches differ and where each fits in immersive technologies and computer vision.

Why it is important

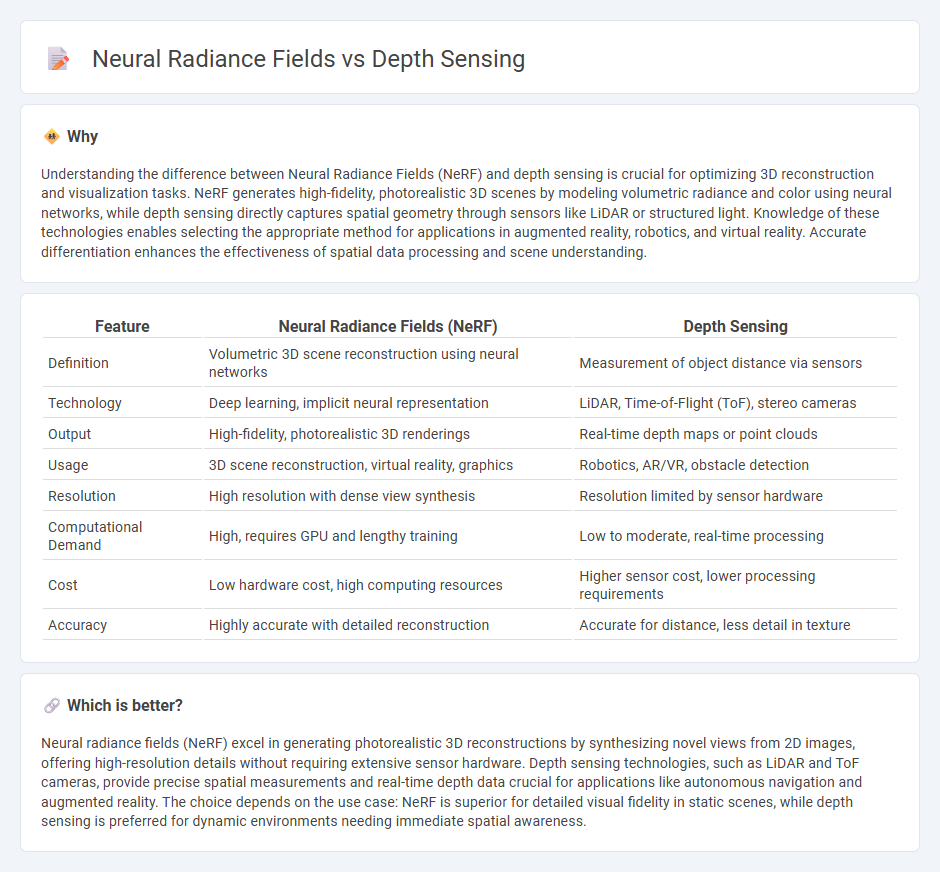

Understanding the difference between Neural Radiance Fields (NeRF) and depth sensing is crucial for choosing the right tool for 3D reconstruction and visualization. NeRF generates high-fidelity, photorealistic 3D scenes by using a neural network to model volume density and view-dependent color, while depth sensing captures spatial geometry directly through sensors such as LiDAR or structured light. Knowing these differences helps in selecting the appropriate method for augmented reality, robotics, and virtual reality: a robot avoiding obstacles needs fast, metric distance measurements, whereas a virtual tour benefits most from photorealistic novel views.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Depth Sensing |
|---|---|---|
| Definition | Volumetric 3D scene representation learned by a neural network from posed 2D images | Direct measurement of sensor-to-object distance |
| Technology | Deep learning, implicit neural representation, volume rendering | LiDAR, time-of-flight (ToF), structured light, stereo cameras |
| Output | High-fidelity, photorealistic renderings from novel viewpoints (depth can also be rendered) | Real-time depth maps or point clouds |
| Usage | 3D scene reconstruction, virtual reality, graphics | Robotics, AR/VR, obstacle detection |
| Resolution | High resolution with dense view synthesis, bounded by input image quality | Limited by sensor hardware |
| Computational Demand | High: per-scene training on a GPU (hours to days for the original NeRF; faster variants exist) | Low to moderate; real-time processing |
| Cost | Low hardware cost (ordinary cameras), high computing cost | Higher sensor cost, lower processing requirements |
| Accuracy | Highly detailed appearance; geometry is implicit and less reliable with few views or in textureless regions | Accurate metric distance, little texture or appearance detail |

Which is better?

Neural radiance fields (NeRF) excel in generating photorealistic 3D reconstructions by synthesizing novel views from 2D images, offering high-resolution details without requiring extensive sensor hardware. Depth sensing technologies, such as LiDAR and ToF cameras, provide precise spatial measurements and real-time depth data crucial for applications like autonomous navigation and augmented reality. The choice depends on the use case: NeRF is superior for detailed visual fidelity in static scenes, while depth sensing is preferred for dynamic environments needing immediate spatial awareness.
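The ToF cameras mentioned above rely on a simple relation: distance equals the speed of light times the round-trip travel time of the emitted light, divided by two. The sketch below assumes a direct (pulsed) measurement; continuous-wave ToF cameras instead recover the delay from the phase shift of modulated light, which this sketch does not model. The function name and the example timings are illustrative, not taken from any sensor SDK.

```python
# Speed of light in vacuum in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Return the one-way distance for a measured round-trip time.

    Hypothetical helper for illustration. The emitted light travels to the
    object and back, so the distance is half of (speed of light * time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A 20 ns round trip corresponds to a target roughly 3 m away.
    print(f"20 ns round trip -> {tof_distance_m(20e-9):.3f} m")
    # A timing error of just 1 ns shifts the estimate by about 15 cm.
    print(f"1 ns timing error -> {tof_distance_m(1e-9) * 100:.1f} cm")
```

The second line illustrates why ToF hardware needs timing precision well below a nanosecond to deliver centimetre-level accuracy.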

Connection

Neural radiance fields and depth sensing are complementary. The original NeRF is trained only on posed RGB images, but depth-supervised variants add measured depth, from a sensor or from structure-from-motion, as an extra training signal alongside color. Because a NeRF can render an expected depth along each camera ray, that rendered depth can be compared directly with sensor readings, which helps the model recover correct geometry (especially when only a few input views are available) and improves rendering quality. Integrating depth sensing with NeRF in this way advances applications in virtual reality, augmented reality, and 3D modeling through more precise and detailed environment mapping.
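A minimal sketch of how sensor depth can enter NeRF training, assuming a per-ray loss that adds a weighted depth error to the usual photometric error. The function name `depth_supervised_loss`, the `depth_weight` value, and the use of `None` to mark pixels where the sensor returned nothing are hypothetical choices for illustration, not the loss of any particular published method.

```python
from typing import Optional, Sequence


def depth_supervised_loss(
    pred_rgb: Sequence[Sequence[float]],
    true_rgb: Sequence[Sequence[float]],
    pred_depth: Sequence[float],
    sensor_depth: Sequence[Optional[float]],
    depth_weight: float = 0.1,
) -> float:
    """Photometric MSE plus a weighted depth MSE for a batch of rays.

    pred_rgb / pred_depth: colour and expected depth rendered by the NeRF per ray
    true_rgb:              pixel colours from the training images
    sensor_depth:          measured depth per ray, or None where the sensor had no return
    depth_weight:          hypothetical balance between the two terms
    """
    n = len(pred_rgb)
    if n == 0 or not (n == len(true_rgb) == len(pred_depth) == len(sensor_depth)):
        raise ValueError("all inputs must be non-empty and have one entry per ray")

    # Mean squared colour error over every channel of every ray.
    sq_errors = [(p - t) ** 2 for pr, tr in zip(pred_rgb, true_rgb) for p, t in zip(pr, tr)]
    color_loss = sum(sq_errors) / len(sq_errors)

    # Mean squared depth error, only on rays where the sensor returned a value.
    valid = [(d, s) for d, s in zip(pred_depth, sensor_depth) if s is not None]
    depth_loss = sum((d - s) ** 2 for d, s in valid) / len(valid) if valid else 0.0

    return color_loss + depth_weight * depth_loss


if __name__ == "__main__":
    loss = depth_supervised_loss(
        pred_rgb=[(0.5, 0.5, 0.5), (1.0, 0.0, 0.0)],
        true_rgb=[(0.5, 0.5, 0.5), (0.8, 0.0, 0.0)],
        pred_depth=[2.0, 3.0],
        sensor_depth=[2.5, None],  # second pixel: no sensor return
    )
    print(f"loss = {loss:.4f}")
```

Masking out missing returns matters in practice because real depth sensors leave holes on dark, shiny, or distant surfaces.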

Key Terms

Stereo Vision (Depth Sensing)

Stereo vision in depth sensing uses two cameras to capture two perspectives of a scene and computes the disparity (the pixel offset of the same point between the two rectified images) to estimate depth, enabling 3D reconstruction. Because depth is inversely proportional to disparity, accuracy is highest at close range and degrades as distance increases. Neural Radiance Fields (NeRF) instead use deep learning to represent a scene as a continuous radiance field of color and density, producing high-fidelity 3D views but requiring significant computational resources and many training images. Explore the trade-offs between stereo vision's real-time depth estimation and NeRF's detailed scene representation to optimize applications like autonomous navigation and virtual reality.
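The disparity calculation follows from triangulation in a rectified stereo pair: depth is Z = f·B / d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity. Differentiating gives an approximate depth error of Z²/(f·B) per pixel of disparity error, which is why stereo accuracy falls off with distance. The function names, the 700 px focal length, the 0.12 m baseline, and the quarter-pixel matching error are hypothetical values chosen for illustration.

```python
def disparity_to_depth(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (hypothetical helper).

    disparity_px:    offset of the same point between left and right images
    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity (or the match failed).
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_error_m(depth_m: float, focal_length_px: float, baseline_m: float,
                  disparity_error_px: float) -> float:
    """Approximate depth error |dZ| ~ Z^2 / (f * B) * |dd|.

    Obtained by differentiating Z = f * B / d with respect to d.
    """
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    f_px, baseline = 700.0, 0.12  # hypothetical camera parameters
    z = disparity_to_depth(42.0, f_px, baseline)
    print(f"depth at 42 px disparity: {z:.2f} m")
    for distance in (2.0, 20.0):
        err = depth_error_m(distance, f_px, baseline, disparity_error_px=0.25)
        print(f"error at {distance:.0f} m for a 0.25 px disparity error: {err:.3f} m")
```

With these numbers, a quarter-pixel matching error costs about 1 cm at 2 m but more than 1 m at 20 m; that quadratic growth is what limits stereo at long range.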

Volumetric Rendering (Neural Radiance Fields)

Depth sensing captures spatial information by measuring the distance between the sensor and objects, enabling 3D scene reconstruction with real-world geometry. Neural Radiance Fields (NeRF) employ volumetric rendering to synthesize novel views: for each camera ray, the network predicts density and color at sample points along the ray, and these are alpha-composited into a single pixel color. Because the predicted color can depend on viewing direction, NeRF reproduces view-dependent effects such as specular highlights, producing photorealistic images. Explore more about how NeRF reshapes volumetric rendering and 3D scene representation through neural techniques.
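The compositing step can be sketched for a single ray using the discrete volume-rendering quadrature NeRF uses: each sample gets opacity αᵢ = 1 − exp(−σᵢδᵢ), it is attenuated by the transmittance Tᵢ = exp(−Σ_{j<i} σⱼδⱼ) of everything in front of it, and the pixel color is Σ Tᵢαᵢcᵢ. The same weights also give an expected depth Σ Tᵢαᵢtᵢ, which is how a NeRF can output depth maps comparable to a sensor's. In a real NeRF the densities and colors come from the network; here they are made-up values for a ray that crosses empty space and then hits an opaque red surface, and `render_ray` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(t_vals: Sequence[float], sigmas: Sequence[float],
               colors: Sequence[RGB]) -> Tuple[RGB, float, List[float]]:
    """Composite samples along one ray (hypothetical helper).

    alpha_i = 1 - exp(-sigma_i * delta_i)
    T_i     = exp(-sum_{j<i} sigma_j * delta_j)   (transmittance)
    w_i     = T_i * alpha_i
    colour  = sum_i w_i * c_i,   depth = sum_i w_i * t_i
    t_vals are distances along a unit-length ray direction.
    """
    n = len(t_vals)
    if n == 0 or len(sigmas) != n or len(colors) != n:
        raise ValueError("t_vals, sigmas and colors must be non-empty and equal length")
    weights: List[float] = []
    accumulated = 0.0  # running sum of sigma_j * delta_j for samples in front
    for i in range(n):
        # Gap to the next sample; the last interval is treated as effectively infinite.
        delta = t_vals[i + 1] - t_vals[i] if i + 1 < n else 1e10
        transmittance = math.exp(-accumulated)
        alpha = 1.0 - math.exp(-sigmas[i] * delta)
        weights.append(transmittance * alpha)
        accumulated += sigmas[i] * delta
    color = tuple(sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3))
    depth = sum(w * t for w, t in zip(weights, t_vals))
    return color, depth, weights


if __name__ == "__main__":
    # Made-up ray: empty space, then an opaque red surface starting at t = 2.0.
    t = [1.0, 1.5, 2.0, 2.5]
    sigma = [0.0, 0.0, 50.0, 50.0]
    rgb = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    pixel, depth, w = render_ray(t, sigma, rgb)
    print("pixel colour:", tuple(round(c, 3) for c in pixel))
    print("expected depth:", round(depth, 3))
```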

3D Reconstruction

Depth sensing technologies capture precise spatial information through sensors like LiDAR or structured light, enabling accurate 3D reconstruction in real-time applications. Neural Radiance Fields (NeRFs) utilize deep learning to generate photorealistic 3D scenes by optimizing volumetric scene representations from multiple images, offering higher detail and realism but requiring significant computational resources. Explore the advantages and trade-offs of depth sensing versus NeRFs to enhance your understanding of advanced 3D reconstruction techniques.
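Turning a depth map into 3D geometry, a first step in many depth-sensing reconstruction pipelines, can be sketched with the pinhole camera model. This assumes per-pixel depth measured along the optical axis (as a depth camera reports it, rather than raw LiDAR range) and known intrinsics fx, fy, cx, cy. The function name and the tiny 2x2 depth map are illustrative; full pipelines typically go on to fuse many such point clouds, for example with TSDF fusion, which this sketch omits.

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def depth_map_to_points(depth: Sequence[Sequence[Optional[float]]],
                        fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Back-project a depth map into camera-frame 3D points (hypothetical helper).

    Pinhole model, with (u, v) = (column, row) pixel coordinates and Z the
    depth along the optical axis:
        X = (u - cx) * Z / fx
        Y = (v - cy) * Z / fy
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0.0:
                continue  # skip holes and invalid sensor returns
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


if __name__ == "__main__":
    # Tiny made-up 2x2 depth map in metres; None marks a missing return.
    depth_map = [[1.0, 2.0],
                 [None, 4.0]]
    for p in depth_map_to_points(depth_map, fx=1.0, fy=1.0, cx=0.5, cy=0.5):
        print(p)
```

The same back-projection applies to a depth map rendered by a NeRF, which is one practical way to compare the two approaches on the same scene.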

Source and External Links

Depth Sensing definition and description - Depth sensing is a technology that measures the distance between a sensor and objects using methods like time-of-flight, stereo vision, or structured light, and is widely used in AR/VR, robotics, and autonomous vehicles for precise environmental perception.

What are depth-sensing cameras? How do they work? - Depth-sensing cameras provide a 3D perspective by measuring distances to objects in real-time, enabling autonomous machines to perform mapping, localization, path planning, and obstacle avoidance through technologies such as stereo vision, LiDAR, and time-of-flight.

Depth Sensing Overview - Stereolabs - Depth sensing replicates human binocular vision using stereo cameras that capture two perspectives to compute depth maps, enabling distance measurement up to 35 meters with applications indoors and outdoors, notably improving on traditional short-range sensors.

dowidth.com
