Differentiable programming leverages gradient-based methods to optimize complex models by exploiting their differentiable structures, enabling efficient learning in neural networks and other systems. Gradient-free optimization relies on techniques like evolutionary algorithms or random search, useful when gradients are unavailable or costly to compute. Explore the nuances and applications of these approaches to understand their impact on modern technology.

Why it is important

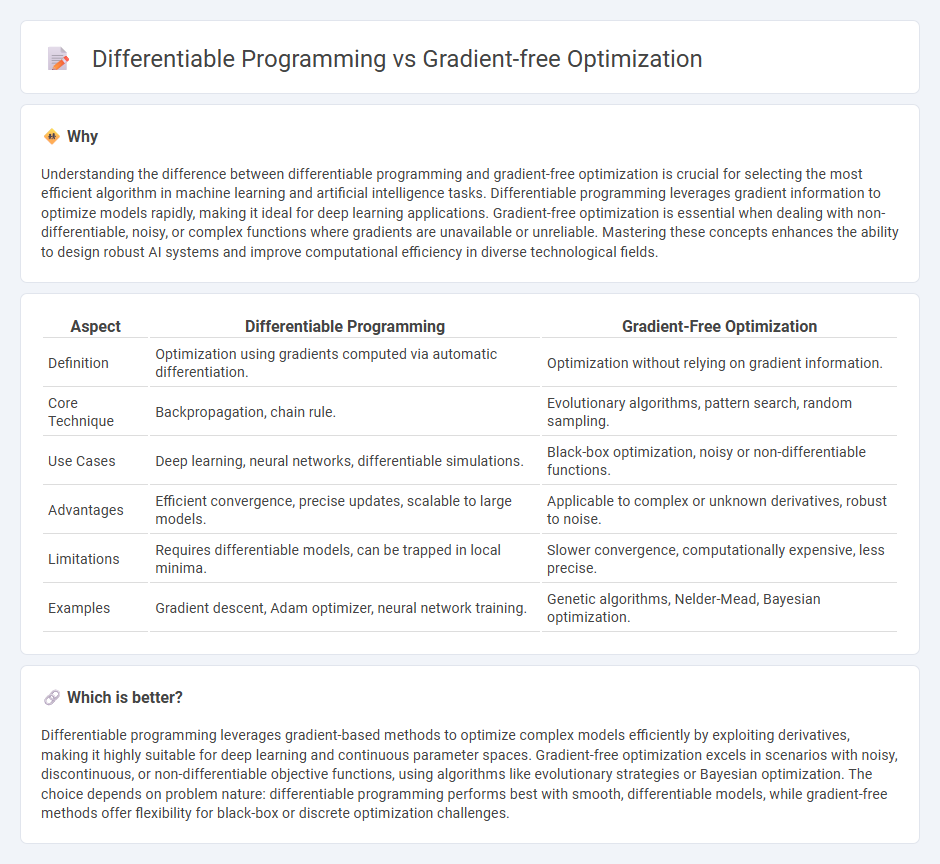

Understanding the difference between differentiable programming and gradient-free optimization is crucial for selecting the most efficient algorithm in machine learning and artificial intelligence tasks. Differentiable programming leverages gradient information to optimize models rapidly, making it ideal for deep learning applications. Gradient-free optimization is essential when dealing with non-differentiable, noisy, or complex functions where gradients are unavailable or unreliable. Mastering these concepts enhances the ability to design robust AI systems and improve computational efficiency in diverse technological fields.

Comparison Table

| Aspect | Differentiable Programming | Gradient-Free Optimization |

|---|---|---|

| Definition | Optimization using gradients computed via automatic differentiation. | Optimization without relying on gradient information. |

| Core Technique | Backpropagation, chain rule. | Evolutionary algorithms, pattern search, random sampling. |

| Use Cases | Deep learning, neural networks, differentiable simulations. | Black-box optimization, noisy or non-differentiable functions. |

| Advantages | Efficient convergence, precise updates, scalable to large models. | Applicable to complex or unknown derivatives, robust to noise. |

| Limitations | Requires differentiable models, can be trapped in local minima. | Slower convergence, computationally expensive, less precise. |

| Examples | Gradient descent, Adam optimizer, neural network training. | Genetic algorithms, Nelder-Mead, Bayesian optimization. |

Which is better?

Differentiable programming leverages gradient-based methods to optimize complex models efficiently by exploiting derivatives, making it highly suitable for deep learning and continuous parameter spaces. Gradient-free optimization excels in scenarios with noisy, discontinuous, or non-differentiable objective functions, using algorithms like evolutionary strategies or Bayesian optimization. The choice depends on problem nature: differentiable programming performs best with smooth, differentiable models, while gradient-free methods offer flexibility for black-box or discrete optimization challenges.

Connection

Differentiable programming leverages gradient information for efficient model training, while gradient-free optimization relies on heuristic or evolutionary strategies when gradients are unavailable or unreliable. Both approaches intersect in scenarios where hybrid methods use gradient approximations combined with gradient-free techniques to optimize complex functions. This synergy enhances performance in machine learning tasks involving non-differentiable models or noisy environments.

Key Terms

Black-box Optimization

Gradient-free optimization excels in black-box scenarios where the objective function lacks explicit gradients or is noisy and expensive to evaluate, relying on methods like evolutionary algorithms and Bayesian optimization. Differentiable programming depends on gradient information through techniques like automatic differentiation, making it less suitable for black-box problems but powerful in structured domains. Explore further to understand how these approaches impact black-box optimization performance and applicability.

Automatic Differentiation

Gradient-free optimization methods, such as genetic algorithms or Nelder-Mead, rely on function evaluations rather than gradient information, making them suitable for black-box problems where derivatives are unavailable. Differentiable programming leverages Automatic Differentiation (AD) to compute exact gradients efficiently, enabling gradient-based optimization techniques like gradient descent to solve complex models with high precision. Explore further to understand how integrating AD transforms optimization landscapes and accelerates machine learning workflows.

Loss Function

Gradient-free optimization techniques optimize loss functions without relying on gradient information, making them suitable for non-differentiable or noisy objective landscapes. Differentiable programming leverages gradient-based methods to minimize loss functions by computing explicit derivatives, enabling efficient training of complex models like neural networks. Explore more to understand how these approaches impact optimization strategies and model performance.

Source and External Links

Derivative-free optimization - Wikipedia - Derivative-free optimization, also called blackbox optimization, refers to methods that find optimal solutions without using derivatives, useful when derivatives are unavailable, noisy, or impractical; common algorithms include genetic algorithms, particle swarm optimization, CMA-ES, Nelder-Mead, and Bayesian optimization.

Types of gradient-free optimizers - YouTube - Gradient-free optimizers encompass various methods such as genetic algorithms, particle swarm methods, and simplex methods, with the best choice depending on the problem's nature, including multimodality and discreteness.

Gradient-free neural topology optimization - arXiv - Recent research improves the scalability and performance of gradient-free optimization in complex design problems by combining machine learning strategies, narrowing the gap with gradient-based methods in non-convex settings.

dowidth.com

dowidth.com