Neural Radiance Fields (NeRF) represent a 3D scene as a continuous function, typically a neural network, that encodes volumetric radiance and density, enabling photorealistic novel view synthesis from a set of posed 2D images. Mesh reconstruction traditionally relies on explicit surface models generated from point clouds or depth data; these models are easy to render and edit but often carry less fine detail and have difficulty capturing complex lighting effects. This article compares NeRF and mesh reconstruction and their impact on 3D imaging and virtual reality.

Why it is important

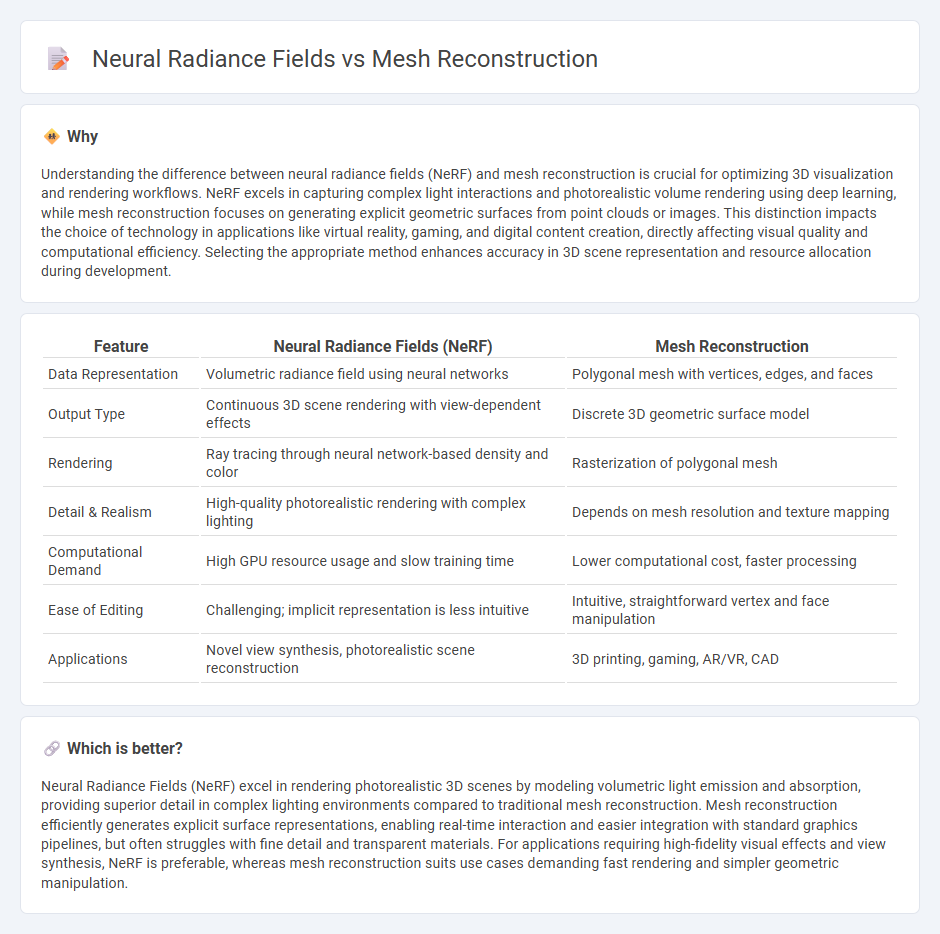

Understanding the difference between neural radiance fields (NeRF) and mesh reconstruction is crucial for optimizing 3D visualization and rendering workflows. NeRF excels at capturing complex light interactions through learned, photorealistic volume rendering, while mesh reconstruction focuses on generating explicit geometric surfaces from point clouds or images. This distinction drives the choice of technology in applications like virtual reality, gaming, and digital content creation, because it directly affects visual quality and computational cost. Choosing the method that matches the application's needs keeps the scene representation accurate where it matters and avoids spending compute on fidelity the application cannot use.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Mesh Reconstruction |
|---|---|---|
| Data Representation | Volumetric radiance field using neural networks | Polygonal mesh with vertices, edges, and faces |
| Output Type | Continuous 3D scene rendering with view-dependent effects | Discrete 3D geometric surface model |
| Rendering | Volume rendering (ray marching) through neural network-based density and color | Rasterization of polygonal mesh |
| Detail & Realism | High-quality photorealistic rendering with complex lighting | Depends on mesh resolution and texture mapping |
| Computational Demand | High GPU resource usage and slow training time | Typically lower computational cost, faster processing |
| Ease of Editing | Challenging; implicit representation is less intuitive | Intuitive, straightforward vertex and face manipulation |
| Applications | Novel view synthesis, photorealistic scene reconstruction | 3D printing, gaming, AR/VR, CAD |

Which is better?

Neural Radiance Fields (NeRF) excel in rendering photorealistic 3D scenes by modeling volumetric light emission and absorption, providing superior detail in complex lighting environments compared to traditional mesh reconstruction. Mesh reconstruction efficiently generates explicit surface representations, enabling real-time interaction and easier integration with standard graphics pipelines, but often struggles with fine detail and transparent materials. For applications requiring high-fidelity visual effects and view synthesis, NeRF is preferable, whereas mesh reconstruction suits use cases demanding fast rendering and simpler geometric manipulation.

Connection

Neural radiance fields (NeRF) enable high-fidelity 3D scene representation by modeling volumetric light emission and absorption. Mesh reconstruction complements this: a surface can be extracted from the learned density field, for example with an isosurface algorithm such as marching cubes. Combining the two pairs photorealistic rendering with explicit surface geometry, which is useful in applications such as virtual reality, computer graphics, and autonomous navigation, where detailed spatial understanding is essential.

Key Terms

Polygon Mesh

A polygon mesh is an explicit 3D geometry representation built from vertices, edges, and faces, which allows efficient rendering and easy integration into traditional graphics pipelines. Neural Radiance Fields (NeRF) instead generate continuous volumetric scenes by learning implicit functions that model light emission and absorption; they produce photorealistic views but no direct polygonal output. Applications that need precise mesh models and real-time interaction therefore usually favor polygon mesh reconstruction.
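As a minimal sketch of the explicit representation described above, a triangle mesh can be stored as one array of vertex positions and one array of faces that index into it. The tetrahedron data and the helper names `unique_edges` and `face_areas` are illustrative assumptions, not part of any particular library.

```python
import numpy as np

# Hypothetical example data: a tetrahedron with one corner at the origin.
vertices = np.array([
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])

# Each face is a triple of indices into `vertices`.
faces = np.array([
    [0, 2, 1],
    [0, 1, 3],
    [0, 3, 2],
    [1, 2, 3],
])


def unique_edges(faces):
    """Return the undirected edges of a triangle mesh as sorted index pairs."""
    pairs = np.concatenate(
        [faces[:, [0, 1]], faces[:, [1, 2]], faces[:, [2, 0]]]
    )
    pairs = np.sort(pairs, axis=1)     # make (a, b) and (b, a) identical
    return np.unique(pairs, axis=0)    # drop edges shared by two faces


def face_areas(vertices, faces):
    """Return the area of every triangle via the cross product."""
    a = vertices[faces[:, 0]]
    b = vertices[faces[:, 1]]
    c = vertices[faces[:, 2]]
    return 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)


edges = unique_edges(faces)
areas = face_areas(vertices, faces)
print(len(vertices), len(edges), len(faces))
print(areas)
```

Because the geometry is stored explicitly, editing the shape amounts to changing rows of `vertices` or `faces`, which is why meshes fit so directly into rasterization pipelines and modeling tools.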

Volumetric Rendering

Mesh reconstruction creates explicit 3D models by defining vertices, edges, and faces, enabling efficient rendering and editing, while neural radiance fields (NeRFs) use deep learning to represent continuous volumetric scenes through spatially varying radiance and density functions optimized for novel view synthesis. Volumetric rendering in NeRF integrates these fields along camera rays: densities and colors sampled along each ray are composited front to back into a single pixel color. This produces highly photorealistic images with fine details and complex lighting, and it often handles semi-transparent and specular (view-dependent) effects better than traditional mesh-based approaches.
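The integration along a camera ray can be sketched with the standard discrete approximation: each sample contributes its color weighted by its opacity and by the fraction of light that survives the samples in front of it. The function name `composite_ray` and the sample values are assumptions for illustration; in an actual NeRF the densities and colors would come from the trained network rather than fixed arrays.

```python
import numpy as np


def composite_ray(sigmas, colors, deltas):
    """Composite samples along one ray into an RGB color.

    sigmas: (N,) densities at the samples (hypothetical values here)
    colors: (N, 3) RGB colors at the samples
    deltas: (N,) distances between consecutive samples
    """
    # Opacity of each segment: alpha_i = 1 - exp(-sigma_i * delta_i)
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: fraction of light reaching sample i unblocked,
    # i.e. the product of (1 - alpha_j) over all earlier samples j < i.
    transmittance = np.concatenate([[1.0], np.cumprod(1.0 - alphas[:-1])])
    weights = transmittance * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights


# Three samples: empty space, a semi-transparent red region, an opaque blue wall.
sigmas = np.array([0.0, 1.0, 1000.0])
colors = np.array([
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
])
deltas = np.array([0.5, 0.5, 0.5])

rgb, weights = composite_ray(sigmas, colors, deltas)
print(rgb)
print(weights)
```

In this example the red region only partially blocks the ray, so the final color is a blend of red and the blue wall behind it. This is the kind of semi-transparent effect that a single opaque mesh surface does not capture directly.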

Implicit Representation

Implicit representations in mesh reconstruction model 3D shapes using continuous functions, such as signed distance or occupancy functions, enabling smooth surfaces and detailed geometry without explicit vertex storage; a mesh can then be extracted from the function where needed. Neural Radiance Fields (NeRF) use implicit functions to encode volumetric scene information as view-dependent color and density, and are optimized for photorealistic rendering through differentiable volumetric integration.
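To make the idea of an implicit shape concrete, the sketch below uses an analytic signed distance function for a sphere as a stand-in for a learned network. The name `sphere_sdf` and the query points are assumptions for illustration; a neural implicit model would replace the formula with a trained function but would be queried in the same way.

```python
import numpy as np


def sphere_sdf(points, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere: negative inside, zero on the surface,
    positive outside. No vertices or faces are stored anywhere."""
    points = np.asarray(points, dtype=float)
    center = np.asarray(center, dtype=float)
    return np.linalg.norm(points - center, axis=-1) - radius


# The shape can be queried at any continuous location.
queries = np.array([
    [0.0, 0.0, 0.0],   # center of the sphere
    [1.0, 0.0, 0.0],   # on the surface
    [2.0, 0.0, 0.0],   # outside
])
distances = sphere_sdf(queries)
print(distances)

# Sampling the function on a grid gives an occupancy volume, which is the
# usual starting point for extracting an explicit mesh.
axis = np.linspace(-1.5, 1.5, 16)
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)
occupancy = sphere_sdf(grid) < 0.0
print(occupancy.shape, occupancy.sum())
```

The surface is defined only as the zero level set of the function, so its resolution is limited by how densely the function is sampled rather than by a fixed vertex count.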
