Homomorphic encryption enables computation on encrypted data without decrypting it, which preserves privacy when data is processed in cloud computing and confidential AI applications. Federated learning trains machine learning models across decentralized devices by sharing model updates instead of raw data, which keeps the data on the device and avoids transferring raw datasets in edge computing. This article compares the two privacy-preserving technologies and their use cases.

Why it is important

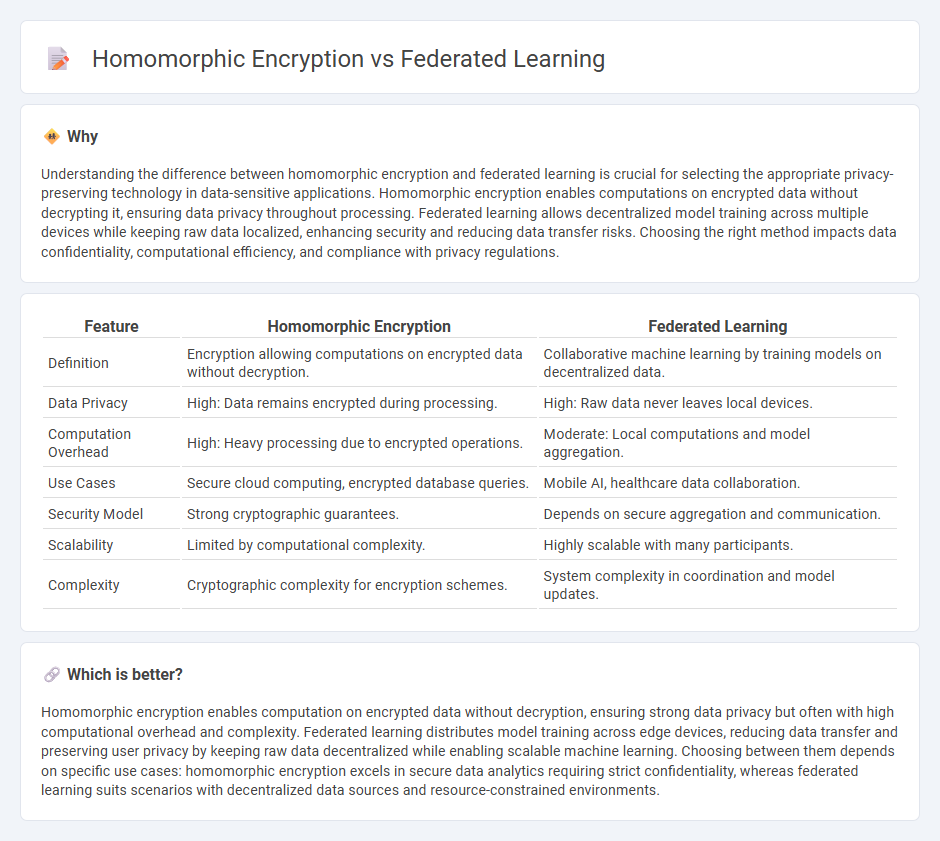

Understanding the difference between homomorphic encryption and federated learning is crucial for selecting the appropriate privacy-preserving technology in data-sensitive applications. Homomorphic encryption keeps data encrypted while it is being processed, so the party doing the computation never sees the plaintext. Federated learning trains a model across multiple devices while keeping raw data local, which reduces the risks that come with transferring data. The choice affects data confidentiality, computational efficiency, and compliance with privacy regulations.

Comparison Table

| Feature | Homomorphic Encryption | Federated Learning |
|---|---|---|
| Definition | Encryption allowing computations on encrypted data without decryption. | Collaborative machine learning by training models on decentralized data. |
| Data Privacy | High: Data remains encrypted during processing. | High: Raw data never leaves local devices. |
| Computation Overhead | High: Heavy processing due to encrypted operations. | Moderate: Local computations and model aggregation. |
| Use Cases | Secure cloud computing, encrypted database queries. | Mobile AI, healthcare data collaboration. |
| Security Model | Strong cryptographic guarantees. | Depends on secure aggregation and communication. |
| Scalability | Limited by computational complexity. | Highly scalable with many participants. |
| Complexity | Cryptographic complexity for encryption schemes. | System complexity in coordination and model updates. |

Which is better?

Homomorphic encryption enables computation on encrypted data without decryption, ensuring strong data privacy but often with high computational overhead and complexity. Federated learning distributes model training across edge devices, reducing data transfer and preserving user privacy by keeping raw data decentralized while enabling scalable machine learning. Choosing between them depends on specific use cases: homomorphic encryption excels in secure data analytics requiring strict confidentiality, whereas federated learning suits scenarios with decentralized data sources and resource-constrained environments.

Connection

The two technologies can be combined. In federated learning, multiple devices collaboratively train a model by sending updates to an aggregator, and those updates can still reveal information about the local data. Encrypting the updates with a homomorphic scheme lets the aggregator combine them without seeing any individual update, which makes homomorphic encryption one way to implement secure aggregation of model updates. Combining these technologies enhances secure, privacy-preserving machine learning across distributed systems.
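As a rough illustration of aggregating encrypted model updates, the sketch below uses a toy version of the Paillier cryptosystem, an additively homomorphic scheme chosen here for illustration (the text above does not name a specific scheme). The primes are far too small for real use, the fixed-point `SCALE`, the function names, and the sample updates are all hypothetical, and the sketch assumes the decryption key is held by someone other than the aggregating server.

```python
import math
import secrets

# Toy Paillier parameters built from two known Mersenne primes.
# Assumption for illustration only: real deployments need much larger keys.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q                  # public key
N2 = N * N
PHI = (P - 1) * (Q - 1)    # private key material
MU = pow(PHI, -1, N)       # private: inverse of PHI modulo N
SCALE = 1000               # hypothetical fixed-point scale for floats


def encrypt(value: float) -> int:
    """Encrypt a float under the public key N (generator g = N + 1)."""
    m = round(value * SCALE) % N          # negatives wrap around modulo N
    while True:
        r = secrets.randbelow(N - 1) + 1  # fresh randomness per ciphertext
        if math.gcd(r, N) == 1:
            break
    return (1 + m * N) * pow(r, N, N2) % N2


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying two ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % N2


def decrypt(c: int) -> float:
    """Decrypt with the private values PHI and MU."""
    m = (pow(c, PHI, N2) - 1) // N * MU % N
    if m > N // 2:                        # map back to a signed integer
        m -= N
    return m / SCALE


def aggregate(encrypted_updates):
    """Server side: sum each coordinate without decrypting anything."""
    total = list(encrypted_updates[0])
    for update in encrypted_updates[1:]:
        total = [add_encrypted(a, b) for a, b in zip(total, update)]
    return total


# Hypothetical model updates from three clients.
client_updates = [[0.5, -1.25], [0.25, 0.75], [-0.125, 0.5]]
encrypted = [[encrypt(w) for w in update] for update in client_updates]
encrypted_sum = aggregate(encrypted)

# Only the key holder can read the aggregate, never an individual update.
summed = [decrypt(c) for c in encrypted_sum]
average = [s / len(client_updates) for s in summed]
print(summed, average)
```

The server only ever multiplies ciphertexts, so it learns nothing about a single client's update. The cost is visible even in this toy: every addition becomes a modular multiplication on very large integers, which is where the computational overhead of homomorphic encryption comes from.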

Key Terms

Data privacy

Federated learning enhances data privacy by enabling model training directly on decentralized devices, so raw data remains local and the risk of exposure is reduced. Homomorphic encryption protects data by allowing computations on encrypted information without decryption, maintaining confidentiality throughout processing. The two safeguard sensitive information at different points: federated learning limits what leaves the device, while homomorphic encryption protects data that has already left it.

Decentralized computation

Federated learning enables decentralized computation by allowing multiple devices to collaboratively train machine learning models without sharing raw data. Each device computes on its own data and sends only model updates, which enhances privacy and avoids moving raw datasets over the network. Homomorphic encryption supports decentralized computation in a different way: encrypted data can be handed to another party and processed there directly, preserving data confidentiality throughout the computation.
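The training loop described above can be sketched with federated averaging (FedAvg), a common aggregation rule used here as an assumption since the text does not name a specific algorithm. The linear model, learning rate, round count, and client datasets below are hypothetical and chosen only to keep the example small.

```python
def local_update(weights, data, lr=0.05, epochs=5):
    """Client side: a few gradient-descent epochs on private local data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights, client_sizes):
    """Server side: average client models, weighted by local sample count."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


# Hypothetical clients. Every point lies on y = 2x + 1, and the raw
# (x, y) pairs are only ever read inside local_update.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (-1.0, -1.0)],
    [(0.5, 2.0), (-2.0, -3.0)],
]

global_weights = [0.0, 0.0]
for _ in range(200):
    # Each round: broadcast the global model, train locally, average.
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)  # approaches the slope 2 and intercept 1
```

Only the two model parameters travel between clients and server in each round. Weighting by sample count means clients with more data have proportionally more influence on the global model.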

Secure computation

Federated learning enables collaborative model training across decentralized devices while keeping raw data local, and it reduces privacy risks further through secure aggregation of gradients. Homomorphic encryption allows computations directly on encrypted data without decryption, ensuring data privacy during processing but often incurring significant computational overhead. Combining the two, for example by encrypting gradients before they are aggregated, strengthens secure computation for privacy-preserving machine learning.
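Secure aggregation of gradients does not have to rely on homomorphic encryption. One lighter-weight approach is pairwise additive masking, sketched below under several assumptions: each pair of clients already shares a secret seed, no client drops out, and Python's `random.Random` stands in for a cryptographic pseudorandom generator. The names `mask_update` and `unmask_sum`, the modulus, and the sample gradients are hypothetical.

```python
import random
import secrets
from itertools import combinations

MODULUS = 2**32   # all arithmetic is done modulo this value
SCALE = 1000      # hypothetical fixed-point scale for floats


def mask_update(client_id, update, pair_seeds):
    """Client side: hide an update behind masks shared with every peer."""
    masked = [round(v * SCALE) % MODULUS for v in update]
    for (i, j), seed in pair_seeds.items():
        if client_id not in (i, j):
            continue
        rng = random.Random(seed)  # stand-in for a cryptographic PRG
        sign = 1 if client_id == i else -1  # one peer adds, the other subtracts
        masked = [(m + sign * rng.randrange(MODULUS)) % MODULUS for m in masked]
    return masked


def unmask_sum(masked_updates):
    """Server side: the pairwise masks cancel when everything is summed."""
    dim = len(masked_updates[0])
    totals = [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]
    signed = [t - MODULUS if t > MODULUS // 2 else t for t in totals]
    return [s / SCALE for s in signed]


# Hypothetical gradients from three clients.
gradients = {0: [0.5, -1.25], 1: [0.25, 0.75], 2: [-0.125, 0.5]}

# Assumption: each pair of clients agreed on a seed out of band.
pair_seeds = {pair: secrets.randbits(64) for pair in combinations(gradients, 2)}

masked = [mask_update(cid, g, pair_seeds) for cid, g in gradients.items()]
gradient_sum = unmask_sum(masked)
print(gradient_sum)
```

Each masked vector looks random to the server on its own, yet the sum is exact because every mask is added by one client and subtracted by another. Compared with homomorphic encryption this is cheap to compute, but it needs extra machinery to cope with clients that disconnect mid-round.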

