Generative Adversarial Networks (GANs) pit two neural networks against each other to generate realistic data, and are widely used for image synthesis and data augmentation. Recurrent Neural Networks (RNNs) process sequences one step at a time, which suits them to natural language processing, time series analysis, and speech recognition. This article compares the two architectures and their applications.

Why it is important

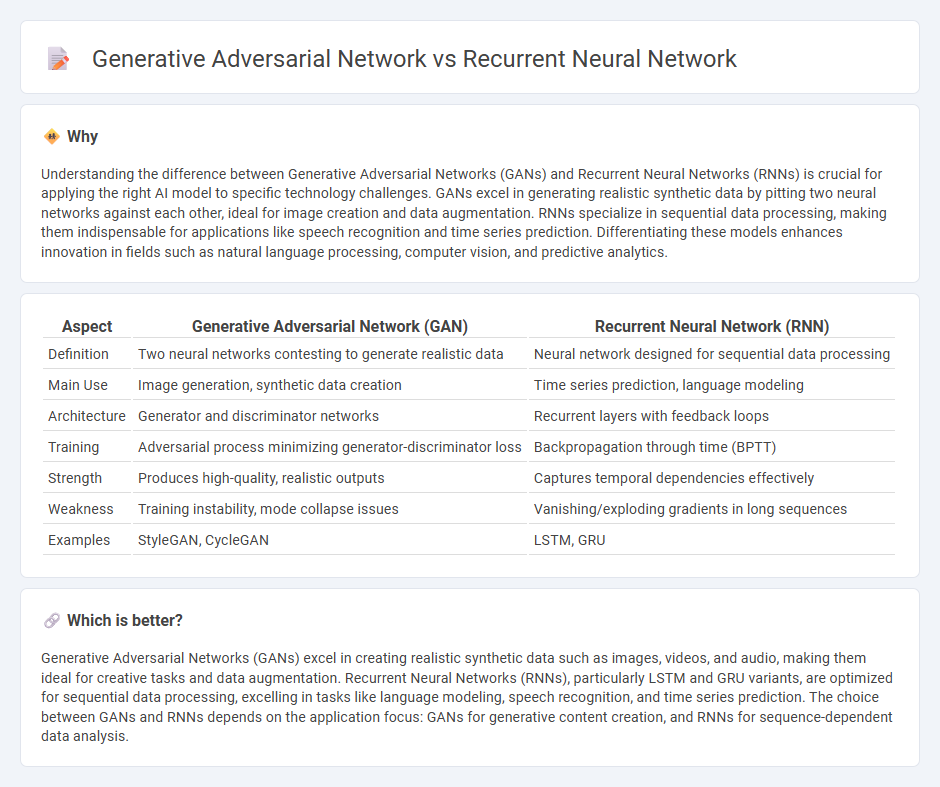

Understanding the difference between Generative Adversarial Networks (GANs) and Recurrent Neural Networks (RNNs) matters because the two solve different problems. GANs generate realistic synthetic data by training two neural networks against each other, which makes them a fit for image creation and data augmentation. RNNs process sequential data, which makes them a fit for applications like speech recognition and time series prediction. Matching the model to the shape of the data, independent samples versus ordered sequences, is the first design decision in natural language processing, computer vision, and predictive analytics projects.

Comparison Table

| Aspect | Generative Adversarial Network (GAN) | Recurrent Neural Network (RNN) |
|---|---|---|
| Definition | Two neural networks trained in competition to generate realistic data | Neural network designed for sequential data processing |
| Main Use | Image generation, synthetic data creation | Time series prediction, language modeling |
| Architecture | Generator and discriminator networks | Recurrent layers with feedback loops |
| Training | Adversarial (minimax) training: the discriminator learns to separate real from generated samples while the generator learns to fool it | Backpropagation through time (BPTT) |
| Strength | Produces high-quality, realistic outputs | Captures temporal dependencies effectively |
| Weakness | Training instability, mode collapse | Vanishing or exploding gradients on long sequences |
| Examples | StyleGAN, CycleGAN | LSTM, GRU |
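The vanishing and exploding gradients listed as the RNN weakness can be illustrated with a toy calculation. The sketch below is not a full BPTT implementation: it assumes a scalar recurrence with no inputs, and the function name `gradient_through_time` and its parameters are hypothetical, chosen only for illustration. It multiplies the per-step derivatives that the chain rule produces when a gradient is carried back over many time steps.

```python
import math


def gradient_through_time(w, steps, h0=0.5, use_tanh=True):
    """Return d h_T / d h_0 for a scalar recurrence with weight w.

    With use_tanh=True the recurrence is h_t = tanh(w * h_{t-1});
    otherwise it is the linear recurrence h_t = w * h_{t-1}.
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        if use_tanh:
            h = math.tanh(w * h)
            # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
            grad *= w * (1.0 - h * h)
        else:
            h = w * h
            # Linear case: d h_t / d h_{t-1} = w
            grad *= w
    return grad


# A weight below 1 shrinks the gradient at every step (vanishing).
vanishing = gradient_through_time(0.5, steps=50)
# A weight above 1 in the linear case grows it at every step (exploding).
exploding = gradient_through_time(1.5, steps=50, use_tanh=False)
print(vanishing, exploding)
```

Gated variants such as LSTM and GRU were designed to reduce the vanishing case by giving the state a more direct path across time steps.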

Which is better?

Neither architecture is better in general, because they address different tasks. Generative Adversarial Networks (GANs) create realistic synthetic data such as images, video, and audio, which makes them a fit for creative tasks and data augmentation. Recurrent Neural Networks (RNNs), particularly the LSTM and GRU variants, are built for sequential data and are used for language modeling, speech recognition, and time series prediction. The choice depends on the application: GANs for generating new content, RNNs for analyzing or predicting data where order matters.

Connection

Generative Adversarial Networks (GANs) and Recurrent Neural Networks (RNNs) are complementary rather than competing: GANs generate high-quality synthetic data, and RNNs process sequential information. The two can be combined by building a GAN's generator or discriminator from recurrent layers, so that adversarial training is applied to sequences. This is useful in applications like video and speech synthesis, where the temporal dependencies captured by RNNs improve the realism of data generated by GANs. The same combination of adversarial training and sequential learning has been applied to natural language processing and time series modeling.

Key Terms

Sequence Modeling

Recurrent Neural Networks (RNNs) handle sequence modeling by capturing temporal dependencies in data, which makes them well suited to tasks like language modeling and time series prediction. Generative Adversarial Networks (GANs) are designed primarily to generate realistic data samples and are less commonly used for sequence modeling, because adversarial training is harder to stabilize on sequential data.

Generator-Discriminator

A GAN consists of a Generator that synthesizes data and a Discriminator that judges whether a sample is real or generated, trained in competition with each other. The adversarial mechanism improves generation quality by continuously refining the Generator based on feedback from the Discriminator. Traditional RNN architectures have no such dynamic: they process sequential data by maintaining a hidden state across time steps and are trained against a fixed loss.
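The feedback loop between the two networks can be made concrete by looking at the losses each one minimizes. The sketch below assumes the Discriminator outputs a probability that a sample is real, and it uses the non-saturating generator loss, a common practical variant rather than the only option. The function names and the `eps` clamp are hypothetical helpers for illustration; no actual networks are trained here.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: D wants scores on real data near 1, on fakes near 0."""
    real_term = -sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating loss: G wants D to score its fakes near 1."""
    return -sum(math.log(max(p, eps)) for p in d_fake) / len(d_fake)


# Early in training (assumed scores): D easily spots the fakes.
early_d = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
early_g = generator_loss(d_fake=[0.1, 0.2])

# Later (assumed scores): G's samples fool D more often.
late_d = discriminator_loss(d_real=[0.6, 0.7], d_fake=[0.5, 0.6])
late_g = generator_loss(d_fake=[0.5, 0.6])

print(early_d, early_g)
print(late_d, late_g)
```

As the Generator improves, its loss falls while the Discriminator's rises. This opposition is what makes training adversarial, and it is also one source of the instability noted in the comparison table.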

Temporal Dependency

Recurrent Neural Networks (RNNs) capture temporal dependencies in sequential data through their feedback loops: the hidden state computed at one time step is fed back in at the next. This makes them well suited to tasks such as time series prediction and natural language processing. Generative Adversarial Networks (GANs), while powerful at generating realistic data, have no inherent mechanism for modeling temporal dependencies and need specialized architectures such as Temporal GANs to do so.
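The feedback loop can be sketched with a scalar version of the standard vanilla RNN update, h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). The weights below are arbitrary values assumed for illustration, not trained parameters, and `rnn_forward` is a hypothetical helper name.

```python
import math


def rnn_forward(inputs, w_x=0.8, w_h=0.5, b=0.0, h0=0.0):
    """Run a scalar vanilla RNN and return the hidden state after each step."""
    h = h0
    states = []
    for x in inputs:
        # The previous hidden state h feeds back into the new one.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Same values, different order: the final hidden states differ.
early_pulse = rnn_forward([1.0, 0.0, 0.0])
late_pulse = rnn_forward([0.0, 0.0, 1.0])
print(early_pulse[-1], late_pulse[-1])
```

In the first sequence the last two inputs are zero, yet the final hidden state is still nonzero because it carries a decayed trace of the first input. That order-sensitive memory is what a plain feed-forward generator lacks.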

