Edge intelligence processes data locally on edge devices, reducing latency and improving real-time decision-making for applications like autonomous vehicles and smart cities. On-device AI specifically integrates machine learning models directly into hardware, enabling offline functionality and enhanced privacy in smartphones and wearable devices. Explore the distinct benefits and use cases of edge intelligence versus on-device AI to optimize your technology strategy.

Why it is important

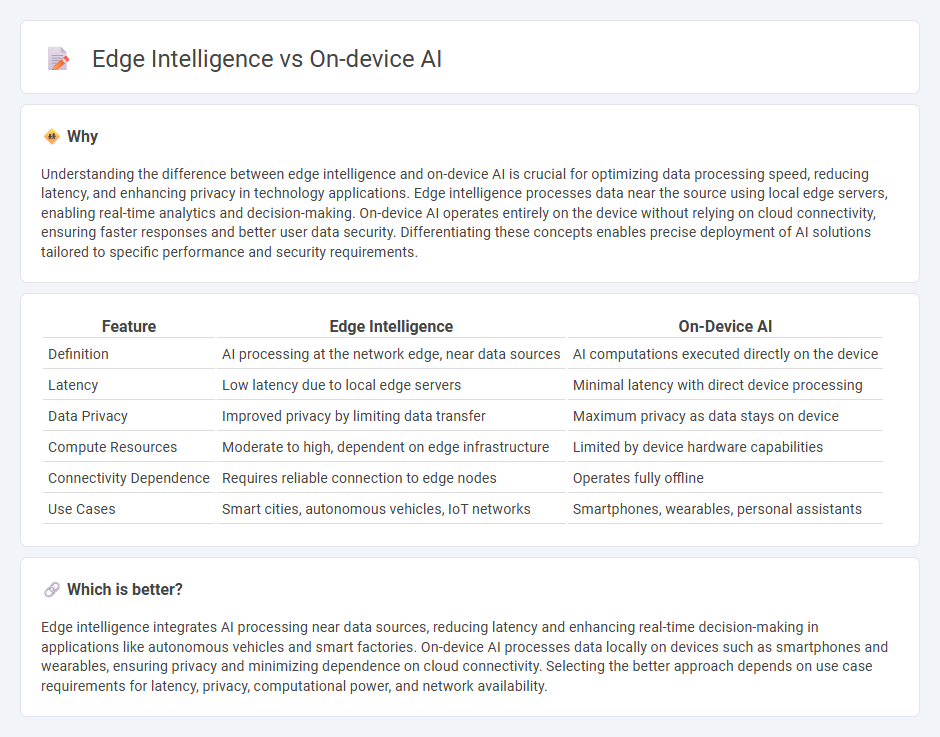

Understanding the difference between edge intelligence and on-device AI is crucial for optimizing data processing speed, reducing latency, and enhancing privacy in technology applications. Edge intelligence processes data near the source using local edge servers, enabling real-time analytics and decision-making. On-device AI operates entirely on the device without relying on cloud connectivity, ensuring faster responses and better user data security. Differentiating these concepts enables precise deployment of AI solutions tailored to specific performance and security requirements.

Comparison Table

| Feature | Edge Intelligence | On-Device AI |
|---|---|---|
| Definition | AI processing at the network edge, near data sources | AI computations executed directly on the device |
| Latency | Low latency due to local edge servers | Minimal latency with direct device processing |
| Data Privacy | Improved privacy by limiting data transfer | Maximum privacy as data stays on device |
| Compute Resources | Moderate to high, dependent on edge infrastructure | Limited by device hardware capabilities |
| Connectivity Dependence | Requires reliable connection to edge nodes | Operates fully offline |
| Use Cases | Smart cities, autonomous vehicles, IoT networks | Smartphones, wearables, personal assistants |

Which is better?

Edge intelligence integrates AI processing near data sources, reducing latency and enhancing real-time decision-making in applications like autonomous vehicles and smart factories. On-device AI processes data locally on devices such as smartphones and wearables, ensuring privacy and minimizing dependence on cloud connectivity. Selecting the better approach depends on use case requirements for latency, privacy, computational power, and network availability.
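The selection criteria above can be sketched as a small rule-based helper. This is only an illustration under simplifying assumptions: the `Requirements` fields, the returned labels, and the `choose_deployment` function are hypothetical names invented for this example, and a real decision would weigh measured latency, cost, and hardware data rather than four booleans.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical summary of an application's constraints (illustrative only)."""
    must_work_offline: bool             # has to keep working with no network
    raw_data_must_stay_on_device: bool  # privacy rule: raw data may not leave
    model_fits_on_device: bool          # device has enough compute and memory
    edge_node_reachable: bool           # a nearby edge server is available


def choose_deployment(req: Requirements) -> str:
    """Pick a deployment style from the constraints (assumed rules, not a standard)."""
    if req.model_fits_on_device:
        # Running locally satisfies the offline and privacy constraints.
        return "on-device"
    if req.must_work_offline or req.raw_data_must_stay_on_device:
        # The device is required but cannot host the model as it stands.
        return "shrink the model or relax a constraint"
    if req.edge_node_reachable:
        # No hard device constraint, so a nearby edge node can do the work.
        return "edge"
    return "no local option available"


if __name__ == "__main__":
    wearable = Requirements(True, True, True, False)
    traffic_camera = Requirements(False, False, False, True)
    print(choose_deployment(wearable))        # on-device
    print(choose_deployment(traffic_camera))  # edge
```

The helper prefers on-device whenever the model fits, which reflects the privacy and offline advantages described above; an application with heavier models and a dependable edge network could reasonably reverse that preference.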

Connection

Edge intelligence integrates on-device AI to process data locally, reducing latency and improving real-time decision-making capabilities. By deploying AI algorithms directly on edge devices, systems optimize bandwidth usage and enhance privacy through minimized data transmission. This synergy enables more efficient and autonomous operations across IoT, autonomous vehicles, and smart manufacturing sectors.

Key Terms

Model Deployment

On-device AI enables models to run locally on hardware such as smartphones or IoT devices, reducing latency and enhancing privacy by eliminating the need for constant cloud connectivity. Edge intelligence expands this capability by distributing AI processing across edge servers and devices, optimizing resource utilization and enabling real-time analytics closer to data sources.
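Whether a model can be deployed on the device at all often comes down to its memory footprint. The sketch below estimates weight storage from the parameter count and numeric precision, then picks a placement. The function names, the 50-million-parameter model, and the 100 MB budget are assumptions made up for illustration; the estimate covers weights only and ignores activations and runtime overhead.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


def placement(num_params: int, dtype: str, device_budget_mb: float) -> str:
    """Run on the device if the weights fit the budget, else use an edge server."""
    if model_size_mb(num_params, dtype) <= device_budget_mb:
        return "on-device"
    return "edge server"


if __name__ == "__main__":
    params = 50_000_000  # hypothetical model size
    budget = 100.0       # hypothetical memory budget on the device, in MB
    for dtype in ("float32", "int8"):
        size = model_size_mb(params, dtype)
        print(dtype, size, placement(params, dtype, budget))
    # float32 200.0 edge server
    # int8 50.0 on-device
```

In this example the same model moves from the edge server to the device once its weights are stored as 8-bit integers, which is why quantization is a common step when preparing models for on-device inference.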

Latency

On-device AI processes data locally on the device, minimizing latency by eliminating the need for data transmission to external servers. Edge intelligence combines local processing with edge servers, offering reduced latency but depending on network conditions for optimal performance.
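The dependence on network conditions can be made concrete with a simple additive model: an on-device request costs only the local inference time, while an edge request also pays a network round trip. The function names and all millisecond values below are illustrative assumptions, not measurements.

```python
def on_device_latency_ms(device_inference_ms: float) -> float:
    """On-device: no network hop, only local compute time."""
    return device_inference_ms


def edge_latency_ms(round_trip_ms: float, edge_inference_ms: float) -> float:
    """Edge: network round trip to the edge node plus compute time there."""
    return round_trip_ms + edge_inference_ms


if __name__ == "__main__":
    device_ms = 20.0  # hypothetical inference time on a phone
    edge_ms = 5.0     # hypothetical inference time on an edge server
    for rtt in (10.0, 50.0):  # good vs. congested network (assumed values)
        print(rtt, on_device_latency_ms(device_ms), edge_latency_ms(rtt, edge_ms))
    # 10.0 20.0 15.0
    # 50.0 20.0 55.0
```

Under these assumed numbers the on-device figure stays constant, while the edge figure swings with the round-trip time, so the edge path is only competitive when the network is fast and stable.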

Data Privacy

On-device AI processes data locally on a user's device, which reduces the risk of data breaches by minimizing data transmission to external servers. Edge intelligence extends AI capabilities to edge devices like routers or gateways, balancing real-time processing with privacy concerns through decentralized data handling.

Source and External Links

On-device artificial intelligence | European Data Protection Supervisor - On-device AI is AI implemented and executed directly on end devices like smartphones and wearables, enabling real-time decision-making with minimized latency, enhanced privacy, and reduced data transmission by processing data locally rather than relying on the cloud.

On-Device AI: Powering the Future of Computing | Coursera - On-device AI processes data locally to provide faster responses and more personalized interactions, closely related to edge computing, which reduces reliance on cloud servers for increased speed, privacy, and reliability.

Introduction to On-Device AI - DeepLearning.AI - This course covers deploying AI models on edge devices like smartphones by optimizing models for device compatibility, improving performance through quantization, and leveraging hardware acceleration to enable efficient, secure on-device inference.

dowidth.com
