Edge AI processes data close to its source, on local devices, gateways, or servers at the network's edge, which lowers latency and improves privacy compared with traditional cloud computing. On-device AI narrows this further: AI models run directly on individual devices, supporting real-time decision-making and reducing reliance on constant internet connectivity.

Why it is important

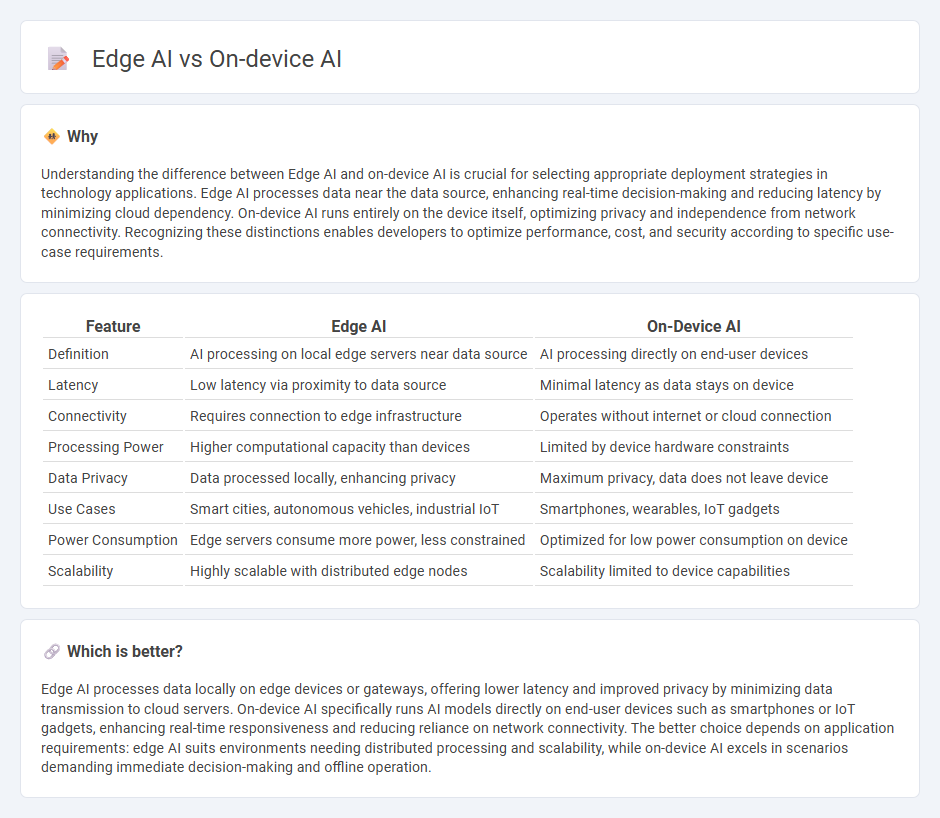

Understanding the difference between Edge AI and on-device AI is crucial for choosing an appropriate deployment strategy. Edge AI processes data near the data source, which reduces latency and cloud dependency and supports real-time decision-making. On-device AI runs entirely on the device itself, which maximizes privacy and lets applications work without a network connection. Recognizing these distinctions lets developers balance performance, cost, and security against the requirements of each use case.

Comparison Table

| Feature | Edge AI | On-Device AI |
|---|---|---|
| Definition | AI processing on local edge servers or gateways near the data source | AI processing directly on end-user devices |
| Latency | Low, due to proximity to the data source | Minimal, as data stays on the device |
| Connectivity | Requires a connection to edge infrastructure | Operates without an internet or cloud connection |
| Processing Power | Higher computational capacity than end-user devices | Limited by device hardware constraints |
| Data Privacy | Data processed locally rather than in the cloud, enhancing privacy | Maximum privacy, as data does not leave the device |
| Use Cases | Smart cities, autonomous vehicles, industrial IoT | Smartphones, wearables, IoT gadgets |
| Power Consumption | Edge servers consume more power but are less power-constrained | Optimized for low power consumption |
| Scalability | Highly scalable through distributed edge nodes | Limited by each device's capabilities |

Which is better?

Edge AI processes data locally on edge devices or gateways, offering lower latency and improved privacy by minimizing data transmission to cloud servers. On-device AI runs AI models directly on end-user devices such as smartphones or IoT gadgets, improving real-time responsiveness and reducing reliance on network connectivity. Neither is better in general; the right choice depends on the application. Edge AI suits workloads that need distributed processing, more compute, and scalability, while on-device AI excels when decisions must be immediate or the device must operate offline.
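The trade-offs above can be condensed into a rough decision rule. The Python sketch below is illustrative only: the `Requirements` fields and the `choose_deployment` function are hypothetical names, and the order of the checks is one reasonable reading of the comparison, not a standard method.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    # Hypothetical fields chosen for illustration, not a standard schema.
    must_work_offline: bool         # device may have no network at all
    raw_data_must_stay_local: bool  # sensitive data may not leave the device
    needs_multi_device_view: bool   # e.g. fusing feeds from many cameras
    model_fits_on_device: bool      # model runs within device compute/memory


def choose_deployment(req: Requirements) -> str:
    """Return 'on-device', 'edge', or 'infeasible' from coarse requirements."""
    local_only = req.must_work_offline or req.raw_data_must_stay_local
    if local_only:
        # Offline operation or strict data locality rules out edge servers,
        # so the model must fit on the device or the constraints must change.
        return "on-device" if req.model_fits_on_device else "infeasible"
    if req.needs_multi_device_view or not req.model_fits_on_device:
        # Aggregating many devices or needing more compute favors an edge node.
        return "edge"
    # Otherwise prefer the device: no network hop and data stays local.
    return "on-device"
```

For example, a camera network that fuses feeds from many intersections would get `"edge"`, while a wearable that must keep working without a connection would get `"on-device"`.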

Connection

Edge AI and on-device AI are closely related: both process data locally rather than in a remote cloud, which minimizes latency and supports real-time decision-making. Both rely on advances in hardware acceleration, such as specialized AI chips, to run machine learning inference efficiently without depending on cloud connectivity. Together they improve privacy, reduce bandwidth usage, and support applications such as autonomous vehicles, smart cameras, and wearable devices.

Key Terms

Processing Localization

On-device AI processes data directly within the device, minimizing latency and enhancing privacy because no data is transmitted to external servers. Edge AI also keeps processing local, but runs it on nearby edge servers or gateways, trading a short network hop for the extra computational power that complex tasks need while still responding in real time.
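Whether a model can run locally at all often comes down to simple arithmetic on its size. The sketch below is an assumption-laden illustration: `weights_size_mb` and `placement` are hypothetical helpers that estimate weight storage as parameter count times bytes per parameter and compare it with an assumed device memory budget. They ignore activations and runtime overhead, so a real deployment needs more headroom than this suggests.

```python
def weights_size_mb(num_params: int, bytes_per_param: float) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


def placement(num_params: int, bytes_per_param: float, device_budget_mb: float) -> str:
    """Hypothetical rule: run locally only if the weights fit the device budget.

    Ignores activations, runtime overhead, and compute speed.
    """
    size_mb = weights_size_mb(num_params, bytes_per_param)
    return "on-device" if size_mb <= device_budget_mb else "edge"


if __name__ == "__main__":
    # 25 million float32 parameters (4 bytes each) need about 100 MB.
    print(weights_size_mb(25_000_000, 4))  # 100.0
    print(placement(25_000_000, 4, 50))    # edge
    print(placement(5_000_000, 4, 50))     # on-device
```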

Latency

On-device AI processes data directly on the user's device, minimizing latency because no data has to be transmitted to external servers. Edge AI runs on local edge servers close to the data source; this reduces latency compared with cloud-based processing, but communication between devices and edge nodes can add a small delay.
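The latency trade-off can be written as a simple sum. This sketch assumes end-to-end latency is just network transfer time plus inference time (queuing, serialization, and pre-processing are ignored); the function names are hypothetical and all figures are illustrative inputs, not measurements.

```python
def on_device_latency_ms(device_infer_ms: float) -> float:
    # Data never leaves the device: latency is local inference time only.
    return device_infer_ms


def edge_latency_ms(uplink_ms: float, edge_infer_ms: float, downlink_ms: float) -> float:
    # Input travels to the edge node, inference runs there, the result returns.
    return uplink_ms + edge_infer_ms + downlink_ms


def faster_option(device_infer_ms: float, uplink_ms: float,
                  edge_infer_ms: float, downlink_ms: float) -> str:
    # Ties go to the device, since it also avoids a network dependency.
    local = on_device_latency_ms(device_infer_ms)
    remote = edge_latency_ms(uplink_ms, edge_infer_ms, downlink_ms)
    return "on-device" if local <= remote else "edge"


if __name__ == "__main__":
    print(faster_option(40, 5, 10, 5))  # edge: 20 ms beats 40 ms
    print(faster_option(15, 5, 10, 5))  # on-device: 15 ms beats 20 ms
```

With these hypothetical figures, a slow device needing 40 ms loses to an edge node that answers in 5 + 10 + 5 = 20 ms, while a device needing only 15 ms wins. The edge node pays off only when its faster inference saves more time than the network round trip costs.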

Data Privacy

On-device AI processes data locally on the user's device, which minimizes data exposure and strengthens privacy because sensitive information never has to be sent to external servers. Edge AI runs on edge servers closer to the data source; this reduces latency, but data still travels across a network, which can introduce additional privacy risks.
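To make the privacy difference concrete, the sketch below contrasts what each approach sends over the network. The threshold "model" in `classify_locally` and the JSON payload format are hypothetical stand-ins; the point is only that on-device inference transmits a derived result, whereas edge inference must transmit the raw readings.

```python
import json


def classify_locally(samples: list[float]) -> str:
    # Hypothetical stand-in for a real model: flag high average readings.
    mean = sum(samples) / len(samples)
    return "alert" if mean > 0.5 else "normal"


def payload_on_device(samples: list[float]) -> str:
    # On-device AI: inference runs locally, so only the label leaves the device.
    return json.dumps({"label": classify_locally(samples)})


def payload_edge(samples: list[float]) -> str:
    # Edge AI: raw readings are sent to the edge node, which runs inference there.
    return json.dumps({"samples": samples})


if __name__ == "__main__":
    readings = [0.2, 0.9, 0.7]  # e.g. sensitive sensor values
    print(payload_on_device(readings))
    print(payload_edge(readings))
```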


dowidth.com

dowidth.com