Edge inference processes data locally on devices, enabling real-time decisions with low latency and better privacy. Batch inference processes large datasets on centralized servers, making efficient use of compute for large-scale model evaluation. Edge inference suits applications that need immediate responses, such as autonomous vehicles and IoT devices. Batch inference is ideal for offline analytics and periodically refreshed predictions in cloud environments. Combining the two lets a system respond instantly where data is generated while still analyzing aggregated data centrally.

Why it is important

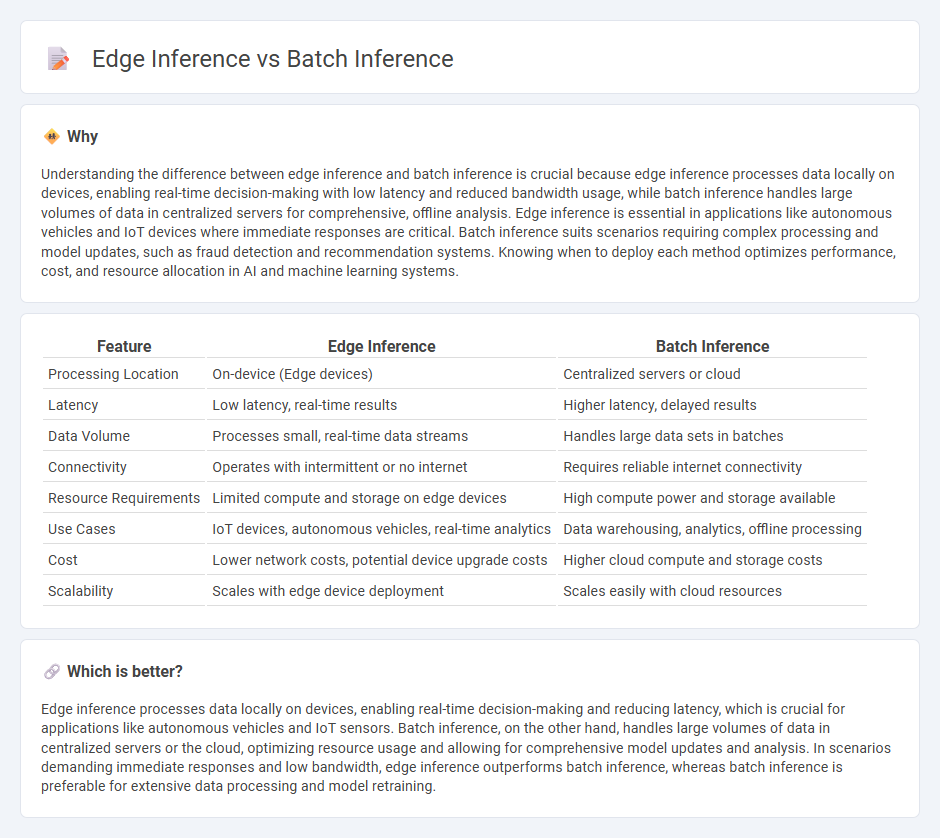

Understanding the difference between edge inference and batch inference matters because the two make opposite trade-offs. Edge inference processes data locally on devices, enabling real-time decisions with low latency and reduced bandwidth usage. Batch inference processes large volumes of data on centralized servers for comprehensive offline analysis. Edge inference is essential where immediate responses are critical, as in autonomous vehicles and IoT devices. Batch inference suits workloads that need heavy processing but not an instant answer, such as scoring historical transactions for fraud or precomputing recommendations. Knowing when to use each approach helps balance performance, cost, and resource allocation in machine learning systems.
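The contrast can be sketched in a few lines of Python. In this minimal sketch, `toy_model`, `edge_infer`, and `batch_infer` are hypothetical names, and a simple threshold rule stands in for a trained model. The point is only where and when each pattern produces its predictions.

```python
from typing import Callable, Iterable, Iterator, List, Sequence


def toy_model(reading: float) -> int:
    """Hypothetical stand-in for a trained model: flag readings above 0.8."""
    return 1 if reading > 0.8 else 0


def edge_infer(stream: Iterable[float], model: Callable[[float], int]) -> Iterator[int]:
    """Edge pattern: score each reading on the device as soon as it arrives."""
    for reading in stream:
        yield model(reading)  # result is available immediately, no network hop


def batch_infer(dataset: Sequence[float], model: Callable[[float], int],
                batch_size: int = 4) -> List[int]:
    """Batch pattern: score a stored dataset in fixed-size chunks, e.g. on a schedule."""
    predictions: List[int] = []
    for start in range(0, len(dataset), batch_size):
        chunk = dataset[start:start + batch_size]
        predictions.extend(model(x) for x in chunk)  # results are stored for later use
    return predictions


readings = [0.2, 0.9, 0.5, 0.95, 0.1, 0.85]
print(list(edge_infer(readings, toy_model)))  # [0, 1, 0, 1, 0, 1]
print(batch_infer(readings, toy_model))       # [0, 1, 0, 1, 0, 1]
```

Both functions return the same predictions. What differs is that `edge_infer` yields each result the moment a reading arrives, whereas `batch_infer` returns nothing until the whole stored dataset has been processed.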

Comparison Table

| Feature | Edge Inference | Batch Inference |
|---|---|---|
| Processing location | On-device (edge devices) | Centralized servers or cloud |
| Latency | Low; results in real time | Higher; results arrive after the batch job completes |
| Data volume | Small, real-time data streams | Large datasets processed in batches |
| Connectivity | Works with intermittent or no internet access | Requires reliable connectivity to move data to central servers |
| Resource requirements | Limited compute and storage on each device | Abundant compute and storage |
| Use cases | IoT devices, autonomous vehicles, real-time analytics | Data warehousing, analytics, offline processing |
| Cost | Lower network costs; possible device upgrade costs | Higher cloud compute and storage costs |
| Scalability | Scales with the number of deployed edge devices | Scales easily with cloud resources |

Which is better?

Neither approach is better in general; the right choice depends on the workload. Edge inference processes data locally on devices, enabling real-time decisions and reducing latency, which is crucial for applications like autonomous vehicles and IoT sensors. Batch inference processes large volumes of data on centralized servers or in the cloud, using resources efficiently and supporting comprehensive analysis. When a workload demands immediate responses or must conserve bandwidth, edge inference is the better fit. When it involves extensive data processing, such as scoring an entire dataset on a schedule or regenerating predictions after a model is retrained, batch inference is preferable.
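One way to make this trade-off explicit is a small rule of thumb. The function below is a hypothetical sketch, not a standard method: it assumes a batch job runs every `batch_interval_s` seconds and that edge devices can keep working offline.

```python
def choose_inference_mode(latency_budget_s: float,
                          batch_interval_s: float,
                          connectivity_reliable: bool) -> str:
    """Hypothetical heuristic for choosing between edge and batch inference."""
    # Without dependable connectivity, data may never reach the central servers.
    if not connectivity_reliable:
        return "edge"
    # If results are needed sooner than the next scheduled batch run, batch is too slow.
    if latency_budget_s < batch_interval_s:
        return "edge"
    # Otherwise the results can wait, so centralized batch processing is acceptable.
    return "batch"


print(choose_inference_mode(0.05, 3600, True))    # edge (decision needed within 50 ms)
print(choose_inference_mode(86400, 3600, True))   # batch (daily report, hourly job)
```

A real decision would also weigh cost, model size, and privacy, as the comparison table shows; this sketch covers only latency and connectivity.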

Connection

Edge inference and batch inference are complementary. Edge inference computes close to the data source, reducing latency and bandwidth usage, while batch inference analyzes large datasets centrally, often in the cloud. Together they balance real-time responsiveness at the edge with high-throughput processing in batch mode. In an integrated setup, devices act on local predictions immediately and send the data they collect to a central pipeline; the aggregated analysis there can inform updated models that are redeployed to the devices, improving accuracy over time.
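The integration loop can be sketched as follows. Everything here is hypothetical: `EdgeDevice` and `central_batch_update` are illustrative names, the "model" is a single anomaly threshold, and the batch job simply recomputes that threshold as the mean plus two population standard deviations of the aggregated readings.

```python
from statistics import mean, pstdev
from typing import List


class EdgeDevice:
    """Hypothetical edge device: decides locally and buffers readings for upload."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.buffer: List[float] = []

    def infer(self, reading: float) -> bool:
        self.buffer.append(reading)       # keep the raw reading for the next batch job
        return reading > self.threshold   # immediate local decision, no network needed

    def upload(self) -> List[float]:
        data, self.buffer = self.buffer, []  # hand over buffered data and start fresh
        return data


def central_batch_update(readings: List[float]) -> float:
    """Hypothetical batch job: derive a new threshold from data aggregated across devices."""
    return mean(readings) + 2 * pstdev(readings)


devices = [EdgeDevice(threshold=6.0), EdgeDevice(threshold=6.0)]
for r in [2.0, 4.0, 4.0, 4.0]:
    devices[0].infer(r)
for r in [5.0, 5.0, 7.0, 9.0]:
    devices[1].infer(r)

# Batch step: aggregate everything the fleet collected, then push the result back out.
aggregated = [x for d in devices for x in d.upload()]
new_threshold = central_batch_update(aggregated)
for d in devices:
    d.threshold = new_threshold

print(new_threshold)  # 9.0
```

The devices never wait on the central job to make a decision; they only pick up its output the next time a threshold (or, in practice, a model) is pushed to them.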

Key Terms

Latency

Batch inference processes large volumes of data together, so latency is higher: each record waits for its batch to be collected and then processed. Edge inference runs on the device itself, which cuts latency substantially because data does not have to travel to a centralized server.
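A back-of-the-envelope model makes the latency gap concrete. This sketch assumes records arrive uniformly and a batch job runs once per window; the function names and all numbers in the example are hypothetical. Edge latency is modeled as local compute time only, since there is no batching wait and no transfer.

```python
from typing import Tuple


def batch_latency_s(window_s: float, transfer_s: float, processing_s: float) -> Tuple[float, float]:
    """Return (average, worst-case) seconds from a record's arrival to its prediction.

    A record waits on average half a window (worst case: a full window) for the
    next batch run, then for the data transfer and the batch processing itself.
    """
    average = window_s / 2 + transfer_s + processing_s
    worst = window_s + transfer_s + processing_s
    return average, worst


def edge_latency_s(local_compute_s: float) -> float:
    """On-device inference: no batching wait and no transfer, only local compute."""
    return local_compute_s


avg, worst = batch_latency_s(window_s=3600.0, transfer_s=30.0, processing_s=300.0)
print(avg, worst)            # 2130.0 3930.0
print(edge_latency_s(0.02))  # 0.02
```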

Scalability

Batch inference processes large volumes of data at once on powerful centralized servers and cloud infrastructure, so it scales to very large datasets by adding compute. Edge inference runs models locally on each device, so total capacity grows as more devices are deployed, but each individual device is limited by its own hardware.
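The two scaling patterns can be sketched with simple capacity arithmetic. The functions and figures below are hypothetical, and the batch estimate assumes throughput grows linearly with the number of workers, which ignores coordination overhead.

```python
import math


def batch_workers_needed(num_records: int, records_per_worker_per_s: float,
                         deadline_s: float) -> int:
    """Batch: parallel workers required to score every record before the deadline.

    Assumes perfectly linear scaling across centralized workers.
    """
    per_worker_capacity = records_per_worker_per_s * deadline_s
    return math.ceil(num_records / per_worker_capacity)


def edge_fleet_capacity(num_devices: int, records_per_device_per_s: float) -> float:
    """Edge: each device scores only its own stream, so fleet capacity grows with
    the number of devices."""
    return num_devices * records_per_device_per_s


def device_keeps_up(arrival_rate_per_s: float, scoring_rate_per_s: float) -> bool:
    """Edge: a device whose data arrives faster than it can score falls behind,
    no matter how large the fleet is."""
    return scoring_rate_per_s >= arrival_rate_per_s


print(batch_workers_needed(10_000_000, 500, 3600))  # 6
print(edge_fleet_capacity(1_000, 30))               # 30000
print(device_keeps_up(50, 30))                      # False
```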

Resource Constraints

Batch inference runs on centralized servers with ample computing power, maximizing throughput but consuming significant storage and energy. Edge inference runs models on devices with limited CPU, memory, and battery capacity, prioritizing low latency and reduced data transmission. These constraints often decide the choice: a model that exceeds a device's memory or power budget cannot run at the edge as-is.
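A quick feasibility check shows how memory limits can rule out edge deployment. This sketch is hypothetical: it counts only model weights, assumes 4 bytes per parameter (32-bit floats), and reserves half of the device's memory for the operating system, activations, and other workloads through an assumed `weight_share` parameter.

```python
BYTES_PER_MIB = 1024 ** 2


def model_memory_mib(num_params: int, bytes_per_param: int = 4) -> float:
    """Approximate size of a model's weights in MiB (4 bytes per 32-bit float)."""
    return num_params * bytes_per_param / BYTES_PER_MIB


def fits_on_device(num_params: int, device_memory_mib: float,
                   bytes_per_param: int = 4, weight_share: float = 0.5) -> bool:
    """Hypothetical check: weights must fit in the share of device memory set aside for them."""
    return model_memory_mib(num_params, bytes_per_param) <= device_memory_mib * weight_share


print(round(model_memory_mib(25_000_000), 1))  # 95.4
print(fits_on_device(25_000_000, 128))         # False (~95.4 MiB needed, 64 MiB budget)
print(fits_on_device(5_000_000, 128))          # True (~19.1 MiB needed)
```

A centralized batch server with tens of gigabytes of memory would hold either model easily, which is one reason larger models tend to run in batch mode.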


dowidth.com
