Neuromorphic chips mimic the neural architecture of the human brain, which makes them highly efficient at pattern recognition and sensory data processing. GPUs excel at parallel processing for graphics rendering and general-purpose computation. Unlike GPUs, neuromorphic chips are designed for low power consumption and, on some platforms, real-time on-chip adaptive learning, which makes them well suited to AI applications that must respond to changing input. This article compares neuromorphic technology with traditional GPU architectures and the innovations driving each.

Why it is important

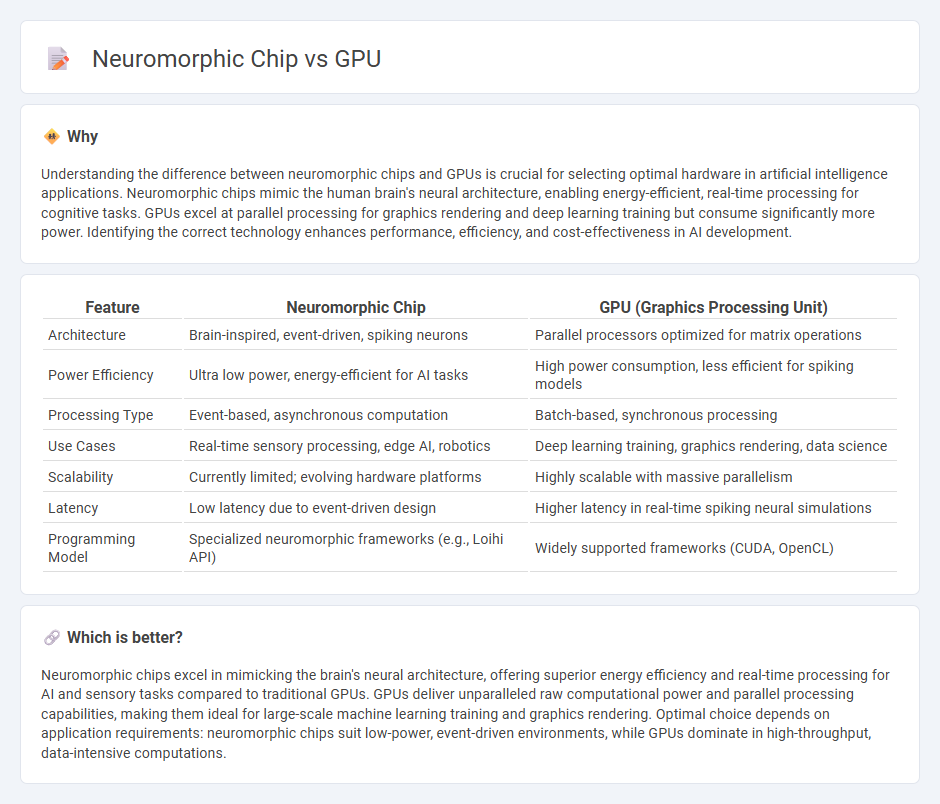

Understanding the difference between neuromorphic chips and GPUs is crucial for selecting the right hardware for artificial intelligence applications. Neuromorphic chips mimic the human brain's neural architecture, enabling energy-efficient, real-time processing for cognitive tasks. GPUs excel at parallel processing for graphics rendering and deep learning training but consume significantly more power. Choosing the right technology improves performance, efficiency, and cost-effectiveness in AI development.

Comparison Table

| Feature | Neuromorphic Chip | GPU (Graphics Processing Unit) |
|---|---|---|
| Architecture | Brain-inspired, event-driven, spiking neurons | Parallel processors optimized for matrix operations |
| Power Efficiency | Ultra-low power; energy-efficient for AI tasks | High power consumption; less efficient for spiking models |
| Processing Type | Event-based, asynchronous computation | Batch-based, synchronous processing |
| Use Cases | Real-time sensory processing, edge AI, robotics | Deep learning training, graphics rendering, data science |
| Scalability | Currently limited; hardware platforms still evolving | Highly scalable with massive parallelism |
| Latency | Low latency due to event-driven design | Higher latency when simulating spiking networks in real time |
| Programming Model | Specialized neuromorphic frameworks (e.g., Intel's Lava framework for Loihi) | Widely supported platforms and frameworks (e.g., CUDA, OpenCL) |
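
The "Processing Type" row is the core architectural difference. The sketch below is a simplified software model, not how any particular chip or GPU works internally: the network size, tick count, and event list are hypothetical. A clock-driven (synchronous) loop visits every neuron on every tick, while an event-driven (asynchronous) loop pulls timestamped events from a priority queue and touches only the neurons they address. Both reach the same final state, but the amount of work differs sharply when input is sparse.

```python
import heapq

NUM_NEURONS = 1_000   # hypothetical network size
NUM_TICKS = 100       # hypothetical simulation length, in time steps

# Hypothetical sparse input: (timestamp, neuron_id, weight) events,
# deliberately listed out of time order.
EVENTS = [(3, 7, 0.5), (1, 42, 1.0), (3, 7, 0.25), (90, 999, 0.8)]


def clock_driven(events, num_neurons, num_ticks):
    """Synchronous, batch-style update: every neuron is touched on every tick."""
    state = [0.0] * num_neurons
    updates = 0
    for t in range(num_ticks):
        incoming = [0.0] * num_neurons        # dense input vector, mostly zeros
        for time, neuron, weight in events:
            if time == t:
                incoming[neuron] += weight
        for n in range(num_neurons):
            state[n] += incoming[n]           # one update per neuron per tick
            updates += 1
    return state, updates


def event_driven(events, num_neurons):
    """Asynchronous update: only neurons addressed by an event are touched."""
    state = [0.0] * num_neurons
    queue = list(events)
    heapq.heapify(queue)                      # pop events in timestamp order
    updates = 0
    while queue:
        _time, neuron, weight = heapq.heappop(queue)
        state[neuron] += weight
        updates += 1
    return state, updates


dense_state, dense_updates = clock_driven(EVENTS, NUM_NEURONS, NUM_TICKS)
sparse_state, sparse_updates = event_driven(EVENTS, NUM_NEURONS)
print(dense_updates, sparse_updates)          # 100000 4
print(dense_state == sparse_state)            # True
```

Both functions model a pure accumulator with no leak, which keeps the comparison exact. A realistic event-driven neuron would also need to apply the decay for the time elapsed since its last event when the next one arrives.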

Which is better?

Neuromorphic chips excel at mimicking the brain's neural architecture, offering better energy efficiency and real-time processing than traditional GPUs for event-driven AI and sensory tasks. GPUs deliver high raw computational throughput and massive parallelism, making them the standard choice for large-scale machine learning training and graphics rendering. The optimal choice depends on the application: neuromorphic chips suit low-power, event-driven environments, while GPUs dominate high-throughput, data-intensive computation.

Connection

Neuromorphic chips and GPUs both accelerate computing but differ in architecture: neuromorphic chips mimic brain-like neural networks for efficient AI inference, while GPUs handle parallel processing for large-scale data tasks. The two can be complementary. Spiking models are often trained or simulated on GPUs and then deployed to neuromorphic hardware, combining energy-efficient spiking computation with the versatility of the GPU software ecosystem. This hybrid approach supports advances in AI, robotics, and real-time data processing.
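
One common bridge between the two worlds is converting the dense, real-valued activations a conventional network produces into spike trains that spiking hardware can carry. The sketch below uses a deterministic rate code, one of several encoding schemes: each value in [0, 1] is added to an accumulator every time step and a spike is emitted whenever the accumulator reaches 1, so the spike rate approximates the original value. The function names and the 8-step window are hypothetical choices for illustration; full ANN-to-SNN conversion pipelines also rescale weights and firing thresholds, which is omitted here.

```python
def rate_encode(activations: list[float], num_steps: int) -> list[list[int]]:
    """Encode each activation in [0, 1] as a binary spike train of length num_steps.

    Deterministic rate code: the value is added to an accumulator each step,
    and a spike (1) is emitted whenever the accumulator reaches 1.0.
    """
    trains = []
    for value in activations:
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"activation {value} outside [0, 1]")
        acc = 0.0
        train = []
        for _ in range(num_steps):
            acc += value
            if acc >= 1.0:
                train.append(1)
                acc -= 1.0        # keep the remainder for the next spike
            else:
                train.append(0)
        trains.append(train)
    return trains


def rate_decode(trains: list[list[int]]) -> list[float]:
    """Recover an approximate activation as spikes per time step."""
    return [sum(train) / len(train) for train in trains]


activations = [0.0, 0.25, 0.5, 1.0]   # e.g. normalized outputs of a dense layer
trains = rate_encode(activations, num_steps=8)
print(trains[1])                       # [0, 0, 0, 1, 0, 0, 0, 1]
print(rate_decode(trains))             # [0.0, 0.25, 0.5, 1.0]
```

The trade-off is precision versus time: a longer window represents values more finely but delays the result, which is one reason latency matters when spiking and non-spiking systems are combined.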

Key Terms

Parallel Processing

GPUs excel at parallel processing, using thousands of cores to perform many computations simultaneously, which makes them ideal for graphics rendering and deep learning. Neuromorphic chips mimic the brain's neural architecture and process in parallel in an event-driven way: neurons compute only when spikes arrive, which suits real-time sensory data and adaptive learning. The two technologies differ in architecture and application, and each brings distinct strengths to parallel processing.
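
GPU-style parallelism works because many results are independent of each other. In a matrix product, for example, each output row depends only on one row of the first matrix and on the second matrix, so every row can be handed to a separate worker. The sketch below shows that decomposition with Python's `ThreadPoolExecutor`; it illustrates the structure only and is not a performance model. CPython's global interpreter lock prevents real speedup for this pure-Python arithmetic, whereas GPU frameworks launch thousands of hardware threads for the same kind of independent work. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial

Matrix = list[list[float]]


def output_row(row: list[float], b: Matrix) -> list[float]:
    """One output row of A @ B; it reads only its own row of A plus B."""
    return [sum(x * b[k][j] for k, x in enumerate(row)) for j in range(len(b[0]))]


def data_parallel_matmul(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Dispatch each independent output row to a worker; map() preserves row order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(partial(output_row, b=b), a))


print(data_parallel_matmul([[1, 2], [3, 4], [5, 6]], [[1, 1], [0, 2]]))
# [[1, 5], [3, 11], [5, 17]]
```

Because no output row needs another row's result, the rows can be computed in any order or all at once, which is exactly the property batched, synchronous GPU workloads exploit.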

Spiking Neural Networks

Spiking Neural Networks (SNNs) communicate through discrete spikes. Neuromorphic chips such as Intel's Loihi and IBM's TrueNorth are built to run SNNs, which makes them highly efficient for low-power, real-time sensory processing, whereas GPUs are optimized for the dense matrix computations of conventional deep learning. GPUs process artificial neural networks in parallel very effectively, but neuromorphic chips mimic biological neural architectures and compute only when events occur, which can drastically reduce the energy consumed by SNN workloads. For energy-constrained and latency-sensitive SNN applications, neuromorphic hardware can overcome limitations that GPUs face.
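
A widely used building block for SNNs is the leaky integrate-and-fire (LIF) neuron. In a simple discrete-time form, the membrane potential decays by a factor `beta` each step, adds the incoming current, and emits a spike (then resets to zero) when it reaches a threshold. The sketch below uses that form with hypothetical parameter values; neuromorphic chips implement their own variants of this model in hardware, and their exact dynamics differ.

```python
def lif_neuron(inputs: list[float], beta: float = 0.5, threshold: float = 1.0) -> list[int]:
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    Each step: the membrane potential decays by `beta`, adds the input
    current, and emits a spike (1) with reset to 0 when it reaches `threshold`.
    """
    v = 0.0
    spikes = []
    for current in inputs:
        v = beta * v + current      # leak, then integrate
        if v >= threshold:
            spikes.append(1)        # fire
            v = 0.0                 # reset-to-zero after a spike
        else:
            spikes.append(0)
    return spikes


# Strong input (0.75) accumulates past the threshold every second step;
# weaker input (0.5) leaks away before it ever reaches 1.0.
print(lif_neuron([0.75, 0.75, 0.75, 0.75, 0.5, 0.5, 0.5]))
# [0, 1, 0, 1, 0, 0, 0]
```

The output is sparse and binary: most steps carry no spike, and that sparsity is what event-driven hardware turns into energy savings.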

Energy Efficiency

Neuromorphic chips mimic the brain's architecture and can consume significantly less energy than GPUs because they handle sparse data efficiently: neurons that receive no spikes do little or no work. GPUs, designed for general-purpose parallel computation, typically draw more power for comparable workloads, especially in AI and machine learning applications. This makes neuromorphic technology a promising direction for energy-efficient computing research.
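
The efficiency argument can be made concrete by counting operations in one layer. A dense pass multiplies every input by every weight, zeros included; an event-driven pass processes only the inputs that spiked, and because a spike is 1, each contribution is a plain addition rather than a multiply. The sketch below counts operations only; it does not model energy per operation, which varies by hardware and is not estimated here. The spike vector and weights are hypothetical.

```python
# Hypothetical layer: 4 inputs, 3 outputs; WEIGHTS[i][j] connects input i to output j.
WEIGHTS = [
    [0.5, -0.25, 1.0],
    [0.25, 0.75, -0.5],
    [1.0, 1.0, 1.0],
    [-1.0, 0.5, 0.25],
]
SPIKES = [0, 1, 0, 1]   # only inputs 1 and 3 fired this step


def dense_layer(spikes, weights):
    """Dense pass: multiply-accumulate every input with every weight."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i, s in enumerate(spikes):
        for j, w in enumerate(weights[i]):
            out[j] += s * w
            ops += 1
    return out, ops


def event_driven_layer(spikes, weights):
    """Event-driven pass: accumulate only the weight rows of inputs that spiked."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i, s in enumerate(spikes):
        if s:                      # silent inputs cost nothing
            for j, w in enumerate(weights[i]):
                out[j] += w        # spike value is 1, so no multiply is needed
                ops += 1
    return out, ops


dense_out, dense_ops = dense_layer(SPIKES, WEIGHTS)
event_out, event_ops = event_driven_layer(SPIKES, WEIGHTS)
print(dense_out == event_out, dense_ops, event_ops)   # True 12 6
```

With half the inputs active, the event-driven pass does half the work; at the much sparser activity levels typical of spiking workloads, the gap grows proportionally while the result stays identical.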


dowidth.com
