Retrieval Augmented Generation (RAG) connects a generative model to external knowledge sources. Before answering, it retrieves relevant documents and conditions its output on them, which can improve accuracy and relevance compared with traditional Generative Pre-trained Transformers (GPT) that rely solely on knowledge learned during training. GPT models such as GPT-4 excel at generating coherent, contextually relevant text from extensive pre-training, but without external data access they may lack information newer than their training data. Explore the differences and applications of RAG and GPT to understand their impact on advanced AI-driven text generation.

Why it is important

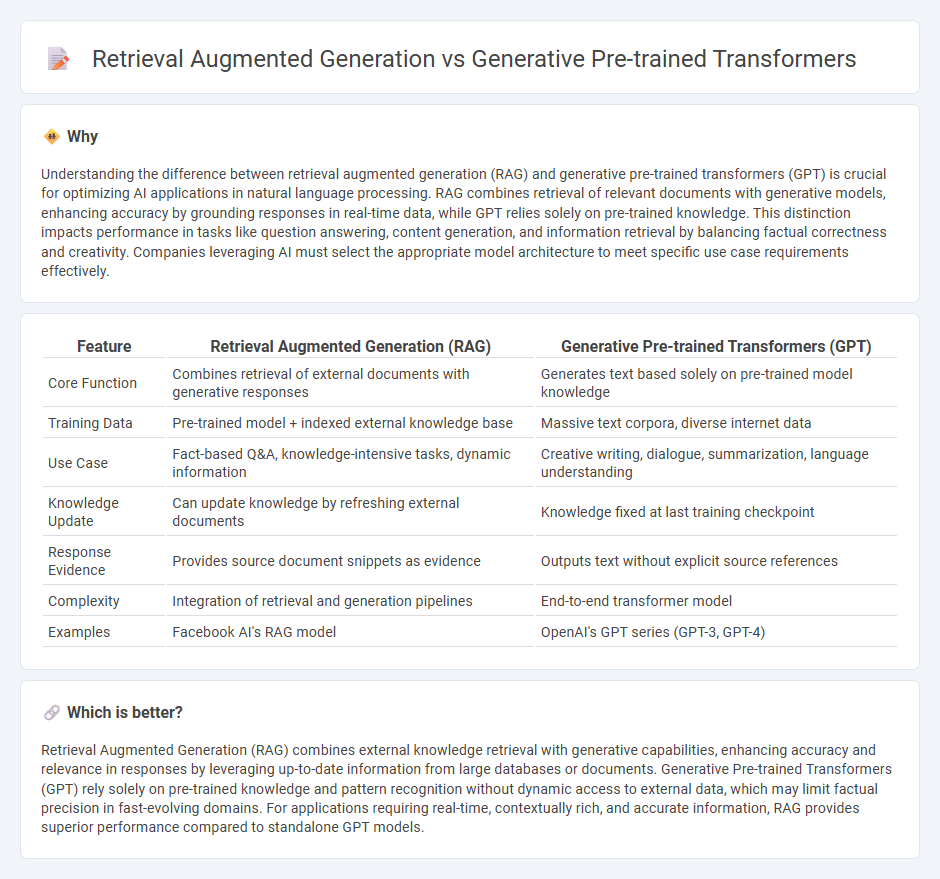

Understanding the difference between retrieval augmented generation (RAG) and generative pre-trained transformers (GPT) is crucial for designing AI applications in natural language processing. RAG combines retrieval of relevant documents with a generative model, grounding responses in a document collection that can be kept current, while GPT relies solely on knowledge stored in its pre-trained parameters. This distinction affects performance in tasks such as question answering, content generation, and information retrieval: retrieval favors factual correctness on knowledge-intensive questions, while a standalone model is often enough for open-ended, creative text. Companies using AI must select the architecture that meets the requirements of their specific use case.

Comparison Table

| Feature | Retrieval Augmented Generation (RAG) | Generative Pre-trained Transformers (GPT) |
|---|---|---|
| Core Function | Combines retrieval of external documents with generated responses | Generates text based solely on knowledge stored in the pre-trained model |
| Training Data | Pre-trained model + indexed external knowledge base | Massive text corpora, diverse internet data |
| Use Case | Fact-based Q&A, knowledge-intensive tasks, dynamic information | Creative writing, dialogue, summarization, language understanding |
| Knowledge Update | Knowledge can be updated by refreshing the external document index | Knowledge fixed at the last training checkpoint |
| Response Evidence | Can return the retrieved source passages as evidence | Outputs text without explicit source references |
| Complexity | Integrates separate retrieval and generation pipelines | Single end-to-end transformer model |
| Examples | Facebook AI's RAG model | OpenAI's GPT series (GPT-3, GPT-4) |

Which is better?

Retrieval Augmented Generation (RAG) combines external knowledge retrieval with generative capabilities, improving the accuracy and relevance of responses by drawing on up-to-date information from large databases or document collections. Generative Pre-trained Transformers (GPT) rely solely on pre-trained knowledge and pattern recognition, with no dynamic access to external data, which can limit factual precision in fast-evolving domains. For applications that require current, context-rich, and accurate information, RAG generally outperforms a standalone GPT model.

Connection

Retrieval Augmented Generation (RAG) integrates external knowledge retrieval with a generative transformer such as GPT, grounding the model's responses in relevant data sources. The generator supplies the language capabilities, while the retrieval mechanism fetches up-to-date or domain-specific information, improving accuracy and contextual relevance. Combining large-scale pre-trained models with dynamic information retrieval in this way lets a system answer questions about content the model never saw during training.
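
The connection can be written down using the RAG-Sequence formulation from the paper that introduced Facebook AI's RAG model, reproduced here as a sketch. A retriever $p_\eta(z \mid x)$ scores documents $z$ for an input $x$, and a generator $p_\theta$ produces the output tokens $y_1, \dots, y_N$ conditioned on both; the answer probability marginalizes over the top-$k$ retrieved documents. In that paper the generator is BART rather than a GPT model, so treating a GPT-style decoder as $p_\theta$ is an assumption made here to match this article's framing.

$$
p_{\text{RAG-Sequence}}(y \mid x) \approx \sum_{z \in \operatorname{top-}k\left(p_\eta(\cdot \mid x)\right)} p_\eta(z \mid x) \prod_{i=1}^{N} p_\theta\left(y_i \mid x, z, y_{1:i-1}\right),
\qquad
p_\eta(z \mid x) \propto \exp\left(\mathbf{d}(z)^\top \mathbf{q}(x)\right)
$$

Here $\mathbf{d}(z)$ and $\mathbf{q}(x)$ are the document and query encoders. Refreshing the knowledge base changes only which documents $z$ can be retrieved; the generator parameters $\theta$ stay the same, which is why RAG knowledge can be updated without retraining the generator.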

Key Terms

Pre-training

Generative Pre-trained Transformers (GPT) rely heavily on large-scale unsupervised pre-training on vast text datasets, learning to predict the next token in a sequence; this is what enables them to generate coherent text. Retrieval Augmented Generation (RAG) builds on such pre-trained language models and adds an external retrieval system, so training (typically fine-tuning) must also teach the model to make effective use of the retrieved information. Explore how advances in pre-training strategies affect the performance and adaptability of both GPT and RAG models in real-world applications.
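
As a sketch, the GPT pre-training objective is the standard causal language-modeling loss: given a token sequence $x_1, \dots, x_T$, the parameters $\theta$ are trained to minimize

$$
\mathcal{L}_{\text{LM}}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
$$

A RAG model starts from such pre-trained weights and is then fine-tuned on input/output pairs $(x_j, y_j)$ to minimize $-\sum_j \log p(y_j \mid x_j)$, where $p(y_j \mid x_j)$ is the probability of the target after marginalizing over the retrieved documents. In Facebook AI's original RAG model, only the query encoder and the generator were updated during this step; the document encoder and its index were kept fixed.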

Retrieval

Generative Pre-trained Transformers (GPT) primarily generate text from patterns learned during training, while Retrieval Augmented Generation (RAG) adds external knowledge through a document retrieval step. At inference time, a RAG system queries a database or corpus for passages relevant to the input and conditions its answer on them, which makes fact-based answers more reliable than those of a standalone GPT model. Explore how retrieval augmentation improves the accuracy of AI-driven content generation in advanced applications.
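
The retrieval step can be illustrated with a minimal Python sketch that ranks documents by cosine similarity to the query and keeps the top k. This is an assumption-laden stand-in: real RAG retrievers, including Facebook AI's, use dense neural embeddings and a nearest-neighbor index, whereas here plain word-count vectors are used so the example runs with the standard library alone. The helper names `embed`, `cosine`, and `retrieve` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a dense encoder: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Score every document against the query and return the k best (score, doc) pairs."""
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    corpus = [
        "The Eiffel Tower is located in Paris, France.",
        "GPT models are trained to predict the next token.",
        "Paris is the capital of France.",
    ]
    for score, doc in retrieve("Where is the Eiffel Tower?", corpus, k=1):
        print(f"{score:.2f}  {doc}")  # prints: 0.63  The Eiffel Tower is located in Paris, France.
```

Because the corpus is just a list, adding or replacing documents changes what `retrieve` returns without touching any generator, which mirrors the "Knowledge Update" row of the comparison table.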

Augmentation

Generative Pre-trained Transformers (GPT) generate text solely from what they learned from large datasets during pre-training, with no external data supplied at generation time. Retrieval Augmented Generation (RAG) augments this process by inserting documents returned by a retrieval system into the model's input, giving it relevant external information that improves accuracy and contextual relevance. Explore RAG methodologies to see how augmentation extends what generative models can do.
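
To make the augmentation step concrete, here is a minimal Python sketch, assuming the passages have already been retrieved and ranked best-first. `build_augmented_prompt` and `rag_answer` are hypothetical helper names, the prompt wording is an illustrative assumption rather than a required template, and `generate` stands for any callable that sends text to a GPT-style model; no particular library's API is implied.

```python
from typing import Callable


def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Prepend numbered retrieved passages to the question so the model can cite them."""
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below. "
        "Cite sources by their [number].\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


def rag_answer(question: str, passages: list[str], generate: Callable[[str], str]) -> str:
    """Augment the input with retrieved text, then pass it to the unchanged generator."""
    return generate(build_augmented_prompt(question, passages))


if __name__ == "__main__":
    retrieved = [
        "The Eiffel Tower is located in Paris, France.",
        "Paris is the capital of France.",
    ]
    # A real system would call a GPT-style model here; this stub just returns the prompt.
    print(rag_answer("Where is the Eiffel Tower?", retrieved, generate=lambda prompt: prompt))
```

The generator's weights are never modified; augmentation changes only its input. Numbering the passages is one simple way to let the model point back to its evidence, as in the "Response Evidence" row of the comparison table.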

dowidth.com
