Spatial computing blends digital content with the physical environment, using technologies such as augmented reality and 3D mapping to create immersive experiences. Computer vision interprets visual data from cameras and other sensors to identify objects, gestures, and actions, supporting applications such as facial recognition and autonomous vehicles. The sections below compare the two fields and show how they fit together.

Why it is important

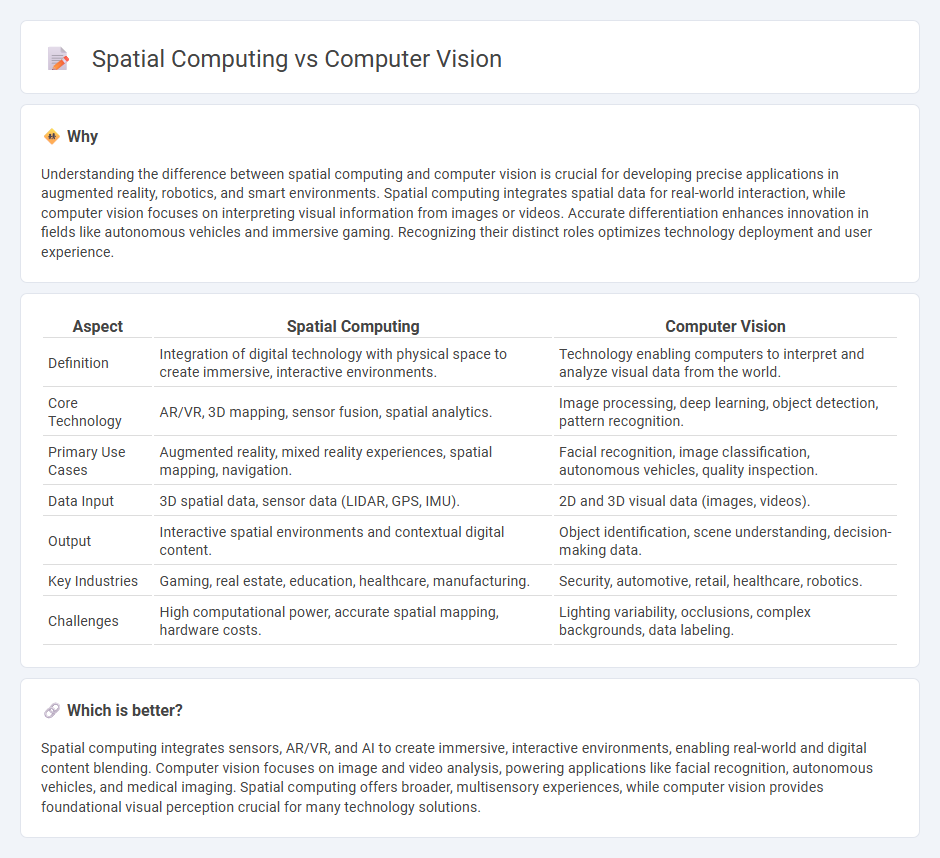

Understanding the difference between spatial computing and computer vision helps teams choose the right tools when building applications in augmented reality, robotics, and smart environments. Spatial computing uses spatial data to support interaction with the real world, while computer vision interprets visual information from images or video. Knowing which role each one plays makes it easier to deploy the right technology and improves the user experience in areas such as autonomous vehicles and immersive gaming.

Comparison Table

| Aspect | Spatial Computing | Computer Vision |
|---|---|---|
| Definition | Integration of digital technology with physical space to create immersive, interactive environments. | Technology enabling computers to interpret and analyze visual data from the world. |
| Core Technology | AR/VR, 3D mapping, sensor fusion, spatial analytics. | Image processing, deep learning, object detection, pattern recognition. |
| Primary Use Cases | Augmented reality, mixed reality experiences, spatial mapping, navigation. | Facial recognition, image classification, autonomous vehicles, quality inspection. |
| Data Input | 3D spatial data, sensor data (LiDAR, GPS, IMU). | 2D and 3D visual data (images, videos). |
| Output | Interactive spatial environments and contextual digital content. | Object identification, scene understanding, decision-making data. |
| Key Industries | Gaming, real estate, education, healthcare, manufacturing. | Security, automotive, retail, healthcare, robotics. |
| Challenges | High computational power, accurate spatial mapping, hardware costs. | Lighting variability, occlusions, complex backgrounds, data labeling. |

Which is better?

Neither is better in general, because the two work at different levels. Spatial computing combines sensors, AR/VR, and AI to blend digital content with the real world in immersive, interactive environments. Computer vision analyzes images and video, powering applications such as facial recognition, autonomous vehicles, and medical imaging. Spatial computing delivers the broader, multisensory experience, while computer vision supplies the visual perception that many of those experiences depend on.

Connection

Spatial computing relies on computer vision to interpret the environment, so that devices can understand and interact with physical spaces in real time. Computer vision processes visual input to identify objects and estimate depth and motion, providing the spatial information needed for applications such as augmented reality, robotics, and autonomous vehicles. Together, the two fields give immersive and intelligent systems accurate spatial awareness and richer contextual understanding.
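One concrete way computer vision supplies depth to a spatial system is stereo triangulation. The sketch below assumes a rectified stereo camera pair and uses the standard pinhole relation (depth = focal length × baseline / disparity). The function name and the numbers are illustrative only and are not taken from any particular device or library.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate depth in meters for one pixel of a rectified stereo pair.

    focal_px     -- focal length in pixels (assumed known from calibration)
    baseline_m   -- distance between the two cameras in meters
    disparity_px -- horizontal pixel shift of the same point between both images
    """
    if disparity_px <= 0:
        # Zero or negative disparity means no valid match (or a point at infinity).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Illustrative values: 800 px focal length, 12.5 cm baseline, 50 px disparity.
print(depth_from_disparity(800.0, 0.125, 50.0))  # 2.0
```

Nearby objects produce large disparities and distant objects produce small ones, which is why stereo depth estimates tend to get noisier with distance.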

Key Terms

Image Recognition

Image recognition in computer vision enables machines to identify and classify objects, patterns, and features within images, typically using deep learning. Spatial computing builds on this by adding awareness of 3D space, so that systems can understand where recognized objects sit in their environment and interact with them in context, for example in augmented reality and robotics.

3D Mapping

Computer vision enables accurate 3D mapping by interpreting visual data from cameras and sensors, supporting object recognition and spatial understanding. Spatial computing combines computer vision with augmented reality, sensor fusion, and cloud computing to create dynamic, interactive 3D maps in real time.
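A basic building block of 3D mapping is turning a depth image into a set of 3D points. The sketch below assumes a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and treats non-positive depth values as missing measurements. The function name and the tiny depth grid are hypothetical, and a real pipeline would also transform the points by the device pose and merge them across frames.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a depth image (meters) into camera-frame 3D points."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):        # v: pixel row (image y)
        for u, z in enumerate(row):        # u: pixel column (image x)
            if z <= 0:
                continue                   # assumption: <= 0 means "no measurement"
            x = (u - cx) * z / fx          # pinhole model, horizontal axis
            y = (v - cy) * z / fy          # pinhole model, vertical axis
            points.append((x, y, z))
    return points


# A 2x2 depth image with one missing pixel (illustrative values only).
tiny_depth = [[1.0, 0.0],
              [2.0, 2.0]]
cloud = depth_to_points(tiny_depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(len(cloud))  # 3
```

The resulting points are expressed in the camera's own coordinate frame, so placing them in a shared map still requires the camera's position and orientation.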

Sensor Fusion

Sensor fusion in computer vision combines data from multiple cameras and depth sensors to improve object detection and scene understanding. Spatial computing extends this approach by combining inputs from LiDAR, inertial measurement units (IMUs), and GPS with visual data to build accurate 3D maps and maintain real-time spatial awareness. This makes sensor fusion a foundation for both augmented reality and autonomous systems.
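As a minimal illustration of the idea, the sketch below fuses two independent distance estimates of the same point, say one from a camera and one from LiDAR, using inverse-variance weighting. This is one simple fusion technique chosen for illustration, not a description of any specific product. Production systems typically use more elaborate estimators such as Kalman filters, and the variances shown here are made-up values.

```python
from typing import Tuple


def fuse_estimates(
    value_a: float, var_a: float, value_b: float, var_b: float
) -> Tuple[float, float]:
    """Fuse two independent measurements by inverse-variance weighting.

    Returns (fused_value, fused_variance). The less noisy sensor gets
    the larger weight.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_var = 1.0 / (weight_a + weight_b)
    fused_value = (weight_a * value_a + weight_b * value_b) * fused_var
    return fused_value, fused_var


# Hypothetical readings: camera depth 2.0 m (variance 0.04),
# LiDAR range 2.2 m (variance 0.01).
distance, variance = fuse_estimates(2.0, 0.04, 2.2, 0.01)
print(distance, variance)
```

With these numbers the fused distance lands at roughly 2.16 m, closer to the LiDAR reading, and the fused variance is smaller than that of either sensor alone.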
