Differentiable programming uses gradient-based optimization to train programs built from differentiable components, most commonly neural networks, for tasks such as image recognition and natural language processing. Genetic programming mimics evolutionary processes to generate programs automatically, without hand-coding the solution, and is well suited to structural optimization and symbolic regression. Both paradigms shape how artificial intelligence systems are built and how optimization problems are approached.

Why it is important

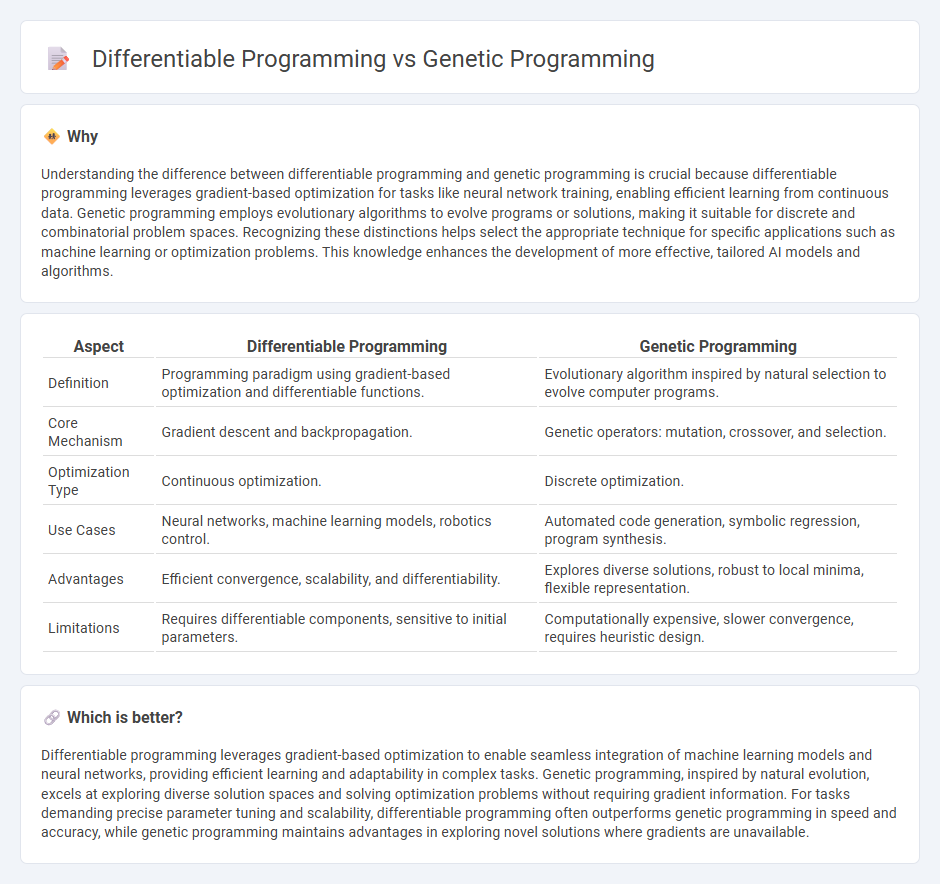

The difference matters when choosing a technique. Differentiable programming relies on gradient-based optimization, as in neural network training, so it learns efficiently when the model and its loss are differentiable with respect to continuous parameters. Genetic programming uses evolutionary algorithms to evolve programs or solutions, which makes it suitable for discrete and combinatorial search spaces. Recognizing these distinctions helps select the appropriate technique for a given machine learning or optimization problem and leads to more effective, tailored models and algorithms.

Comparison Table

| Aspect | Differentiable Programming | Genetic Programming |
|---|---|---|
| Definition | Programming paradigm using gradient-based optimization and differentiable functions. | Evolutionary algorithm inspired by natural selection to evolve computer programs. |
| Core Mechanism | Gradient descent and backpropagation. | Genetic operators: mutation, crossover, and selection. |
| Optimization Type | Continuous optimization. | Discrete optimization. |
| Use Cases | Neural networks, machine learning models, robotics control. | Automated code generation, symbolic regression, program synthesis. |
| Advantages | Efficient convergence, scalability, and differentiability. | Explores diverse solutions, robust to local minima, flexible representation. |
| Limitations | Requires differentiable components, sensitive to initial parameters. | Computationally expensive, slower convergence, requires heuristic design. |

Which is better?

Neither is better in every setting. Differentiable programming uses gradient-based optimization, which lets machine learning models and neural networks be trained efficiently as parts of larger programs. Genetic programming, inspired by natural evolution, explores diverse solution spaces and solves optimization problems without requiring gradient information. For tasks that demand precise parameter tuning and scalability, differentiable programming often outperforms genetic programming in speed and accuracy, while genetic programming keeps its advantage where gradients are unavailable or novel program structures must be discovered.
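The case where gradients are unavailable can be made concrete with a small sketch. The objective below is a hypothetical piecewise-constant function chosen for illustration, so its derivative is zero wherever it exists. The gradient-free side is a simple mutate-and-select search (an evolution-strategy-style loop, not full genetic programming), and the step size, seed, and iteration counts are arbitrary assumptions.

```python
import math
import random

def loss(x):
    """Piecewise-constant objective: the derivative is zero wherever it exists."""
    return abs(math.floor(x) - 3)

def numeric_gradient(f, x, h=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + h) - f(x - h)) / (2 * h)

def gradient_descent(f, x, lr=0.1, steps=500):
    """Plain gradient descent; it cannot move when the gradient is zero."""
    for _ in range(steps):
        x -= lr * numeric_gradient(f, x)
    return x

def mutation_search(f, x, sigma=1.0, steps=500, seed=0):
    """Mutate with Gaussian noise and keep the candidate if it is no worse."""
    rng = random.Random(seed)
    for _ in range(steps):
        candidate = x + rng.gauss(0.0, sigma)
        if f(candidate) <= f(x):
            x = candidate
    return x

x_gd = gradient_descent(loss, 0.5)
x_es = mutation_search(loss, 0.5)
print("gradient descent:", x_gd, "loss", loss(x_gd))
print("mutation search: ", x_es, "loss", loss(x_es))
```

Gradient descent never leaves its starting point here because every gradient estimate is zero, while the mutation-based search only needs to compare objective values. On a smooth objective the roles would typically reverse, with the gradient giving a far more efficient search direction.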

Connection

Differentiable programming integrates gradient-based optimization techniques into programming frameworks, enabling efficient learning and adaptation in complex models. Genetic programming simulates evolutionary processes by evolving computer programs through selection, mutation, and crossover, fostering innovation in problem-solving. Both approaches connect through their shared goal of optimizing program behavior, with differentiable programming providing precise gradient feedback and genetic programming exploring broader solution landscapes without explicit gradients.

Key Terms

Evolutionary Algorithms

Genetic programming uses evolutionary algorithms to evolve computer programs by mimicking natural selection, typically representing each program as a tree that is modified over generations. Differentiable programming instead applies gradient-based optimization, tuning continuous parameters rather than making the discrete structural changes typical of genetic programming.
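As a rough illustration of tree-structured programs adapting over generations, the following is a minimal sketch of symbolic regression. Everything in it is an assumption made for the example: the nested-tuple tree encoding, the function set, the target `x*x + x`, the population size, the mutation rate, and the node limit used to curb tree growth.

```python
import operator
import random

# Function set (binary operators). Terminals are the variable "x" or a float constant.
OPS = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}
MAX_NODES = 40  # assumed size limit to keep trees from growing without bound

def random_tree(rng, depth=3):
    """Grow a random expression tree encoded as nested tuples."""
    if depth == 0 or rng.random() < 0.3:
        return "x" if rng.random() < 0.5 else float(rng.randint(-2, 2))
    op = rng.choice(sorted(OPS))
    return (op, random_tree(rng, depth - 1), random_tree(rng, depth - 1))

def evaluate(tree, x):
    """Evaluate an expression tree at the point x."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return OPS[op](evaluate(left, x), evaluate(right, x))
    return x if tree == "x" else tree

def fitness(tree, samples):
    """Sum of squared errors over the samples (lower is better)."""
    return sum((evaluate(tree, x) - y) ** 2 for x, y in samples)

def nodes(tree, path=()):
    """Yield (path, subtree) for every node in the tree."""
    yield path, tree
    if isinstance(tree, tuple):
        yield from nodes(tree[1], path + (1,))
        yield from nodes(tree[2], path + (2,))

def replace_at(tree, path, new):
    """Return a copy of tree with the subtree at path replaced by new."""
    if not path:
        return new
    parts = list(tree)
    parts[path[0]] = replace_at(tree[path[0]], path[1:], new)
    return tuple(parts)

def mutate(rng, tree):
    """Replace a randomly chosen subtree with a freshly grown one."""
    path, _ = rng.choice(list(nodes(tree)))
    return replace_at(tree, path, random_tree(rng, depth=2))

def crossover(rng, a, b):
    """Graft a random subtree of b onto a random position in a."""
    path, _ = rng.choice(list(nodes(a)))
    _, donor = rng.choice(list(nodes(b)))
    return replace_at(a, path, donor)

def tournament(rng, population, samples, k=3):
    """Select the fittest of k randomly drawn individuals."""
    return min(rng.sample(population, k), key=lambda t: fitness(t, samples))

def evolve(samples, generations=30, pop_size=60, seed=0):
    rng = random.Random(seed)
    population = [random_tree(rng) for _ in range(pop_size)]
    history = []  # best fitness at the start of each generation
    for _ in range(generations):
        best = min(population, key=lambda t: fitness(t, samples))
        history.append(fitness(best, samples))
        children = [best]  # elitism: the current best always survives
        while len(children) < pop_size:
            parent = tournament(rng, population, samples)
            child = crossover(rng, parent, tournament(rng, population, samples))
            if rng.random() < 0.2:
                child = mutate(rng, child)
            if sum(1 for _ in nodes(child)) > MAX_NODES:
                child = parent  # reject oversized offspring
            children.append(child)
        population = children
    return min(population, key=lambda t: fitness(t, samples)), history

SAMPLES = [(x, x * x + x) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
best, history = evolve(SAMPLES)
print("best tree:", best)
print("error:", fitness(best, SAMPLES))
```

Note that the search changes the structure of the program itself, which is the discrete kind of change that gradient-based tuning of continuous parameters does not make. Because the best individual is always carried over, the recorded best error never increases from one generation to the next, although the sketch gives no guarantee of finding an exact fit.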

Gradient Descent

Gradient descent is central to differentiable programming: it tunes parameters by repeatedly stepping against the gradient of a loss function, and backpropagation supplies those gradients efficiently in neural networks. Genetic programming evolves solutions through selection-inspired search and does not need gradient information.
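A minimal sketch of gradient descent, assuming a one-variable linear model fitted with mean squared error. The data, learning rate, and step count are illustrative choices, and the gradients are written out by hand instead of being produced by backpropagation.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradients(w, b, xs, ys):
    """Partial derivatives of the mean squared error with respect to w and b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db

def fit(xs, ys, lr=0.05, steps=2000):
    """Repeatedly step against the gradient, recording the loss before each step."""
    w, b = 0.0, 0.0
    losses = []
    for _ in range(steps):
        losses.append(mse(w, b, xs, ys))
        dw, db = gradients(w, b, xs, ys)
        w -= lr * dw
        b -= lr * db
    return w, b, losses

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]  # noise-free data generated from y = 2x + 1
w, b, losses = fit(xs, ys)
print("w:", w, "b:", b, "final loss:", losses[-1])
```

Each update uses the slope of the loss to decide both the direction and the size of the change, which is why this approach needs a differentiable loss and a suitably small learning rate.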

Automatic Differentiation

Differentiable programming relies on gradients computed through Automatic Differentiation (AD), which applies the chain rule to the operations a program executes and yields derivatives that are exact up to floating-point error. These gradients are essential for training neural networks. Genetic programming does not inherently use AD, since it optimizes through mutation and selection, a stochastic search, rather than gradient descent.
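One way to see how AD works is a small forward-mode sketch built on dual numbers. This is an assumption made for brevity: neural network training normally uses reverse mode (backpropagation), and the `Dual` class, `sin` wrapper, and `derivative` helper below are hypothetical names, not part of any library.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative with respect to the input."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__

def lift(x):
    """Treat plain numbers as constants, whose derivative is zero."""
    return x if isinstance(x, Dual) else Dual(float(x))

def sin(x):
    """Sine with the chain rule applied: (sin u)' = cos(u) * u'."""
    x = lift(x)
    return Dual(math.sin(x.value), math.cos(x.value) * x.deriv)

def derivative(f, x):
    """Evaluate f at x with a seed derivative of 1 and read off df/dx."""
    return f(Dual(x, 1.0)).deriv

def f(x):
    return x * x * x + 2 * x + sin(x)

x0 = 1.5
print("AD derivative:      ", derivative(f, x0))
print("analytic derivative:", 3 * x0 ** 2 + 2 + math.cos(x0))
```

The derivative is propagated alongside the value through every operation, so no symbolic expression is built and no finite-difference step size has to be chosen.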


dowidth.com
