Edge AI processes data locally on devices such as smartphones, sensors, and IoT gadgets, which reduces latency and enables real-time decisions. Distributed AI spreads work across multiple interconnected systems that collaborate over a network on complex tasks, which enables scalability and resource sharing across nodes. This article compares the two approaches, their key differences, and where each is applied.

Why it is important

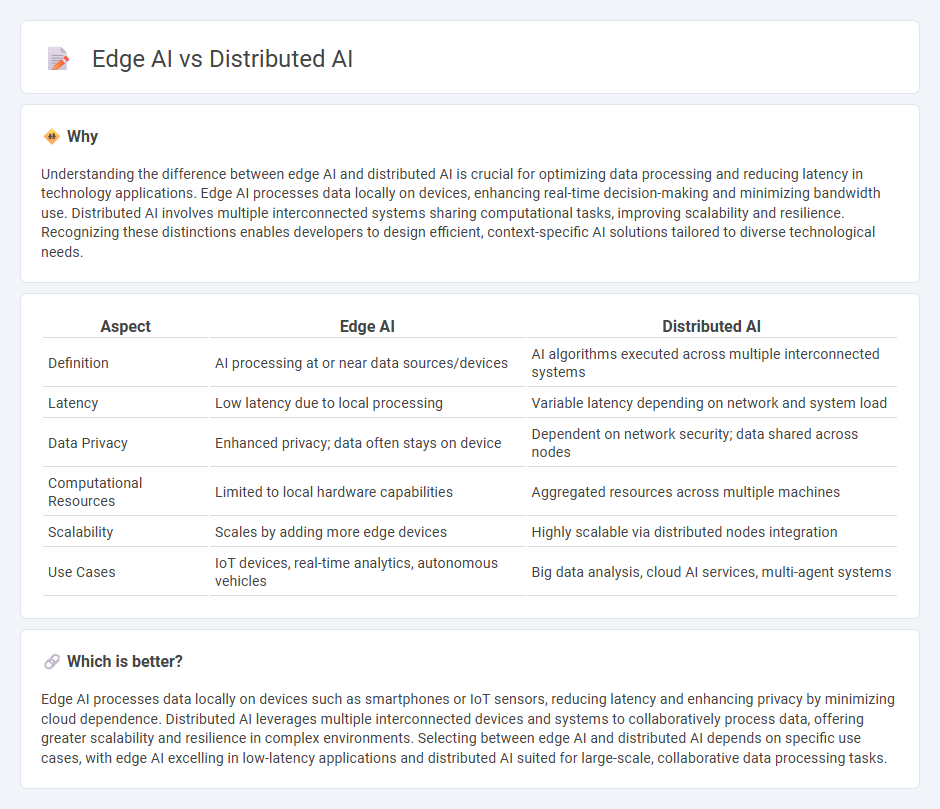

Knowing the difference between edge AI and distributed AI helps you decide where AI computation should run, and that decision determines latency and how much data crosses the network. Edge AI processes data locally on devices, enabling real-time decisions and minimizing bandwidth use. Distributed AI shares computational tasks across multiple interconnected systems, improving scalability and resilience. Recognizing these distinctions lets developers design AI solutions that fit the constraints of each application.

Comparison Table

| Aspect | Edge AI | Distributed AI |
|---|---|---|
| Definition | AI processing at or near the data source (on or close to the device) | AI algorithms executed across multiple interconnected systems |
| Latency | Low, because processing is local | Variable, depending on network conditions and system load |
| Data privacy | Stronger by default; data often stays on the device | Depends on network security; data is shared across nodes |
| Computational resources | Limited to local hardware | Pooled across multiple machines |
| Scalability | Scales by adding more edge devices | Highly scalable by adding nodes to the system |
| Use cases | IoT devices, real-time analytics, autonomous vehicles | Big data analysis, cloud AI services, multi-agent systems |

Which is better?

Neither approach is better in every case. Edge AI processes data locally on devices such as smartphones or IoT sensors, reducing latency and improving privacy by reducing dependence on the cloud. Distributed AI uses multiple interconnected devices and systems to process data collaboratively, offering greater scalability and resilience in complex environments. The choice depends on the use case: edge AI excels in low-latency applications, while distributed AI suits large-scale, collaborative data processing.

Connection

Edge AI processes data locally on devices, minimizing latency and enabling real-time decisions, while distributed AI spreads AI workloads across multiple nodes or systems. Both rely on decentralized computing to improve scalability, efficiency, and resilience. The two also combine well: when edge devices act as nodes within a distributed AI framework, they handle time-sensitive processing locally while centralized servers take on heavier workloads, so data processing and resources are allocated where each fits best.
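One common way to split work between a device and a server is edge-first inference with escalation: a small on-device model answers when it is confident and hands uncertain inputs to a larger server-side model. The minimal Python sketch below assumes that pattern; `hybrid_infer`, the two toy models, and the 0.8 confidence threshold are hypothetical placeholders, not part of any particular framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A "model" here is any callable returning (label, confidence in [0, 1]).
Model = Callable[[List[float]], Tuple[str, float]]


@dataclass
class Prediction:
    label: str
    confidence: float
    source: str  # "edge" if answered on-device, "server" if escalated


def hybrid_infer(x: List[float], edge_model: Model, server_model: Model,
                 threshold: float = 0.8) -> Prediction:
    """Try the on-device model first; escalate to the server only when unsure.

    The threshold is a hypothetical tuning knob: raising it sends more
    traffic to the server, lowering it keeps more decisions local.
    """
    label, conf = edge_model(x)
    if conf >= threshold:
        return Prediction(label, conf, "edge")
    # Low confidence: pay the network round trip for a stronger model.
    label, conf = server_model(x)
    return Prediction(label, conf, "server")


# Hypothetical toy models standing in for real edge and server models.
def tiny_edge_model(x: List[float]) -> Tuple[str, float]:
    s = sum(x)
    return ("positive" if s > 0 else "negative", min(1.0, abs(s)))


def big_server_model(x: List[float]) -> Tuple[str, float]:
    s = sum(x)
    return ("positive" if s > 0 else "negative", 0.99)


if __name__ == "__main__":
    print(hybrid_infer([0.6, 0.5], tiny_edge_model, big_server_model))   # answered on the edge
    print(hybrid_infer([0.1, -0.05], tiny_edge_model, big_server_model))  # escalated to the server
```

In a real deployment the server call would be a network request, so the escalation rate directly trades accuracy against latency and bandwidth.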

Key Terms

Decentralization

Distributed AI spreads data processing across multiple nodes, improving scalability and fault tolerance because no single machine carries the whole workload. Edge AI processes data locally on devices such as sensors or smartphones, reducing latency and bandwidth use by making decisions in real time at the source. In both cases, how far computation is decentralized affects system efficiency and privacy.
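The fault-tolerance benefit of spreading work across nodes can be illustrated with a small scheduling sketch in Python. It assumes a simple round-robin assignment and a coordinator that already knows when a node has failed; `assign_shards` and `fail_over` are hypothetical helpers, and real systems typically add health checks, replication, and load-aware placement.

```python
from typing import Dict, List


def assign_shards(tasks: List[str], nodes: List[str]) -> Dict[str, List[str]]:
    """Round-robin tasks across nodes so no single machine holds the whole workload."""
    if not nodes:
        raise ValueError("need at least one node")
    plan: Dict[str, List[str]] = {node: [] for node in nodes}
    for i, task in enumerate(tasks):
        plan[nodes[i % len(nodes)]].append(task)
    return plan


def fail_over(plan: Dict[str, List[str]], failed: str) -> Dict[str, List[str]]:
    """Return a new plan with the failed node's tasks spread over the survivors."""
    survivors = [node for node in plan if node != failed]
    if not survivors:
        raise RuntimeError("no surviving nodes to take over the work")
    new_plan = {node: list(plan[node]) for node in survivors}
    for i, task in enumerate(plan.get(failed, [])):
        new_plan[survivors[i % len(survivors)]].append(task)
    return new_plan


if __name__ == "__main__":
    plan = assign_shards([f"t{i}" for i in range(6)], ["a", "b", "c"])
    print(plan)                  # {'a': ['t0', 't3'], 'b': ['t1', 't4'], 'c': ['t2', 't5']}
    print(fail_over(plan, "b"))  # {'a': ['t0', 't3', 't1'], 'c': ['t2', 't5', 't4']}
```

Losing node `b` costs capacity but no work: its tasks move to the surviving nodes instead of stalling the whole job.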

Latency

Distributed AI processes data across multiple networked devices, which can add latency from data transmission but allows for scalability and resource pooling. Edge AI runs AI algorithms locally on devices near the data source, significantly reducing latency and enabling the real-time decisions that applications such as autonomous vehicles and smart sensors require. Latency requirements are therefore often a deciding factor when choosing between the two deployment models.
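The latency trade-off can be made concrete with a back-of-the-envelope model: on-device inference pays only local compute time, while offloading pays network round-trip time, upload time for the input, and remote compute time. The Python sketch below uses that simplified model; the function names and all numbers (a 200 KB camera frame, a 10 Mbit/s uplink, 40 ms round trip, 10 ms of server inference versus 60 ms on a slower device, a 100 ms deadline) are illustrative assumptions, not measurements.

```python
def edge_latency_ms(local_infer_ms: float) -> float:
    """On-device inference: no network hop, only (slower) local compute."""
    return local_infer_ms


def remote_latency_ms(payload_bytes: int, uplink_mbps: float,
                      rtt_ms: float, remote_infer_ms: float) -> float:
    """Offloaded inference: round trip + time to upload the input + server compute.

    Simplification: the response is assumed small, so download time is ignored.
    """
    transfer_ms = payload_bytes * 8 * 1000 / (uplink_mbps * 1_000_000)
    return rtt_ms + transfer_ms + remote_infer_ms


if __name__ == "__main__":
    budget_ms = 100.0  # hypothetical deadline, e.g. for a control loop
    edge = edge_latency_ms(60.0)
    remote = remote_latency_ms(200_000, 10.0, 40.0, 10.0)
    print(f"edge: {edge:.0f} ms, remote: {remote:.0f} ms")  # edge: 60 ms, remote: 210 ms
    print("edge meets budget:", edge <= budget_ms)          # True
    print("remote meets budget:", remote <= budget_ms)      # False
```

With a smaller payload or a faster uplink the remote path narrows the gap because server compute is faster, which is why the right choice depends on the workload and network rather than being fixed.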

Data locality

Distributed AI can improve data locality by processing information on interconnected nodes close to where it is generated, reducing latency and bandwidth use compared with routing everything through a single centralized cloud. Edge AI goes further, processing data directly at or near the source on devices such as sensors or smartphones, which speeds up decisions and improves privacy. Keeping computation close to the data is therefore a central consideration when designing AI infrastructure.
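A simple illustration of data locality is a sensor that summarizes its readings on-device and uploads only the summary instead of the raw stream. The Python sketch below assumes JSON as the wire format and uses made-up temperature readings; `summarize_locally` and `payload_bytes` are hypothetical helpers, and the byte counts only indicate the order of the savings.

```python
import json
import statistics
from typing import Dict, List, Union


def summarize_locally(readings: List[float]) -> Dict[str, Union[int, float]]:
    """Reduce raw readings to a compact summary on the device itself."""
    if not readings:
        raise ValueError("no readings to summarize")
    return {
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "min": min(readings),
        "max": max(readings),
    }


def payload_bytes(obj: object) -> int:
    """Size of the object serialized as UTF-8 JSON, i.e. what would be sent."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    # Made-up readings: 1,000 temperature samples collected on one sensor.
    readings = [20.0 + 0.01 * i for i in range(1000)]
    raw = payload_bytes(readings)
    summary = payload_bytes(summarize_locally(readings))
    print(f"raw upload: {raw} bytes, summary upload: {summary} bytes")
```

The cost of this design is that the server can no longer inspect individual readings; the same property is what keeps raw data on the device.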

dowidth.com