Differential privacy offers a mathematically rigorous approach to protecting individual data by adding carefully calibrated noise, ensuring privacy while maintaining data utility. Data perturbation involves modifying original data through techniques like noise addition or data swapping to reduce re-identification risks but may compromise accuracy. Explore the nuances and applications of these privacy-preserving methods to understand their impact on secure data analysis.

Why it is important

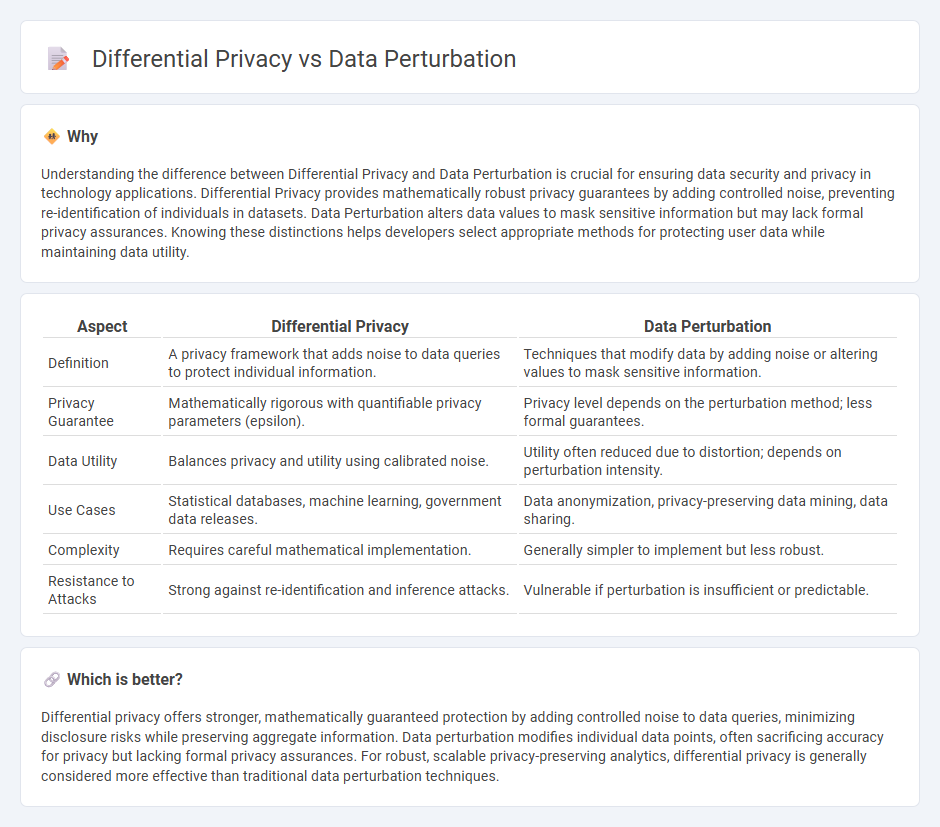

Understanding the difference between Differential Privacy and Data Perturbation is crucial for ensuring data security and privacy in technology applications. Differential Privacy provides mathematically robust privacy guarantees by adding controlled noise, preventing re-identification of individuals in datasets. Data Perturbation alters data values to mask sensitive information but may lack formal privacy assurances. Knowing these distinctions helps developers select appropriate methods for protecting user data while maintaining data utility.

Comparison Table

| Aspect | Differential Privacy | Data Perturbation |

|---|---|---|

| Definition | A privacy framework that adds noise to data queries to protect individual information. | Techniques that modify data by adding noise or altering values to mask sensitive information. |

| Privacy Guarantee | Mathematically rigorous with quantifiable privacy parameters (epsilon). | Privacy level depends on the perturbation method; less formal guarantees. |

| Data Utility | Balances privacy and utility using calibrated noise. | Utility often reduced due to distortion; depends on perturbation intensity. |

| Use Cases | Statistical databases, machine learning, government data releases. | Data anonymization, privacy-preserving data mining, data sharing. |

| Complexity | Requires careful mathematical implementation. | Generally simpler to implement but less robust. |

| Resistance to Attacks | Strong against re-identification and inference attacks. | Vulnerable if perturbation is insufficient or predictable. |

Which is better?

Differential privacy offers stronger, mathematically guaranteed protection by adding controlled noise to data queries, minimizing disclosure risks while preserving aggregate information. Data perturbation modifies individual data points, often sacrificing accuracy for privacy but lacking formal privacy assurances. For robust, scalable privacy-preserving analytics, differential privacy is generally considered more effective than traditional data perturbation techniques.

Connection

Differential privacy and data perturbation are connected through their shared goal of protecting individual privacy in datasets by introducing controlled noise. Differential privacy provides a formal mathematical guarantee that any single data point has a limited impact on the overall output, often achieved by perturbing the data or query results. This noise addition, or data perturbation, obscures specific values while preserving aggregate patterns, ensuring secure data analysis without compromising confidentiality.

Key Terms

Noise addition

Data perturbation involves modifying original data by adding noise to protect individual privacy, often sacrificing some accuracy for greater anonymity. Differential privacy rigorously quantifies privacy loss by ensuring that the probability of any output is nearly the same whether or not any single individual's data is included, typically using calibrated noise addition like Laplace or Gaussian mechanisms. Explore how nuanced noise calibration in differential privacy outperforms conventional data perturbation methods for robust privacy guarantees.

Privacy budget

Data perturbation techniques modify datasets by adding noise to obscure individual entries, providing a flexible level of privacy protection without strict quantification of privacy loss. Differential privacy introduces a formal privacy budget, epsilon, to mathematically bound the privacy loss, enabling precise measurement and control over data exposure risks. Explore how managing the privacy budget impacts data utility and security in various applications.

Randomization

Data perturbation leverages randomization techniques by adding noise or altering data values to mask sensitive information, ensuring individual data points are less identifiable. Differential privacy implements a rigorous mathematical framework that uses controlled random noise to provide quantifiable privacy guarantees, balancing data utility and privacy risk. Explore more on how randomization underpins privacy-enhancing technologies.

Source and External Links

Data perturbation definition - Glossary - NordVPN - Data perturbation is the intentional modification of sensitive information in a dataset by adding controlled noise, to protect privacy while keeping data analytically valuable, commonly used with differential privacy frameworks, employing methods like adding random noise, shuffling categorical data, or value swapping.

What is Data Perturbation? -- Definition by Techslang - Data perturbation protects sensitive information by adding noise through either the probability distribution approach, replacing data with samples from the same distribution, or the value distortion approach, which adds multiple noises randomly to database elements.

Data Perturbation - Glossary - DevX - Data perturbation is a privacy-preserving technique that introduces small purposeful modifications or noise in sensitive datasets, preserving data utility while preventing identification, with common methods including rotation and translation perturbation, widely used in healthcare, finance, and genomics.

dowidth.com

dowidth.com