Diffusion models generate data by gradually adding noise to training examples and learning to reverse that corruption step by step, which supports robust generation and transformation in machine learning tasks. Deep Belief Networks use layered probabilistic representations to capture complex data distributions through unsupervised learning. The sections below compare the mechanisms and applications of the two approaches and their roles in artificial intelligence.

Why it is important

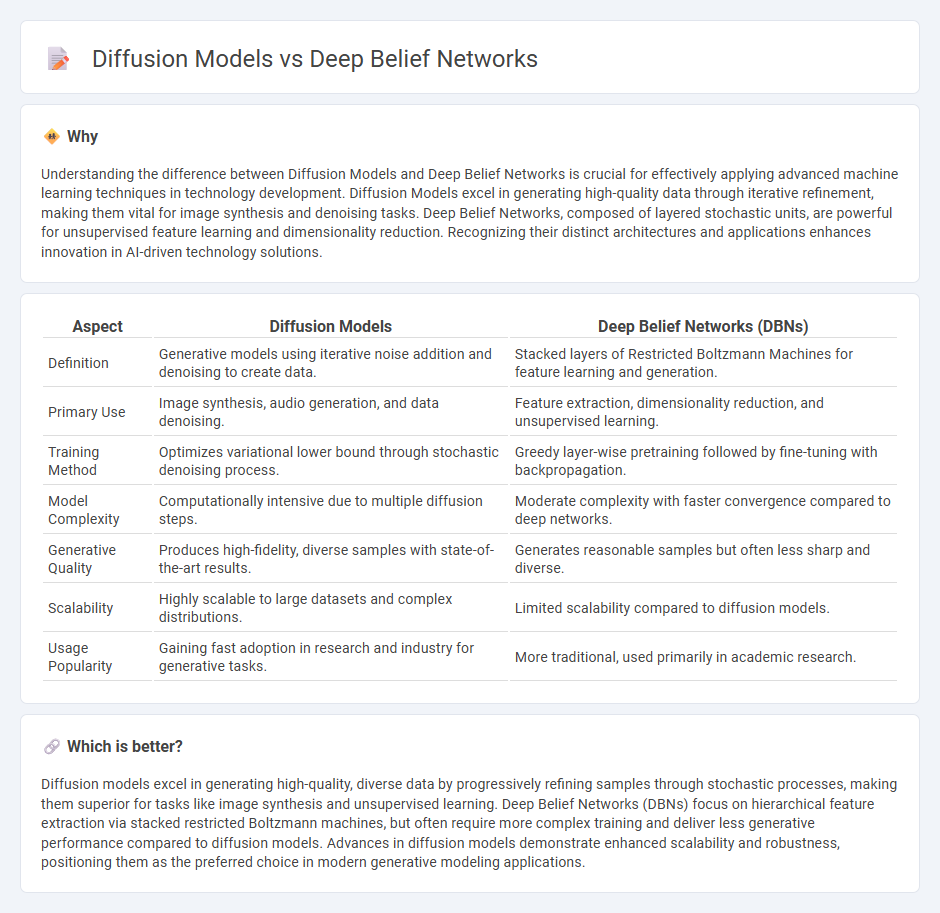

Understanding the difference between Diffusion Models and Deep Belief Networks helps in choosing the right technique for a given machine learning problem. Diffusion Models excel at generating high-quality data through iterative refinement, which makes them well suited to image synthesis and denoising tasks. Deep Belief Networks, composed of layers of stochastic units, are used for unsupervised feature learning and dimensionality reduction. The two differ in both architecture and typical application, so they are rarely interchangeable.

Comparison Table

| Aspect | Diffusion Models | Deep Belief Networks (DBNs) |
|---|---|---|
| Definition | Generative models that add noise to data step by step and learn to denoise it to create new data. | Stacked layers of Restricted Boltzmann Machines (RBMs) for feature learning and generation. |
| Primary Use | Image synthesis, audio generation, and data denoising. | Feature extraction, dimensionality reduction, and unsupervised learning. |
| Training Method | Optimizes a variational lower bound on the data likelihood, typically through a denoising objective. | Greedy layer-wise pretraining followed by fine-tuning with backpropagation. |
| Model Complexity | Computationally intensive because sampling requires many denoising steps. | Moderate complexity; layer-wise pretraining can speed up convergence of the deep network. |
| Generative Quality | Produces high-fidelity, diverse samples with state-of-the-art results. | Generates reasonable samples, but often less sharp and less diverse. |
| Scalability | Highly scalable to large datasets and complex distributions. | Limited scalability compared to diffusion models. |
| Usage Popularity | Rapidly being adopted in research and industry for generative tasks. | More traditional; used primarily in academic research. |

Which is better?

Diffusion models excel at generating high-quality, diverse data by progressively refining samples through a stochastic denoising process, which makes them the stronger choice for generative tasks like image synthesis. Deep Belief Networks (DBNs) focus on hierarchical feature extraction via stacked Restricted Boltzmann Machines, but they often require a more involved training procedure (layer-wise pretraining plus fine-tuning) and produce lower-quality samples than diffusion models. Diffusion models also scale better and are more robust, which positions them as the preferred choice in modern generative modeling applications, while DBNs remain relevant mainly for unsupervised feature learning.

Connection

Diffusion models and Deep Belief Networks (DBNs) are connected through their shared foundation in probabilistic latent-variable modeling and unsupervised learning. Both build a generative model from a sequence of stochastic stages that together capture a complex, high-dimensional data distribution: a DBN stacks layers of latent units, while a diffusion model chains many noising and denoising time steps. Diffusion models are not an extension of DBNs, however. They model how data is transformed over a sequence of time steps rather than through stacked RBM layers, which improves generative capabilities in applications like image synthesis and signal processing.

Key Terms

Layer-wise Pretraining (Deep Belief Networks)

Layer-wise pretraining in Deep Belief Networks (DBNs) trains one layer at a time, each as an unsupervised Restricted Boltzmann Machine whose hidden activations become the input to the next layer. This improves feature extraction and stabilizes the training of deep architectures. Diffusion models, in contrast, do not rely on layer-wise pretraining; they generate data through a step-by-step denoising process, which makes them effective in generative tasks but structurally different from DBNs.
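The greedy, layer-by-layer idea can be illustrated with a minimal NumPy sketch. It is not a reference implementation: the `RBM` class, the `pretrain_dbn` helper, the toy binary data, the learning rate, and the use of one-step contrastive divergence (CD-1) are all assumptions chosen for illustration, and the supervised fine-tuning stage is left out.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class RBM:
    """Hypothetical minimal binary RBM trained with CD-1 (illustrative only)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)
        self.b_h = np.zeros(n_hidden)
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_update(self, v0, lr=0.1):
        # Positive phase: hidden activations driven by the data
        h0 = self.hidden_probs(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        # Negative phase: one Gibbs step back to the visible layer and up again
        v1 = self.visible_probs(h0_sample)
        h1 = self.hidden_probs(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_v += lr * (v0 - v1).mean(axis=0)
        self.b_h += lr * (h0 - h1).mean(axis=0)

def pretrain_dbn(data, layer_sizes, epochs=50, seed=0):
    """Train one RBM per layer; each layer's output feeds the next layer."""
    rng = np.random.default_rng(seed)
    rbms, layer_input = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(layer_input.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_update(layer_input)
        rbms.append(rbm)
        # Lower layers stay fixed; their features become the next training set
        layer_input = rbm.hidden_probs(layer_input)
    return rbms, layer_input

# Toy binary data: noisy-free copies of two prototype patterns (assumed example)
data_rng = np.random.default_rng(0)
prototypes = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
data = prototypes[data_rng.integers(0, 2, size=200)]

rbms, features = pretrain_dbn(data, layer_sizes=[4, 2])
print([rbm.W.shape for rbm in rbms], features.shape)
```

Each RBM only ever sees the output of the layer below it, which is what makes the procedure greedy. In a full DBN workflow the stacked weights would then typically be fine-tuned with backpropagation.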

Markov Process (Diffusion Models)

Diffusion models use a Markov process: a forward chain of stochastic transitions gradually adds noise to the data, with each state depending only on the previous one, and a learned reverse chain removes that noise step by step. This gradual denoising mechanism is what lets them model complex data distributions. Deep Belief Networks, in contrast, rely on layered Restricted Boltzmann Machines to capture hierarchical representations; their generative model is not defined as a chain over time steps, although RBM training and sampling commonly use Gibbs sampling, which is itself a Markov chain.
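A small NumPy sketch can make the forward Markov chain concrete. The linear noise schedule, the number of steps, the function names, and the toy scalar data point below are assumptions for illustration, not details taken from this article. The sketch applies the Gaussian transitions one at a time and compares the result with the closed-form marginal that follows from composing them.

```python
import numpy as np

def make_schedule(num_steps=100, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumed, commonly used choice)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alpha_bars = np.cumprod(1.0 - betas)  # running product of (1 - beta_s)
    return betas, alpha_bars

def forward_step(x_prev, beta_t, rng):
    """One Markov transition: x_t is sampled using only x_{t-1}."""
    noise = rng.standard_normal(x_prev.shape)
    return np.sqrt(1.0 - beta_t) * x_prev + np.sqrt(beta_t) * noise

def forward_chain(x0, betas, rng):
    """Apply the transitions one after another, from x_0 to x_T."""
    x = x0
    for beta_t in betas:
        x = forward_step(x, beta_t, rng)
    return x

rng = np.random.default_rng(0)
betas, alpha_bars = make_schedule()
x0 = np.full(20000, 2.0)  # 20,000 copies of the same toy data point
x_T = forward_chain(x0, betas, rng)

# Composing the Gaussian steps gives a closed form for the marginal:
# x_T ~ Normal(sqrt(alpha_bar_T) * x0, 1 - alpha_bar_T)
expected_mean = np.sqrt(alpha_bars[-1]) * 2.0
expected_var = 1.0 - alpha_bars[-1]
print("mean:", x_T.mean(), "expected:", expected_mean)
print("var: ", x_T.var(), "expected:", expected_var)
```

Because the marginal has a closed form, training code usually samples a noisy version of the data at a random step directly instead of simulating the whole chain.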

Generative Modeling

Deep Belief Networks (DBNs) are probabilistic generative models composed of multiple layers of stochastic latent variables that capture hierarchical representations of data, enabling unsupervised learning and feature extraction. Diffusion models combine iterative noise addition with learned denoising, an approach closely related to score-based generative modeling, and can be formulated with either discrete steps or continuous-time diffusion processes. They achieve state-of-the-art results in high-fidelity image synthesis by modeling complex data distributions in this way.
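The generative (denoising) direction of a diffusion model can be sketched on a toy problem. In the example below the data distribution is assumed to be a one-dimensional Gaussian, so the ideal noise prediction has a closed form and stands in for the neural network that a real diffusion model would train. The schedule, the constants `MU` and `SIGMA`, and the function names are illustrative assumptions; the update rule follows the commonly used DDPM-style ancestral sampling step.

```python
import numpy as np

MU, SIGMA = 2.0, 0.5  # toy 1-D data distribution: Normal(MU, SIGMA^2)
T = 1000
betas = np.linspace(1e-4, 0.02, T)  # assumed linear noise schedule
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def predict_noise(x_t, t):
    """Exact E[noise | x_t] for Gaussian data; stands in for a trained network."""
    ab = alpha_bars[t]
    marginal_var = ab * SIGMA**2 + (1.0 - ab)
    return np.sqrt(1.0 - ab) * (x_t - np.sqrt(ab) * MU) / marginal_var

def sample(n, rng):
    """Start from pure noise and denoise one step at a time."""
    x = rng.standard_normal(n)
    for t in range(T - 1, -1, -1):
        eps_hat = predict_noise(x, t)
        # Remove the noise predicted for this step
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:  # no fresh noise is added on the final step
            x = x + np.sqrt(betas[t]) * rng.standard_normal(n)
    return x

samples = sample(5000, np.random.default_rng(0))
print("sample mean:", samples.mean(), "sample std:", samples.std())
```

The sample mean and standard deviation should land close to `MU` and `SIGMA`. With real data no closed-form predictor exists, which is why diffusion models train a network to approximate it.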

dowidth.com
