Differentiable programming is a programming paradigm in which whole programs, including their loops, branches, and data structures, are treated as differentiable functions, so their parameters can be tuned by gradient-based optimization. Automatic differentiation (AD) is a computational technique that computes derivatives of functions expressed as computer programs, exact up to floating-point rounding; it is what makes training machine learning models with gradient descent practical. This article compares the two and shows how they work together in AI and scientific computing.

Why it is important

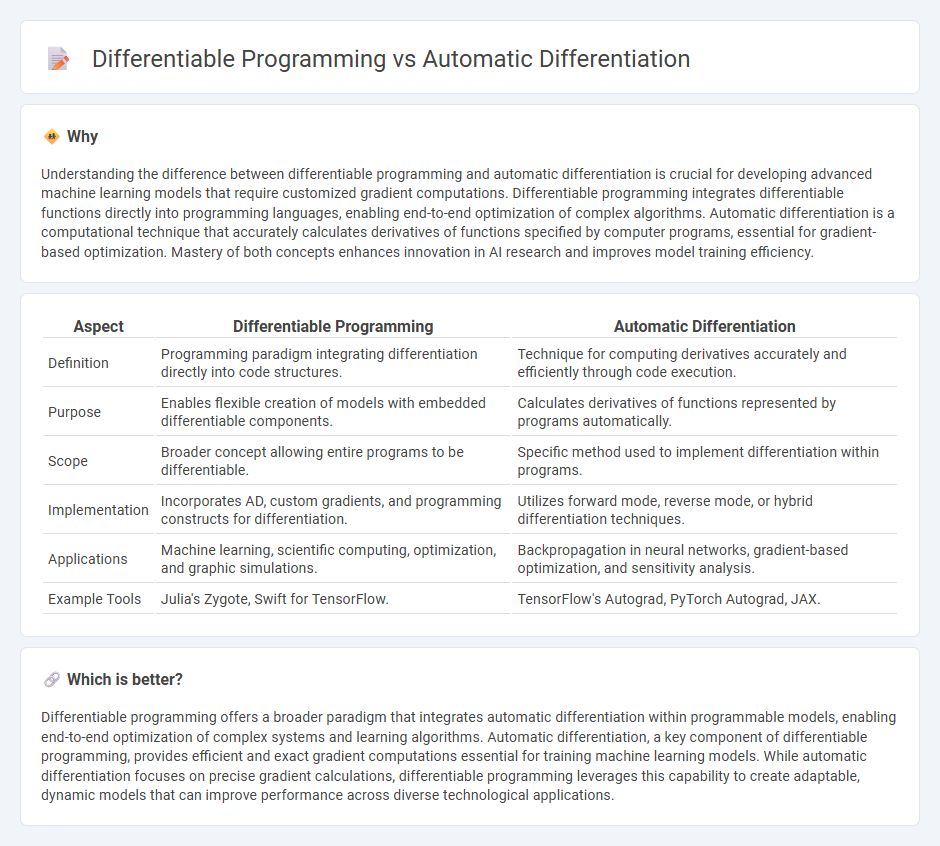

Understanding the difference between differentiable programming and automatic differentiation matters when building machine learning models that need customized gradient computations. Differentiable programming builds differentiable functions directly into programming languages, so complex algorithms can be optimized end to end. Automatic differentiation is the technique that accurately calculates the derivatives of functions specified by computer programs, which gradient-based optimization requires. Knowing which concept a tool or technique belongs to makes it easier to choose frameworks and to write custom gradient rules when the defaults fall short.

Comparison Table

| Aspect | Differentiable Programming | Automatic Differentiation |
|---|---|---|
| Definition | Programming paradigm that builds differentiation directly into code structures. | Technique for computing derivatives accurately and efficiently by executing or transforming code. |
| Purpose | Enables flexible models with embedded differentiable components. | Automatically calculates derivatives of functions represented by programs. |
| Scope | Broader concept: entire programs can be differentiable. | Specific method used to implement differentiation within programs. |
| Implementation | Combines AD, custom gradients, and language constructs for differentiation. | Uses forward mode, reverse mode, or hybrid (mixed-mode) techniques. |
| Applications | Machine learning, scientific computing, optimization, and graphics and simulation. | Backpropagation in neural networks, gradient-based optimization, and sensitivity analysis. |
| Example Tools | Julia's Zygote, Swift for TensorFlow (now archived). | TensorFlow's `tf.GradientTape`, PyTorch Autograd, JAX. |

Which is better?

The two are not really competitors. Automatic differentiation is a key component of differentiable programming: it provides the efficient, exact gradient computations needed to train machine learning models. Differentiable programming is the broader paradigm that builds on this capability, integrating AD into programmable models so that complex systems and learning algorithms can be optimized end to end. In short, AD answers the question "what is the derivative of this computation?", while differentiable programming uses that answer to make whole programs, including dynamic models, adaptable through training.

Connection

Differentiable programming relies on automatic differentiation to compute exact gradients efficiently inside complex models, which is what makes gradient-based optimization of those models possible. AD works by systematically applying the chain rule to the elementary operations that make up a function expressed as a computer program, which makes it the foundation of differentiable programming frameworks. Together, they support machine learning, physics simulations, and neural network training, all of which depend on exact gradients for optimization.
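As a small worked example (the function is chosen here purely for illustration), take $f(x) = \sin(x^2)$ and split it into elementary steps $v_0, v_1, v_2$. Forward mode carries a tangent $\dot v_i = \partial v_i / \partial x$ alongside each value, filled in from the top row down; reverse mode carries an adjoint $\bar v_i = \partial f / \partial v_i$, filled in from the bottom row up. Both reproduce $f'(x) = 2x\cos(x^2)$.

$$
\begin{aligned}
v_0 &= x, & \dot v_0 &= 1, & \bar v_0 &= \bar v_1 \cdot 2 v_0 = 2x\cos(x^2),\\
v_1 &= v_0^2, & \dot v_1 &= 2 v_0\,\dot v_0 = 2x, & \bar v_1 &= \bar v_2 \cos(v_1) = \cos(x^2),\\
v_2 &= \sin(v_1) = f(x), & \dot v_2 &= \cos(v_1)\,\dot v_1 = 2x\cos(x^2), & \bar v_2 &= 1.
\end{aligned}
$$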

Key Terms

Computational Graph

Automatic differentiation treats a function as a computational graph of elementary operations and applies the chain rule along its edges, computing exact derivatives of complex functions. Reverse mode, in particular, typically records this graph (or tape) during the forward pass and then propagates derivatives backward through it. Differentiable programming extends the idea by embedding AD in general-purpose programming languages, so gradients can be computed through arbitrary control flow and data structures, often by building the graph dynamically as the program runs.
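As a minimal sketch of reverse-mode AD over a recorded graph, here is plain Python using only the standard library. The names `Var`, `sin`, and `backward` are hypothetical, not any framework's API. Each operation creates a node that stores its local partial derivatives, and `backward` visits nodes in reverse topological order, applying the chain rule to accumulate gradients. Production frameworks are far more elaborate.

```python
import math


class Var:
    """A node in a computational graph, recorded as the forward pass runs."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # (parent node, local partial derivative) pairs
        self.grad = 0.0         # accumulated d(output)/d(this node)

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        # Local partials of u*v: d/du = v, d/dv = u
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __rmul__ = __mul__


def sin(x):
    # d/dx sin(x) = cos(x), stored as the local partial for the single parent
    return Var(math.sin(x.value), ((x, math.cos(x.value)),))


def backward(output):
    """Apply the chain rule from the output back to every node in its graph."""
    order, seen = [], set()

    def visit(node):  # depth-first post-order: inputs appear before their users
        if node in seen:
            return
        seen.add(node)
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Reversed post-order: a node's grad is complete before it is pushed to its inputs
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += local * node.grad


x, y = Var(2.0), Var(3.0)
z = x * y + sin(x)     # f(x, y) = x*y + sin(x)
backward(z)
print(x.grad, y.grad)  # df/dx = y + cos(x), df/dy = x
```

One backward sweep yields the gradient with respect to every input at once, which is why reverse mode suits scalar losses with many parameters.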

Gradient Calculation

Automatic differentiation computes exact gradients efficiently by applying the chain rule algorithmically over a computational graph, which gives precise gradients for neural networks and other optimization problems. Differentiable programming extends this by building gradient computation into programming languages and models, so gradients can flow through control structures such as loops and branches, and through custom functions. Together, these approaches underpin machine learning model training and more advanced gradient-based optimization.
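To illustrate gradients flowing through data-dependent control flow, here is a minimal sketch of forward-mode AD with dual numbers in plain Python. It is not how Zygote, JAX, or PyTorch are implemented, and the names `Dual` and `newton_sqrt` are hypothetical. The function computes sqrt(a) with Newton's method inside a loop that exits on a runtime test; the derivative follows exactly the iterations that ran and approaches the analytic value 1/(2 sqrt(a)).

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """Dual number val + der*eps with eps**2 = 0; der is d/d(seeded input)."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(v):
        # Treat plain numbers as constants (derivative 0)
        return v if isinstance(v, Dual) else Dual(float(v))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __mul__(self, other):
        o = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        o = Dual.lift(other)
        # Quotient rule: (u/v)' = (u'v - uv') / v**2
        return Dual(self.val / o.val, (self.der * o.val - self.val * o.der) / o.val ** 2)

    def __rtruediv__(self, other):
        return Dual.lift(other) / self


def newton_sqrt(a, rel_tol=1e-12, max_iter=100):
    """Hypothetical example: sqrt(a) by Newton's method, stopping on a runtime test."""
    x = Dual(1.0)  # initial guess is a constant, so its derivative is 0
    for _ in range(max_iter):
        if abs(x.val * x.val - a.val) <= rel_tol * a.val:  # data-dependent branch
            break
        x = 0.5 * (x + a / x)
    return x


a = Dual(4.0, 1.0)  # seed da/da = 1 to request d(sqrt(a))/da
root = newton_sqrt(a)
print(root.val, root.der)  # close to 2.0 and 1 / (2 * sqrt(4)) = 0.25
```

Seeding `der=1.0` on `a` requests the derivative with respect to `a`; forward mode needs one such pass per input, whereas the reverse-mode approach gets all input gradients from one backward sweep.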

Symbolic vs. Programmatic Differentiation

Symbolic differentiation generates exact derivative expressions by manipulating mathematical symbols. It is well suited to closed-form results but is prone to expression swell, where the derivative expression grows much larger than the original function, which makes complex functions inefficient to differentiate. Programmatic differentiation, as used in differentiable programming, instead applies the chain rule to the operations a program actually executes, for example by tracing the execution path at runtime, and produces derivative values rather than expressions. This lets it scale to large machine learning models and handle their control flow.
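To make expression swell concrete, here is a minimal sketch under simplifying assumptions: a naive symbolic differentiator over tuple expression trees with no simplification and no subexpression sharing (real computer algebra systems do both), applied to n steps of the logistic map l ← 4l(1 − l). All helper names are hypothetical. It compares tree sizes, then checks that the same derivative value comes out of `logistic_ad`, which carries (value, derivative) pairs through the loop: the propagation forward-mode AD automates, written out by hand here.

```python
def d(e):
    """Naive symbolic d/dx of an expression tree, with no simplification."""
    if e == "x":
        return 1
    if isinstance(e, (int, float)):
        return 0
    op, a, b = e
    if op in ("+", "-"):
        return (op, d(a), d(b))
    if op == "*":  # product rule: (ab)' = a'b + ab'
        return ("+", ("*", d(a), b), ("*", a, d(b)))
    raise ValueError(f"unsupported operator {op!r}")


def size(e):
    """Number of nodes in the tree (shared subtrees are counted each time)."""
    return 1 + size(e[1]) + size(e[2]) if isinstance(e, tuple) else 1


def evaluate(e, x):
    """Numerically evaluate an expression tree at x."""
    if e == "x":
        return x
    if isinstance(e, (int, float)):
        return e
    op, a, b = e
    va, vb = evaluate(a, x), evaluate(b, x)
    return {"+": va + vb, "-": va - vb, "*": va * vb}[op]


def logistic(n):
    """Expression tree for n steps of l <- 4*l*(1 - l), starting from l = x."""
    l = "x"
    for _ in range(n):
        l = ("*", ("*", 4, l), ("-", 1, l))
    return l


def logistic_ad(x, n):
    """Carry (value, derivative) through the same n-step loop, as forward mode does."""
    l, dl = x, 1.0
    for _ in range(n):
        # d/dl [4l(1 - l)] = 4 - 8l, chained with the incoming derivative dl
        l, dl = 4 * l * (1 - l), (4 - 8 * l) * dl
    return l, dl


for n in range(1, 5):
    expr = logistic(n)
    print(n, size(expr), size(d(expr)))  # derivative tree outgrows the expression

x0 = 0.3
symbolic = evaluate(d(logistic(4)), x0)
_, ad = logistic_ad(x0, 4)
print(symbolic, ad)  # same value up to floating-point rounding
```

The derivative tree grows faster than the expression tree at every step, while `logistic_ad` does a constant amount of extra work per loop iteration.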


dowidth.com