Edge AI processes data locally on devices such as smartphones and IoT sensors, enabling real-time decision-making with lower latency and stronger privacy. Cloud-based AI inference, by contrast, relies on centralized servers to perform complex computations. It offers scalable resources and continuous model updates, but often at the cost of higher latency and the need to transmit data off the device. The sections below compare the advantages and use cases of each approach.

Why it is important

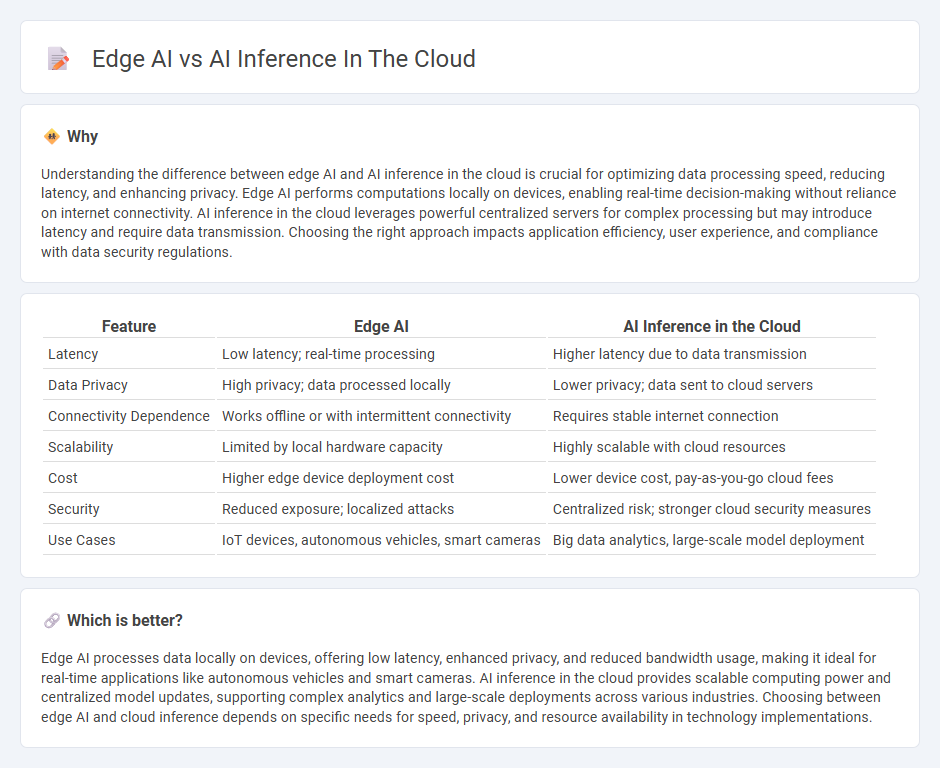

Understanding the difference between edge AI and AI inference in the cloud is crucial for optimizing data processing speed, reducing latency, and enhancing privacy. Edge AI performs computations locally on devices, enabling real-time decision-making without reliance on internet connectivity. AI inference in the cloud leverages powerful centralized servers for complex processing but may introduce latency and require data transmission. Choosing the right approach impacts application efficiency, user experience, and compliance with data security regulations.

Comparison Table

| Feature | Edge AI | AI Inference in the Cloud |
|---|---|---|
| Latency | Low; real-time processing on the device | Higher, due to data transmission to and from servers |
| Data Privacy | Higher; data is processed locally | Lower; data is sent to cloud servers |
| Connectivity Dependence | Works offline or with intermittent connectivity | Requires a stable internet connection |
| Scalability | Limited by local hardware capacity | Highly scalable with cloud resources |
| Cost | Higher up-front cost for edge hardware | Lower device cost; pay-as-you-go cloud fees |
| Security | Less data exposed in transit; attacks tend to be localized to individual devices | Centralized risk, mitigated by stronger cloud security measures |
| Use Cases | IoT devices, autonomous vehicles, smart cameras | Big data analytics, large-scale model deployment |
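The Cost row describes a trade-off between a one-time hardware purchase and recurring per-request fees. The sketch below estimates the month in which an edge deployment becomes cheaper than cloud inference. All inputs are hypothetical placeholders (hardware price, monthly upkeep, request volume, and a flat price per 1,000 requests); real cloud pricing often adds instance hours, storage, and data egress, which this simple model ignores.

```python
from typing import Optional


def cumulative_edge_cost(months: int, upfront_hw: float, monthly_upkeep: float) -> float:
    """One-time hardware purchase plus recurring upkeep (assumed cost model)."""
    return upfront_hw + monthly_upkeep * months


def cumulative_cloud_cost(months: int, requests_per_month: int, price_per_1k: float) -> float:
    """Pure pay-as-you-go model: a flat, hypothetical price per 1,000 requests."""
    return months * (requests_per_month / 1000) * price_per_1k


def break_even_month(
    upfront_hw: float,
    monthly_upkeep: float,
    requests_per_month: int,
    price_per_1k: float,
    horizon_months: int = 60,
) -> Optional[int]:
    """First month in which cumulative edge cost <= cumulative cloud cost.

    Returns None if edge never becomes cheaper within the horizon.
    """
    for month in range(1, horizon_months + 1):
        edge = cumulative_edge_cost(month, upfront_hw, monthly_upkeep)
        cloud = cumulative_cloud_cost(month, requests_per_month, price_per_1k)
        if edge <= cloud:
            return month
    return None
```

With illustrative inputs of $300 hardware, $5 per month upkeep, and 2 million requests per month at $0.02 per 1,000, the cloud bill is $40 per month and `break_even_month(300, 5, 2_000_000, 0.02)` returns 9. At low request volumes the upkeep alone can exceed the cloud bill, so the function returns `None`.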

Which is better?

Edge AI processes data locally on devices, offering low latency, enhanced privacy, and reduced bandwidth usage, which makes it well suited to real-time applications such as autonomous vehicles and smart cameras. Cloud-based AI inference provides scalable computing power and centralized model updates, supporting complex analytics and large-scale deployments. Neither is universally better: the choice depends on each application's requirements for speed, privacy, connectivity, and available computing resources.
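One way to make these criteria concrete is a rough rule-of-thumb function. This is a hypothetical heuristic rather than an established method: the `Requirements` fields and the assumed 100 ms typical cloud round trip are illustrative choices, not measured values.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    max_latency_ms: float       # response-time budget the application can tolerate
    data_is_sensitive: bool     # e.g. raw video or health data that should stay on-device
    needs_offline: bool         # must keep working without a network connection
    model_fits_on_device: bool  # model size and compute fit the target hardware


def choose_deployment(req: Requirements, typical_cloud_rtt_ms: float = 100.0) -> str:
    """Rough heuristic; typical_cloud_rtt_ms is an assumed figure, not a measurement."""
    edge_preferred = (
        req.needs_offline
        or req.data_is_sensitive
        or req.max_latency_ms < typical_cloud_rtt_ms  # cloud round trip alone would blow the budget
    )
    if edge_preferred and req.model_fits_on_device:
        return "edge"
    if edge_preferred:
        # Requirements favor edge but the full model does not fit: run a smaller
        # model on-device and escalate to the cloud only when needed.
        return "hybrid"
    return "cloud"
```

For example, a 20 ms budget with a model that fits on the device yields `"edge"`, while a relaxed 2-second budget with no privacy or offline constraints yields `"cloud"`.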

Connection

Edge AI processes data locally on devices to ensure low latency and real-time decision-making, while cloud-based AI inference provides scalable computational power and access to centralized data resources. The two approaches complement each other: time-critical inference can run on the device, while heavier workloads and aggregated data are sent to the cloud, which in turn distributes updated models back to the devices. Combining edge AI with cloud-based inference improves efficiency, scalability, and accuracy in applications such as autonomous vehicles, smart cities, and industrial IoT.
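A common way to split work between the two is confidence-based escalation: a compact model answers on the device, and only uncertain inputs are forwarded to a larger cloud model. The sketch assumes both models are plain Python callables returning a `(label, confidence)` pair; `edge_model`, `cloud_model`, and the 0.8 threshold are hypothetical stand-ins, not any specific framework's API.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def hybrid_infer(
    sample: object,
    edge_model: Callable[[object], Prediction],
    cloud_model: Callable[[object], Prediction],
    confidence_threshold: float = 0.8,
    cloud_available: bool = True,
) -> Tuple[str, str]:
    """Return (label, where_it_ran): edge first, escalate uncertain cases to the cloud."""
    label, confidence = edge_model(sample)
    # Keep the local answer if it is confident enough, or if the cloud is unreachable.
    if confidence >= confidence_threshold or not cloud_available:
        return label, "edge"
    cloud_label, _ = cloud_model(sample)
    return cloud_label, "cloud"
```

Falling back to the edge answer when the cloud is unreachable is one design choice; an application could instead queue the sample and retry once connectivity returns.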

Key Terms

Latency

Cloud-based AI inference often incurs higher latency because each request must travel from the edge device to a remote server and back, which can hinder real-time decision-making. Edge AI minimizes latency by running inference locally on the device, enabling the fast response times that applications such as autonomous vehicles and industrial automation require.
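The gap can be estimated with simple arithmetic: a cloud request pays the network round trip, the upload time, and server-side inference, while an edge request pays only local inference. The numbers used below (a 200 KB image, a 10 Mbit/s uplink, a 50 ms round trip, 10 ms server inference, 20 ms device inference) are assumptions for illustration, and the model ignores queuing, connection setup, and download time.

```python
def cloud_latency_ms(payload_bytes: int, uplink_mbps: float, rtt_ms: float, server_infer_ms: float) -> float:
    """Network round trip + payload upload time + server-side inference.

    Assumes the response is small enough that its download time is negligible.
    """
    upload_ms = payload_bytes * 8 / (uplink_mbps * 1_000_000) * 1000
    return rtt_ms + upload_ms + server_infer_ms


def edge_latency_ms(device_infer_ms: float) -> float:
    """No network hop: latency is just the on-device inference time."""
    return device_infer_ms


FRAME_BUDGET_MS = 1000 / 30  # time available per frame at 30 frames per second
```

With these inputs, `cloud_latency_ms(200_000, 10, 50, 10)` gives about 220 ms (160 ms of it upload), versus 20 ms for `edge_latency_ms(20)`. At 30 frames per second the per-frame budget is roughly 33 ms, which only the edge path meets.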

Bandwidth

Cloud AI inference often requires substantial bandwidth to transmit data between devices and remote servers, which can add latency and raise operational costs. Edge AI processes data locally, so only results or summaries need to be sent, significantly reducing bandwidth usage and improving real-time responsiveness.
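To see the scale of the savings, compare streaming camera frames to the cloud with sending only compact event messages produced by an on-device model. The frame size, frame rate, and message size below are assumed values chosen for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def monthly_upload_gb(bytes_per_message: int, messages_per_second: float, days: int = 30) -> float:
    """Data one device uploads over `days`, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return bytes_per_message * messages_per_second * days * SECONDS_PER_DAY / 1e9


# Cloud inference: stream every compressed frame (assumed 50 KB at 10 frames/s).
raw_stream_gb = monthly_upload_gb(50_000, 10)
# Edge inference: send only a small event message (assumed 200 bytes every 10 s).
events_only_gb = monthly_upload_gb(200, 0.1)
```

Under these assumptions, streaming frames amounts to 1,296 GB per device over 30 days, while sending only events amounts to about 0.05 GB.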

Data Privacy

Cloud AI inference offers scalable computing power but increases data privacy risks, because data must be transmitted off the device and stored centrally, where it is exposed to vulnerabilities. Edge AI processes data locally, keeping sensitive information on the device and minimizing its exposure.
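Data minimization on the device can be as simple as reducing raw sensor data to an aggregate before anything is transmitted. In this sketch, a hypothetical `detect` callable stands in for an on-device model, and only a person count, serialized as JSON, is returned for upload; the raw frame never appears in the payload.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    label: str         # class predicted by the on-device model
    confidence: float  # model score in [0, 1]


def process_frame_on_device(
    raw_frame: bytes,
    detect: Callable[[bytes], List[Detection]],
    min_confidence: float = 0.5,
) -> str:
    """Run inference locally and return only an aggregate payload for upload."""
    detections = detect(raw_frame)  # raw pixels are consumed here, on the device
    person_count = sum(
        1 for d in detections if d.label == "person" and d.confidence >= min_confidence
    )
    # Only the derived count is serialized; raw_frame is never included in the payload.
    return json.dumps({"person_count": person_count})
```

Which aggregates are safe to share depends on the application; a count is used here only as a simple example of data that is far less sensitive than the frame it came from.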

dowidth.com