Deep learning forecasting leverages neural networks to model complex, nonlinear financial data patterns in accounting, enhancing accuracy in revenue and expense predictions. Random forest employs ensemble learning with decision trees, offering robust performance in identifying trends and anomalies in financial statements. Explore how these advanced techniques revolutionize accounting forecasts and decision-making processes.

Why it is important

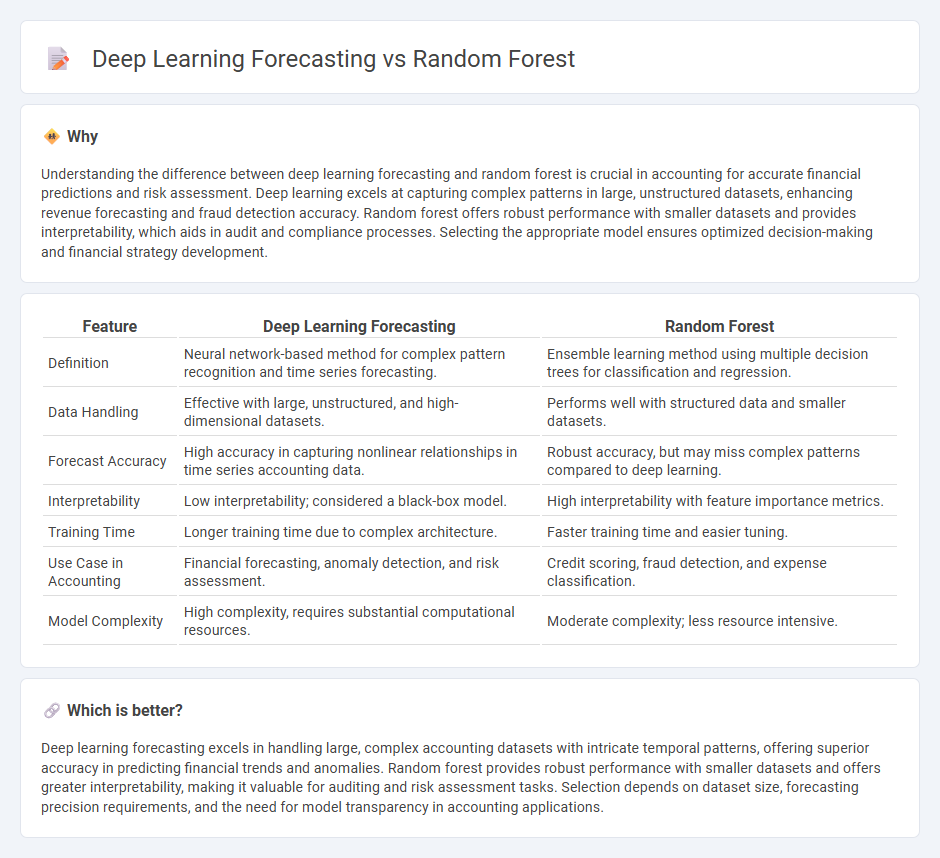

Understanding the difference between deep learning forecasting and random forest is crucial in accounting for accurate financial predictions and risk assessment. Deep learning excels at capturing complex patterns in large, unstructured datasets, enhancing revenue forecasting and fraud detection accuracy. Random forest offers robust performance with smaller datasets and provides interpretability, which aids in audit and compliance processes. Selecting the appropriate model ensures optimized decision-making and financial strategy development.

Comparison Table

| Feature | Deep Learning Forecasting | Random Forest |

|---|---|---|

| Definition | Neural network-based method for complex pattern recognition and time series forecasting. | Ensemble learning method using multiple decision trees for classification and regression. |

| Data Handling | Effective with large, unstructured, and high-dimensional datasets. | Performs well with structured data and smaller datasets. |

| Forecast Accuracy | High accuracy in capturing nonlinear relationships in time series accounting data. | Robust accuracy, but may miss complex patterns compared to deep learning. |

| Interpretability | Low interpretability; considered a black-box model. | High interpretability with feature importance metrics. |

| Training Time | Longer training time due to complex architecture. | Faster training time and easier tuning. |

| Use Case in Accounting | Financial forecasting, anomaly detection, and risk assessment. | Credit scoring, fraud detection, and expense classification. |

| Model Complexity | High complexity, requires substantial computational resources. | Moderate complexity; less resource intensive. |

Which is better?

Deep learning forecasting excels in handling large, complex accounting datasets with intricate temporal patterns, offering superior accuracy in predicting financial trends and anomalies. Random forest provides robust performance with smaller datasets and offers greater interpretability, making it valuable for auditing and risk assessment tasks. Selection depends on dataset size, forecasting precision requirements, and the need for model transparency in accounting applications.

Connection

Deep learning forecasting and Random Forest are connected through their application in predictive analytics within accounting, enabling more accurate financial forecasting and risk assessment. Deep learning models capture complex, non-linear relationships in large accounting datasets, while Random Forest offers robust ensemble-based predictions by aggregating multiple decision trees. Integrating both methods enhances the precision and reliability of accounting forecasts, supporting better decision-making in financial planning and auditing.

Key Terms

Model accuracy

Random forest models excel in forecasting by handling high-dimensional data with lower computational cost and reduced overfitting risk, often achieving strong accuracy in structured datasets. Deep learning methods, particularly recurrent neural networks and LSTM, capture complex temporal patterns and nonlinear relationships, delivering superior accuracy in large-scale and unstructured time series data. Explore detailed comparisons and case studies to understand which approach best suits your forecasting needs.

Feature importance

Random forest excels in forecasting by providing clear feature importance scores that quantify the contribution of each variable, enhancing model interpretability. Deep learning models, while often delivering superior predictive accuracy, rely on complex architectures that obscure feature effects, requiring specialized methods like SHAP or LIME for interpretability. Explore detailed comparisons and practical applications to understand which method suits your forecasting needs best.

Overfitting

Random forest models reduce overfitting by averaging multiple decision trees, enhancing prediction stability for forecasting tasks. Deep learning networks, while powerful, often require extensive data and regularization techniques to prevent overfitting due to their complex architectures. Explore more about balancing model complexity and forecast accuracy in advanced predictive analytics.

Source and External Links

Random Forest Classification with Scikit-Learn - This tutorial provides a comprehensive introduction to random forest classification using Python, focusing on the ensemble machine learning algorithm that aggregates the predictions of multiple decision trees.

Random Forest - Random forests are an ensemble learning method used for classification, regression, and other tasks, averaging the predictions of multiple decision trees to reduce overfitting.

What Is Random Forest? - Random forest is a machine learning algorithm that combines the output of multiple decision trees to reach a single result, handling both classification and regression problems efficiently.

dowidth.com

dowidth.com