Differential privacy protects individuals by injecting calibrated noise into analysis results, so that the output reveals almost nothing about whether any single person's data was included. Secure multi-party computation (SMPC) lets several parties jointly compute a function over their private inputs without revealing those inputs to one another. The sections below compare how each technique works, where each fits, and how the two can be combined.

Why it is important

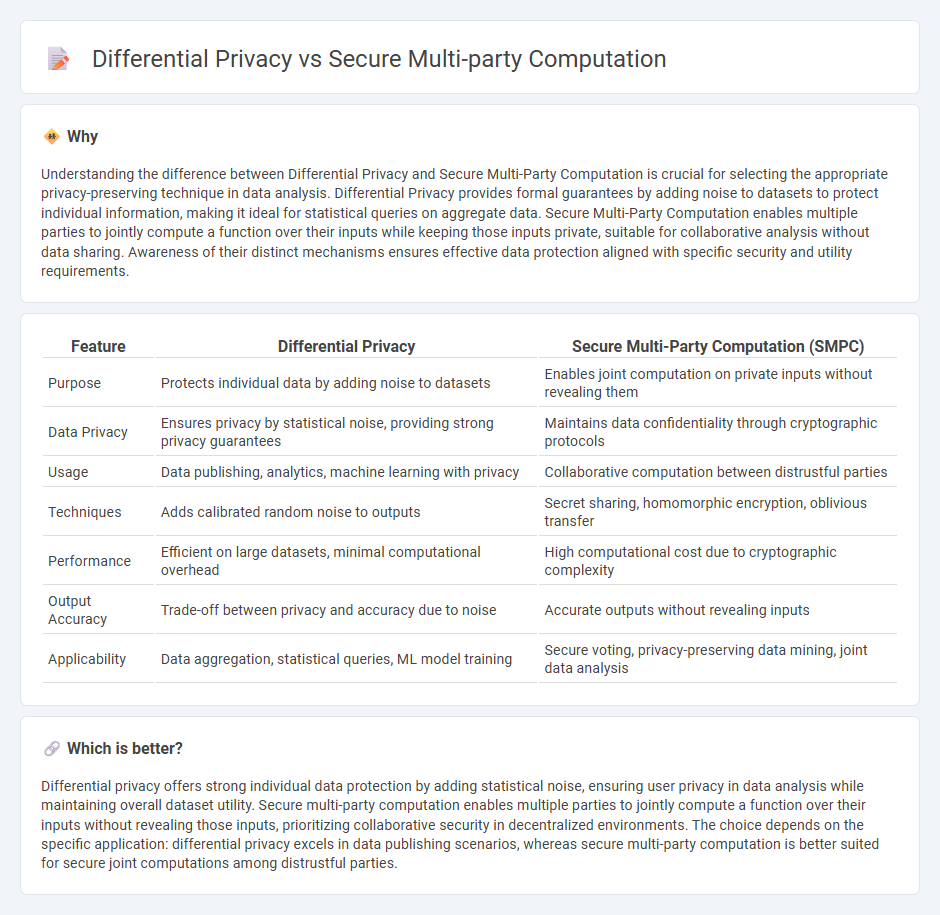

Differential Privacy and Secure Multi-Party Computation solve different problems, so understanding the difference is crucial for choosing the right privacy-preserving technique for data analysis. Differential Privacy provides a formal, quantifiable guarantee, controlled by a privacy budget ε, by adding calibrated noise (usually to query results or model updates) so that no output depends too strongly on any one individual. This makes it well suited to statistical queries over aggregate data. Secure Multi-Party Computation enables multiple parties to jointly compute a function over their inputs while keeping those inputs private, which suits collaborative analysis where the parties cannot share raw data. Knowing how each mechanism works lets you match the technique to your specific security and utility requirements.

Comparison Table

| Feature | Differential Privacy (DP) | Secure Multi-Party Computation (SMPC) |
|---|---|---|
| Purpose | Limits what any released result reveals about a single individual | Enables joint computation on private inputs without revealing them |
| Privacy mechanism | Calibrated statistical noise, with a formal guarantee quantified by ε (and δ) | Cryptographic protocols that keep inputs confidential during the computation |
| Usage | Data publishing, analytics, privacy-preserving machine learning | Collaborative computation between mutually distrustful parties |
| Techniques | Adds calibrated random noise to outputs | Secret sharing, homomorphic encryption, oblivious transfer |
| Performance | Low computational overhead; scales to large datasets | High computational and communication cost from cryptographic operations |
| Output accuracy | Trade-off between privacy and accuracy: stronger privacy (smaller ε) means more noise | Exact function output, although the output itself may still reveal information about the inputs |
| Applicability | Data aggregation, statistical queries, ML model training | Secure voting, privacy-preserving data mining, joint data analysis |

Which is better?

Neither technique is better in general, because they protect against different threats. Differential privacy limits what published results reveal about any individual, at the cost of some accuracy lost to the added noise. Secure multi-party computation keeps each party's inputs hidden from the others during a joint computation, at the cost of heavier computation and communication, but on its own it does not limit what the final output reveals. Differential privacy therefore excels in data publishing and analytics scenarios, whereas secure multi-party computation is better suited to joint computations among distrustful parties.

Connection

Differential privacy and secure multi-party computation (SMPC) address complementary risks. SMPC protects inputs during the computation, so no party sees another party's raw data, while differential privacy protects the result, so the released output does not expose any individual. Combining them, for example by computing a noisy aggregate inside an SMPC protocol, supports privacy-preserving analytics across distributed datasets in sectors such as healthcare and finance without trusting any single party with either the raw data or the exact result.
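
As a rough illustration of that combination, the Python sketch below follows the pairwise-masking pattern used in secure aggregation (a swapped-in SMPC technique, not one this comparison prescribes) together with distributed Gaussian noise. It assumes honest-but-curious parties, no dropouts, and masks that in a real deployment would be derived from a key agreed between each pair of parties; `PRIME`, `SCALE`, and all function names are hypothetical. Each party adds a noise slice with variance σ²/n, so the slices sum to N(0, σ²), and the aggregator only ever sees masked values. Calibrating σ to a specific (ε, δ) target is not shown.

```python
import random
import secrets

PRIME = 2**61 - 1  # hypothetical field size for modular arithmetic
SCALE = 1_000      # hypothetical fixed-point precision (three decimal places)


def encode(x: float) -> int:
    """Fixed-point encode a real number as a field element."""
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    """Decode a field element; the upper half of the field represents negatives."""
    signed = v if v <= PRIME // 2 else v - PRIME
    return signed / SCALE


def masked_inputs(values: list[float], sigma: float, rng: random.Random) -> list[int]:
    """Return each party's noisy, masked contribution (simulated in one process).

    Party i adds Gaussian noise with standard deviation sigma / sqrt(n), so the
    n independent slices sum to N(0, sigma^2). For every pair i < j, a random
    mask is added by party i and subtracted by party j; all masks cancel in
    the total while hiding each individual contribution.
    """
    n = len(values)
    contributions = [encode(v + rng.gauss(0.0, sigma / n**0.5)) for v in values]
    for i in range(n):
        for j in range(i + 1, n):
            # Stands in for a mask derived from a key agreed by parties i and j.
            mask = secrets.randbelow(PRIME)
            contributions[i] = (contributions[i] + mask) % PRIME
            contributions[j] = (contributions[j] - mask) % PRIME
    return contributions


def aggregate(contributions: list[int]) -> float:
    """Aggregator: sums the masked values; only the noisy total is revealed."""
    return decode(sum(contributions) % PRIME)


rng = random.Random(42)
hospital_counts = [120.0, 85.0, 203.0]  # hypothetical per-hospital case counts
print(aggregate(masked_inputs(hospital_counts, sigma=5.0, rng=rng)))  # near 408
```

Because each party knows its own noise slice, colluding parties can subtract theirs and see a less noisy total; deployed designs typically add extra noise to tolerate a bounded number of colluders.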

Key Terms

Encryption

Secure multi-party computation (SMPC) lets multiple parties jointly compute a function over their inputs while keeping those inputs private, using cryptographic techniques such as homomorphic encryption, which allows computation directly on encrypted values, or secret sharing, which splits each input into random-looking shares. Differential privacy, on the other hand, does not encrypt anything: it adds noise to the data or to query results, and its guarantee holds even though the noisy output is published in the clear.
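
Additive secret sharing is the simplest way to see how SMPC hides inputs. The sketch below is a single-process simulation under a semi-honest model, with hypothetical function names and a hypothetical modulus `PRIME`. Each input is split into random shares that add up to it modulo a prime, so any n - 1 of its n shares are uniformly random and reveal nothing about that input.

```python
import secrets

PRIME = 2**61 - 1  # hypothetical prime modulus; inputs and sums must stay below it


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine additive shares into the value they encode."""
    return sum(shares) % PRIME


def secure_sum(inputs: list[int]) -> int:
    """Simulate n parties computing the sum of their private inputs.

    all_shares[i][j] is the share of party i's input sent to party j. Each
    party j adds up the shares it received and publishes only that partial
    sum; the partial sums reconstruct the total, not the individual inputs.
    """
    n = len(inputs)
    all_shares = [share(x, n) for x in inputs]
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    return reconstruct(partial_sums)


salaries = [52_000, 61_000, 58_500]  # hypothetical private inputs, one per party
print(secure_sum(salaries))  # 171500
```

No party ever holds another party's complete input, yet the result is exact: no noise is involved, which matches the output-accuracy row of the comparison table.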

Noise injection

Secure multi-party computation (SMPC) does not add noise to its output. The randomness used inside its protocols (for example, random shares or masks) cancels out, so the parties obtain the exact result. Differential privacy, however, deliberately injects calibrated noise into query results to mask individual contributions. The amount of noise is set by the query's sensitivity (how much one person's data can change the result) and by the privacy parameters ε (epsilon) and δ (delta), which quantify the permitted privacy loss: smaller values mean stronger privacy and noisier results.
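
To make the roles of sensitivity and ε concrete, here is a minimal Python sketch of the Laplace mechanism for a counting query. This is pure ε-differential privacy, so δ = 0. The function names and the patient records are hypothetical. Laplace noise is sampled as the difference of two exponential draws with scale 1/ε.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person's record
    changes the true count by at most 1, so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    true_count = sum(records)
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(7)
has_condition = [True] * 40 + [False] * 60  # hypothetical patient records
for eps in (0.1, 1.0, 10.0):
    # Smaller epsilon: stronger privacy, larger typical error.
    print(eps, round(dp_count(has_condition, eps, rng), 2))
```

Running it shows the trade-off from the comparison table. At ε = 0.1 the expected absolute error is 10, while at ε = 10 it is 0.1. Naive floating-point noise sampling like this is known to leak information through low-order bits, so production systems should rely on a vetted differential privacy library.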

Trust assumptions

Secure multi-party computation (SMPC) does not require a trusted party. Its protocols are proven secure against a specified adversary model, either semi-honest (parties follow the protocol but try to learn from what they see) or malicious (parties may deviate arbitrarily), usually with a bound on how many parties may collude. Differential privacy (DP) in its standard, central form assumes a trusted data curator who holds the raw data and applies the noise mechanism correctly. The local model removes this assumption by having each user add noise before their data leaves their device, at the cost of lower accuracy. These trust assumptions largely determine which technique fits a given data security framework.
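
Randomized response is the classic illustration of the local model. Each user perturbs their own answer, so the collector never needs to be trusted with true values. The Python sketch below is illustrative only. The function names and the survey data are hypothetical.

```python
import math
import random


def randomized_response(true_answer: bool, epsilon: float, rng: random.Random) -> bool:
    """Run on the user's device: report the truth with probability
    e^eps / (1 + e^eps), otherwise report the opposite answer.

    The ratio of report probabilities for any two inputs is at most e^eps,
    which is epsilon-local differential privacy; no curator sees true answers.
    """
    p_truth = math.exp(epsilon) / (1 + math.exp(epsilon))
    return true_answer if rng.random() < p_truth else not true_answer


def estimate_fraction(reports: list[bool], epsilon: float) -> float:
    """Untrusted collector: unbiased estimate of the true 'yes' fraction."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # E[observed] = p*f + (1 - p)*(1 - f), so f = (observed - (1 - p)) / (2p - 1).
    return (observed - (1 - p)) / (2 * p - 1)


rng = random.Random(1)
truth = [i < 3_000 for i in range(10_000)]  # hypothetical: 30% answer "yes"
reports = [randomized_response(t, 1.0, rng) for t in truth]
print(round(estimate_fraction(reports, 1.0), 3))  # roughly 0.3; varies with the seed
```

The estimate is noisier than a central-model count at the same ε, which is the accuracy cost of removing the trusted curator.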


dowidth.com
