Edge inference processes data locally on devices such as smartphones or IoT sensors. This reduces response latency compared with cloud inference, which sends data to centralized servers for analysis. Cloud inference offers scalable computing power and simpler, centralized model updates, but it can suffer from network delays and raises data privacy concerns. This article compares the two approaches to help you decide which one fits your application.

Why it is important

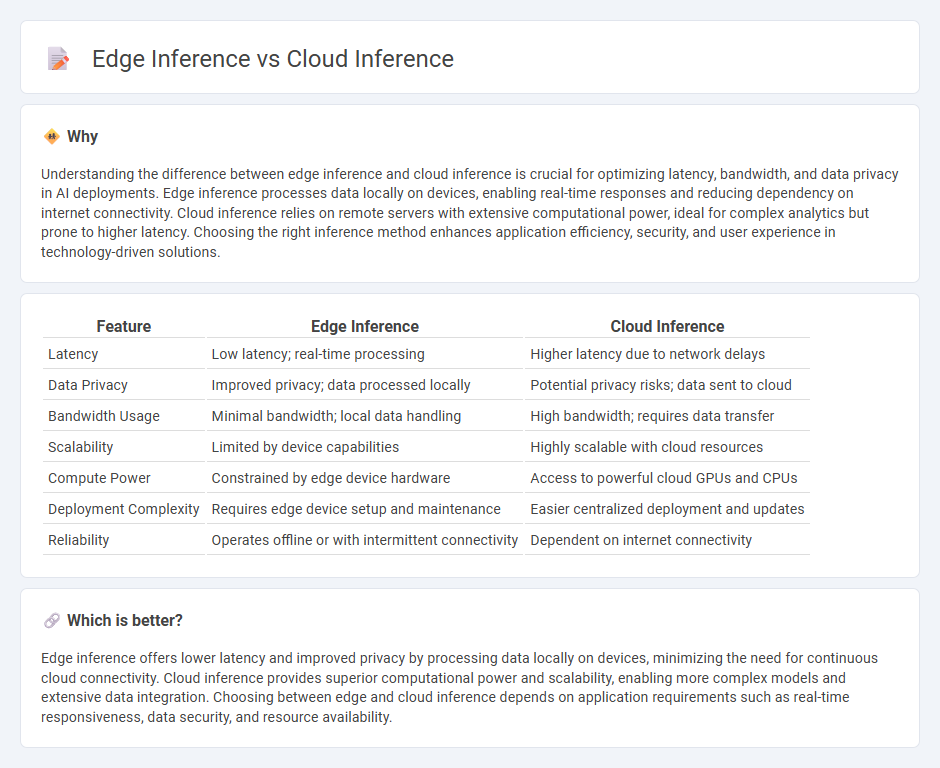

Understanding the difference between edge inference and cloud inference is crucial for optimizing latency, bandwidth, and data privacy in AI deployments. Edge inference processes data locally on devices, enabling real-time responses and reducing dependence on internet connectivity. Cloud inference relies on remote servers with extensive computational power, which suits complex analytics but adds network latency. Choosing the right inference method improves application efficiency, security, and user experience.

Comparison Table

| Feature | Edge Inference | Cloud Inference |
|---|---|---|
| Latency | Low latency; real-time processing | Higher latency due to network delays |
| Data Privacy | Improved privacy; data processed locally | Potential privacy risks; data sent to cloud |
| Bandwidth Usage | Minimal bandwidth; local data handling | High bandwidth; requires data transfer |
| Scalability | Limited by device capabilities | Highly scalable with cloud resources |
| Compute Power | Constrained by edge device hardware | Access to powerful cloud GPUs and CPUs |
| Deployment Complexity | Requires setup and maintenance on each edge device | Easier centralized deployment and updates |
| Reliability | Operates offline or with intermittent connectivity | Dependent on internet connectivity |

Which is better?

Edge inference offers lower latency and improved privacy by processing data locally on devices, minimizing the need for continuous cloud connectivity. Cloud inference provides superior computational power and scalability, enabling more complex models and extensive data integration. Choosing between edge and cloud inference depends on application requirements such as real-time responsiveness, data security, and resource availability.
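The trade-off above can be turned into a rough checklist. The sketch below is an illustrative rule of thumb, not a standard method: the `Requirements` fields and the `choose_inference` function are hypothetical names, and the rules simply encode the criteria from the comparison table (latency, privacy, offline reliability, device compute). It defaults to the cloud when nothing forces local processing, reflecting the table's point about easier centralized deployment.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical application requirements, chosen for illustration."""
    latency_budget_ms: float    # maximum acceptable end-to-end latency
    network_rtt_ms: float       # typical round trip to the cloud endpoint
    data_must_stay_local: bool  # e.g. a privacy or regulatory constraint
    needs_offline: bool         # must keep working without connectivity
    model_size_mb: float        # memory the model needs at inference time
    device_memory_mb: float     # memory available for the model on the device


def choose_inference(req: Requirements) -> str:
    """Rule-of-thumb choice between edge and cloud inference."""
    # If the network round trip alone exceeds the budget, the cloud cannot meet it.
    cloud_too_slow = req.network_rtt_ms >= req.latency_budget_ms
    edge_required = req.data_must_stay_local or req.needs_offline or cloud_too_slow
    fits_on_device = req.model_size_mb <= req.device_memory_mb

    if edge_required:
        # A model that does not fit must be shrunk (e.g. quantized) before deployment.
        return "edge" if fits_on_device else "edge (model must be compressed)"
    # Nothing forces local processing: prefer the cloud's compute and easier updates.
    return "cloud"


if __name__ == "__main__":
    realtime = Requirements(20, 50, False, False, 10, 512)
    batch = Requirements(500, 50, False, False, 10, 512)
    print(choose_inference(realtime))  # edge
    print(choose_inference(batch))     # cloud
```

A real decision would also weigh cost, model update frequency, and fleet size; the point here is only that hard constraints (privacy, offline operation, a latency budget below the network round trip) push toward the edge, while the remaining cases can take advantage of cloud resources.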

Connection

Edge inference processes data locally on devices such as IoT sensors or smartphones, reducing latency and bandwidth usage, while cloud inference relies on powerful remote servers for complex computations and model updates. The two are often combined in hybrid architectures: edge devices pre-process or filter data and send only the relevant information to the cloud for deeper analysis. This division of work keeps time-critical decisions on the device while reserving cloud resources for heavier computation.
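A hybrid pipeline of this kind can be sketched as follows, assuming a sensor that produces windows of numeric readings and a fixed anomaly threshold. The edge step summarizes each window and keeps only readings above the threshold; the window is escalated to the cloud only when something relevant was found. `FakeCloudClient` is a hypothetical stand-in for a real cloud endpoint and merely records what it would have received.

```python
from statistics import mean


def preprocess_on_edge(readings: list[float], threshold: float) -> dict:
    """Edge step: summarize a window of sensor readings and keep only anomalies."""
    return {
        "count": len(readings),
        "mean": mean(readings) if readings else 0.0,
        "anomalies": [r for r in readings if r > threshold],
    }


class FakeCloudClient:
    """Hypothetical stand-in for a cloud analysis endpoint; it only records payloads."""

    def __init__(self) -> None:
        self.received: list[dict] = []

    def analyze(self, payload: dict) -> None:
        self.received.append(payload)


def run_hybrid(readings: list[float], threshold: float, cloud: FakeCloudClient) -> bool:
    """Return True if the window was escalated to the cloud, False if handled on the edge."""
    summary = preprocess_on_edge(readings, threshold)
    if summary["anomalies"]:
        cloud.analyze(summary)  # only the summary and anomalies leave the device
        return True
    return False


if __name__ == "__main__":
    cloud = FakeCloudClient()
    print(run_hybrid([20.1, 20.3, 35.7, 20.2], threshold=30.0, cloud=cloud))  # True
    print(run_hybrid([20.0, 21.0, 20.5], threshold=30.0, cloud=cloud))        # False
    print(len(cloud.received))                                                 # 1
```

In practice the edge step would usually be a small model rather than a fixed threshold, but the structure is the same: cheap, local filtering first, with cloud analysis only for the data that warrants it.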

Key Terms

Latency

Cloud inference processes data on centralized servers, which adds network transmission delay to every request. Edge inference performs computations locally on devices and avoids that network round trip, which usually lowers end-to-end latency enough for real-time decision-making in applications such as autonomous vehicles and IoT sensors.
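A back-of-the-envelope comparison makes the latency argument concrete. The model below is a simplification that ignores queuing, connection setup, and response download: cloud latency is the network round trip plus upload time plus server inference time, while edge latency is only the local inference time. All numbers in the example are hypothetical.

```python
def cloud_latency_ms(rtt_ms: float, payload_bytes: int, uplink_mbps: float,
                     server_compute_ms: float) -> float:
    """Rough cloud latency: network round trip + upload time + server inference time."""
    # bits * 1000 (ms per s) divided by bits per second gives the upload time in ms
    upload_ms = payload_bytes * 8 * 1000 / (uplink_mbps * 1_000_000)
    return rtt_ms + upload_ms + server_compute_ms


def edge_latency_ms(device_compute_ms: float) -> float:
    """Edge latency: only local inference time, since no network hop is needed."""
    return device_compute_ms


if __name__ == "__main__":
    # Hypothetical numbers: 200 KB image, 10 Mbps uplink, 50 ms RTT,
    # 10 ms on a cloud GPU versus 40 ms on a slower edge accelerator.
    print(cloud_latency_ms(50, 200_000, 10, 10))  # 220.0
    print(edge_latency_ms(40))                    # 40
```

Even though the edge device computes four times more slowly in this example, it responds first because the upload and round trip dominate. With a much heavier model or a much weaker device, the balance can flip in favor of the cloud.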

Bandwidth

Cloud inference relies on centralized servers, so large volumes of raw data must be transmitted for processing. This consumes significant bandwidth and can increase both latency and operating costs. Edge inference processes data locally on devices or nearby edge servers and sends only processed results or critical data to the cloud, substantially reducing bandwidth usage.
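The size of the bandwidth saving is simple arithmetic. The sketch below assumes a camera that would otherwise stream continuously to the cloud at 4 Mbps, compared with an edge device that uploads one 200-byte inference result per second; both figures are hypothetical and only meant to show the order of magnitude.

```python
SECONDS_PER_DAY = 86_400


def stream_bytes_per_day(bitrate_mbps: float) -> float:
    """Cloud inference on a continuous stream: every bit is uploaded."""
    return bitrate_mbps * 1_000_000 / 8 * SECONDS_PER_DAY


def event_bytes_per_day(event_bytes: int, events_per_second: float) -> float:
    """Edge inference: only small result messages are uploaded."""
    return event_bytes * events_per_second * SECONDS_PER_DAY


if __name__ == "__main__":
    raw = stream_bytes_per_day(4)          # hypothetical 4 Mbps camera stream
    results = event_bytes_per_day(200, 1)  # hypothetical 200-byte result each second
    print(f"raw video: {raw / 1e9:.1f} GB/day")          # raw video: 43.2 GB/day
    print(f"edge results: {results / 1e6:.2f} MB/day")   # edge results: 17.28 MB/day
    print(f"reduction: {raw / results:.0f}x")            # reduction: 2500x
```

Real savings depend on how often the edge device must still upload raw samples, for example to retrain models or to let the cloud review uncertain cases.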

Data Privacy

Cloud inference processes data on centralized servers, so sensitive information must leave the device and can be exposed in transit or on the server side. Edge inference executes AI models locally on devices, minimizing data exposure and enhancing privacy by keeping information within the user's environment.

dowidth.com
