Generative Adversarial Networks (GANs) harness a dual-network system to create realistic data by pitting a generator against a discriminator, excelling in high-quality image synthesis and data augmentation. Restricted Mixture Models (RMMs) use probabilistic framework combining multiple restricted components to capture complex data distributions with interpretable clustering capabilities. Explore further to understand their distinct applications and performance in advanced machine learning scenarios.

Why it is important

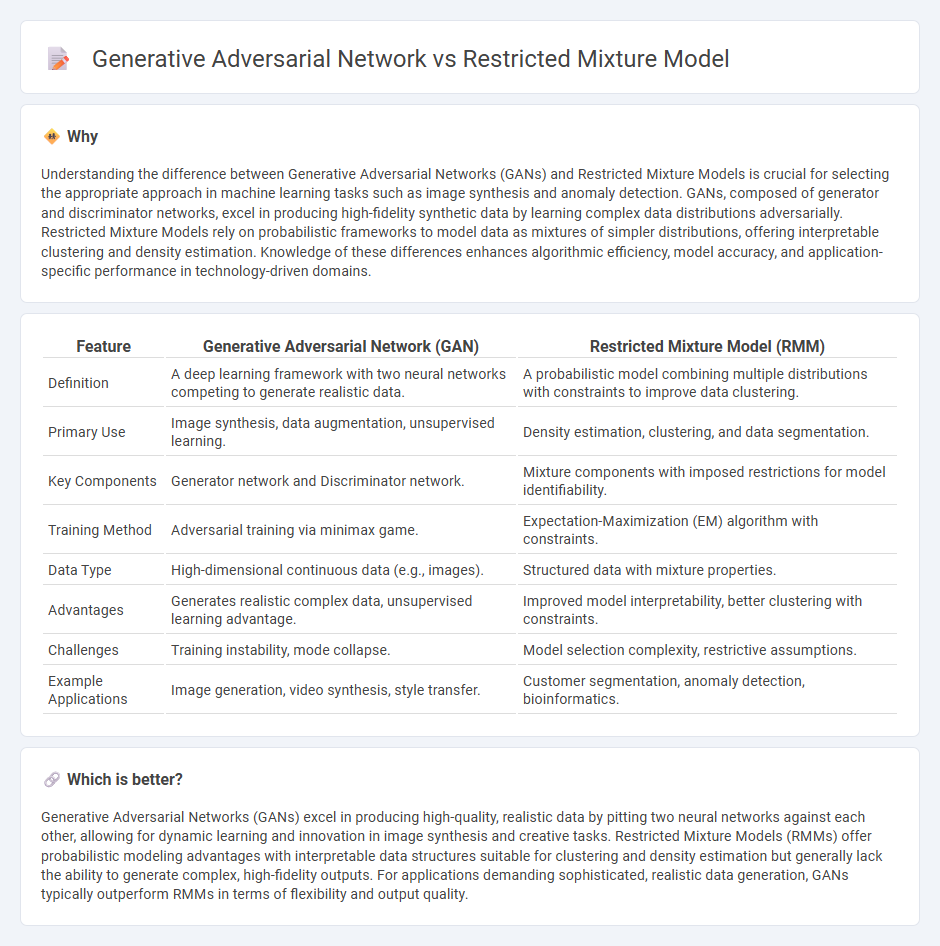

Understanding the difference between Generative Adversarial Networks (GANs) and Restricted Mixture Models is crucial for selecting the appropriate approach in machine learning tasks such as image synthesis and anomaly detection. GANs, composed of generator and discriminator networks, excel in producing high-fidelity synthetic data by learning complex data distributions adversarially. Restricted Mixture Models rely on probabilistic frameworks to model data as mixtures of simpler distributions, offering interpretable clustering and density estimation. Knowledge of these differences enhances algorithmic efficiency, model accuracy, and application-specific performance in technology-driven domains.

Comparison Table

| Feature | Generative Adversarial Network (GAN) | Restricted Mixture Model (RMM) |

|---|---|---|

| Definition | A deep learning framework with two neural networks competing to generate realistic data. | A probabilistic model combining multiple distributions with constraints to improve data clustering. |

| Primary Use | Image synthesis, data augmentation, unsupervised learning. | Density estimation, clustering, and data segmentation. |

| Key Components | Generator network and Discriminator network. | Mixture components with imposed restrictions for model identifiability. |

| Training Method | Adversarial training via minimax game. | Expectation-Maximization (EM) algorithm with constraints. |

| Data Type | High-dimensional continuous data (e.g., images). | Structured data with mixture properties. |

| Advantages | Generates realistic complex data, unsupervised learning advantage. | Improved model interpretability, better clustering with constraints. |

| Challenges | Training instability, mode collapse. | Model selection complexity, restrictive assumptions. |

| Example Applications | Image generation, video synthesis, style transfer. | Customer segmentation, anomaly detection, bioinformatics. |

Which is better?

Generative Adversarial Networks (GANs) excel in producing high-quality, realistic data by pitting two neural networks against each other, allowing for dynamic learning and innovation in image synthesis and creative tasks. Restricted Mixture Models (RMMs) offer probabilistic modeling advantages with interpretable data structures suitable for clustering and density estimation but generally lack the ability to generate complex, high-fidelity outputs. For applications demanding sophisticated, realistic data generation, GANs typically outperform RMMs in terms of flexibility and output quality.

Connection

Generative Adversarial Networks (GANs) and Restricted Mixture Models (RMMs) both focus on probabilistic data generation, where GANs use a game-theoretic approach between generator and discriminator to produce realistic samples. RMMs incorporate constraints into mixture models to enhance component identification and improve data representation, which can complement the adversarial learning process in GANs. Integrating RMMs into GAN frameworks can lead to more structured and interpretable generative models with better control over data distribution complexities.

Key Terms

Probability Distribution

Restricted mixture models estimate complex probability distributions by combining multiple simpler probabilistic components, enabling interpretability and efficient parameter estimation through expectation-maximization algorithms. Generative adversarial networks (GANs) synthesize data by training two neural networks adversarially, implicitly capturing high-dimensional probability distributions without explicit likelihood functions. Explore detailed comparisons to understand their strengths and applications in modeling probability distributions.

Generator-Discriminator

The generator-discriminator dynamics in restricted mixture models (RMM) rely on probabilistic mixture components to capture data distributions, whereas generative adversarial networks (GANs) use a neural network generator to produce synthetic data and a discriminator network to distinguish generated samples from real data. RMMs focus on combining multiple simpler distributions under constraints for better interpretability, while GANs leverage adversarial training to improve data synthesis quality and diversity without explicit density estimation. Explore further distinctions and applications of generator-discriminator mechanisms in advanced generative modeling frameworks.

Latent Variables

Restricted mixture models use latent variables to represent discrete cluster memberships, facilitating probabilistic data segmentation through explicit component assignments. Generative adversarial networks (GANs) utilize latent variables as continuous noise inputs, enabling the generation of realistic synthetic data by learning complex data distributions without explicit latent inference. Explore how the treatment and optimization of latent variables in these models impact their applications in machine learning.

Source and External Links

Implicit Mixtures of Restricted Boltzmann Machines - Describes a mixture model with components as Restricted Boltzmann Machines (RBMs), where mixing proportions are implicitly defined via an energy function rather than explicit parameters, and learning is tractably achieved using contrastive divergence, as demonstrated on MNIST and NORB datasets.

A Note on MAR, Identifying Restrictions, Model Comparison, and ... - Examines identification conditions and restrictions in pattern mixture models for missing data, showing that under monotone dropout, missing-at-random (MAR) conditions are equivalent to specific constraints on model parameters and are as restrictive as missing-completely-at-random (MCAR) under certain scenarios.

Gaussian Mixture Model Explained | Built In - Explains that Gaussian mixture models are used in unsupervised learning for soft clustering, with the expectation-maximization algorithm estimating the probability of each data point belonging to a cluster by iteratively updating model parameters.

dowidth.com

dowidth.com