Generative fill uses neural networks, such as diffusion or transformer models, to complete missing or removed portions of an image so that the new content matches the surrounding context and textures. GAN-based image editing uses generative adversarial networks to manipulate or enhance images. It trains two models, a generator and a discriminator, against each other until the generator's outputs look realistic. This article compares the two technologies, their capabilities, and their applications.

Why it is important

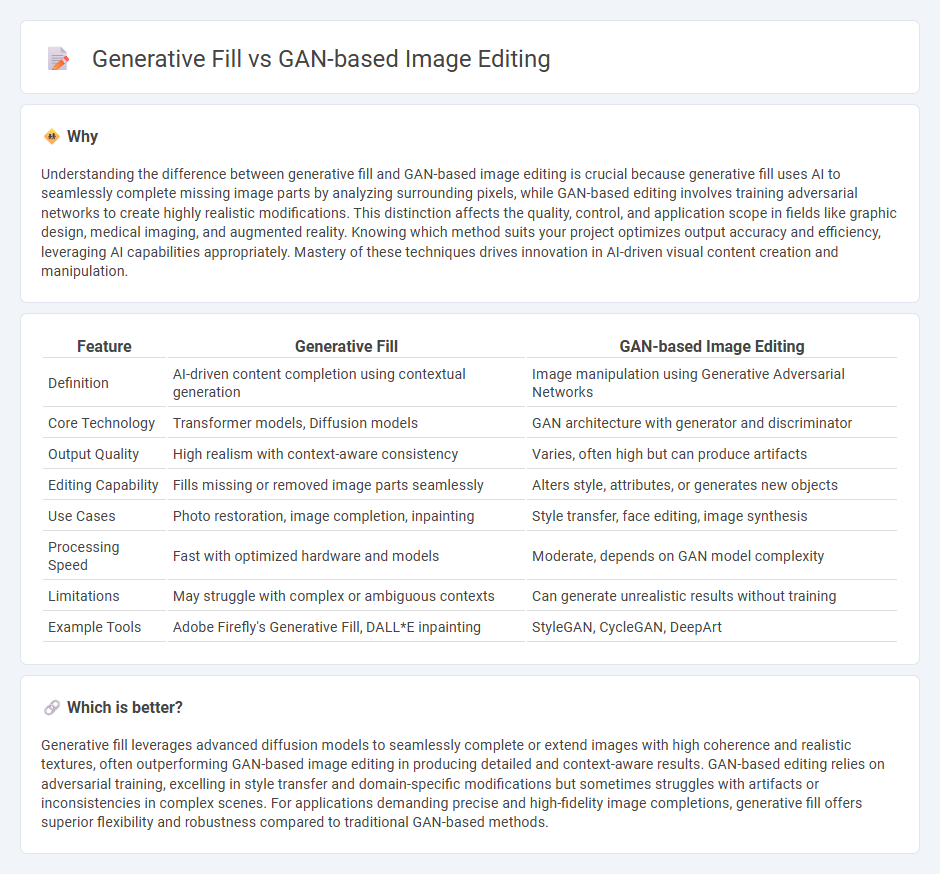

The two methods work differently, so it pays to know which one you are using. Generative fill completes missing image regions by conditioning on the surrounding pixels. GAN-based editing relies on adversarially trained networks to produce realistic modifications. This difference affects output quality, how much control you have, and which applications each method suits in fields such as graphic design, medical imaging, and augmented reality. Choosing the method that fits your project improves both accuracy and efficiency.

Comparison Table

| Feature | Generative Fill | GAN-based Image Editing |
|---|---|---|
| Definition | AI-driven content completion conditioned on the surrounding image | Image manipulation using Generative Adversarial Networks |
| Core Technology | Diffusion models, transformer models | GAN architecture with a generator and a discriminator |
| Output Quality | High realism with context-aware consistency | Varies; often high, but can produce artifacts |
| Editing Capability | Fills missing or removed image regions seamlessly | Alters style or attributes, or generates new objects |
| Use Cases | Photo restoration, image completion, inpainting | Style transfer, face editing, image synthesis |
| Processing Speed | Slower per image: diffusion sampling runs many denoising steps, so fast results depend on optimized hardware and models | Fast once trained: one generator forward pass per image, though editing a real photo may first need a slower latent-inversion step |
| Limitations | May struggle with complex or ambiguous contexts | Needs training on domain-specific data; can produce artifacts or unrealistic results outside that domain |
| Example Tools | Adobe Firefly's Generative Fill, DALL·E inpainting | StyleGAN, CycleGAN, EditGAN |

Which is better?

Generative fill builds on diffusion models to complete or extend images with coherent, realistic textures. It often outperforms GAN-based editing at producing detailed, context-aware results. GAN-based editing relies on adversarial training. It excels at style transfer and domain-specific modifications, but it can produce artifacts or inconsistencies in complex scenes. For applications that demand precise, high-fidelity image completion, generative fill generally offers more flexibility and robustness than traditional GAN-based methods.
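
The two training styles differ in a concrete way. Below is a minimal NumPy sketch of the standard denoising-diffusion (DDPM-style) training objective. Noise is added to a clean image according to a schedule, and a network is trained to predict that noise. The linear schedule values, the toy 8x8 image, and the `zero_predictor` placeholder are assumptions for illustration. A real generative fill model uses a trained neural network, often works in a latent space, and also conditions on the surrounding image.

```python
import numpy as np


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (values assumed, DDPM-style)."""
    return np.linspace(beta_start, beta_end, num_steps)


def q_sample(x0, t, alpha_bar, noise):
    """Forward (noising) process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise


def diffusion_loss(x0, t, alpha_bar, noise, predict_noise):
    """Noise-prediction objective: mean squared error between true and predicted noise."""
    x_t = q_sample(x0, t, alpha_bar, noise)
    eps_hat = predict_noise(x_t, t)
    return float(np.mean((noise - eps_hat) ** 2))


betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)  # fraction of the clean signal kept after t steps

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8, 3))  # toy "image" scaled to [-1, 1]
noise = rng.standard_normal(x0.shape)


def zero_predictor(x_t, t):
    """Hypothetical untrained placeholder that always predicts zero noise."""
    return np.zeros_like(x_t)


loss = diffusion_loss(x0, 500, alpha_bar, noise, zero_predictor)
print(f"alpha_bar at t=999: {alpha_bar[-1]:.2e}, loss: {loss:.3f}")
```

At generation time the model runs this process in reverse over many denoising steps. A trained GAN generator, by contrast, produces an image in a single forward pass. That difference is the main reason diffusion-based fill is usually slower per image.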

Connection

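Generative fill and GAN-based image editing both synthesize new image content that must stay visually coherent with the rest of the picture. Many earlier learning-based inpainting methods trained their generators with an adversarial loss, so generative fill partly builds on GAN research. Current generative fill tools, however, more often rely on diffusion models. A GAN (Generative Adversarial Network) consists of a generator and a discriminator that compete. The discriminator learns to tell real images from generated ones, and the generator learns to fool it. Over iterative training, this competition pushes the generator's outputs toward realism. Both approaches support applications such as photo restoration, content creation, and AI-driven graphic design.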
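
The adversarial setup can be sketched using the discriminator's raw scores (logits). This sketch uses the standard binary cross-entropy formulation with the non-saturating generator loss from the original GAN paper. The logit values are made up. In a real system, both models are neural networks that compute these scores and are updated by gradient descent.

```python
import numpy as np


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return np.logaddexp(0.0, x)


def discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy: push D toward 'real' on real images and 'fake' on generated ones.
    -log(sigmoid(r)) == softplus(-r) and -log(1 - sigmoid(f)) == softplus(f)."""
    return float(np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits)))


def generator_loss(fake_logits):
    """Non-saturating generator loss: -log(sigmoid(f)), i.e. make D call fakes real."""
    return float(np.mean(softplus(-fake_logits)))


# Toy discriminator scores; positive logits mean "looks real".
real_logits = np.array([3.0, 2.5, 4.0])
fake_logits = np.array([-2.0, -1.5, -3.0])

d_loss = discriminator_loss(real_logits, fake_logits)
g_loss = generator_loss(fake_logits)
print(f"D loss: {d_loss:.3f}  G loss: {g_loss:.3f}")
```

Here the discriminator separates real from fake easily, so its loss is low and the generator's loss is high. Training alternates updates that lower each loss in turn. This is the competition that drives the generator's outputs toward realism.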

Key Terms

Latent Space Manipulation

GAN-based image editing uses latent space manipulation. It modifies an image by adjusting the latent vector that the generator decodes. This enables targeted changes, such as a different facial expression or object attribute, while preserving realism. Generative fill also works with learned representations. Latent diffusion models, for example, denoise in a compressed latent space. This lets generative fill synthesize missing regions or extend an image with content drawn from the learned data distribution. In both approaches, latent space manipulation gives finer control over digital content creation.
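
Here is a minimal sketch of latent-direction editing. It assumes a pretrained generator that maps a 512-dimensional latent vector to an image; the `generator` call is hypothetical and left commented out. The attribute direction is estimated as the difference between the mean latents of examples with and without the attribute. That is one simple approach; methods such as InterFaceGAN instead use the normal of a linear classifier's decision boundary. The random latents stand in for labeled samples.

```python
import numpy as np

rng = np.random.default_rng(42)
LATENT_DIM = 512  # assumed latent size (StyleGAN-like)

# Stand-ins for latent codes whose generated images do / do not show an attribute
# (e.g. "smiling"). In practice these come from sampling the GAN and labeling outputs.
z_with = rng.standard_normal((200, LATENT_DIM)) + 0.5
z_without = rng.standard_normal((200, LATENT_DIM))


def attribute_direction(z_pos, z_neg):
    """Unit vector pointing from the mean 'without' code to the mean 'with' code."""
    d = z_pos.mean(axis=0) - z_neg.mean(axis=0)
    return d / np.linalg.norm(d)


def edit_latent(z, direction, strength):
    """Move a latent code along the attribute direction: z' = z + strength * d."""
    return z + strength * direction


direction = attribute_direction(z_with, z_without)
z = rng.standard_normal(LATENT_DIM)
z_edited = edit_latent(z, direction, strength=3.0)
# image = generator(z_edited)  # hypothetical pretrained generator call

print(f"shift along attribute direction: {(z_edited - z) @ direction:.2f}")
```

The `strength` parameter controls how far the edit goes. Negative values move away from the attribute. Because the rest of the latent code is unchanged, the other image features tend to be preserved.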

Inpainting Algorithms

GAN-based inpainting trains a generator and a discriminator together. The generator fills the missing region, and the discriminator penalizes fills it can tell apart from real content. This pushes the inpainted regions toward realism. Generative fill extends inpainting with transformer architectures and large-scale diffusion models, which predict missing content with greater accuracy and consistency.
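
Whichever model proposes the missing pixels, inpainting pipelines commonly composite the prediction back into the original so that only the hole changes. GAN-based inpainting also typically pairs its adversarial loss with a reconstruction loss over the hole. The NumPy sketch below shows both pieces. The mask convention (1 = missing) and the mean-colour placeholder predictor are assumptions; they stand in for a trained generator or diffusion sampler.

```python
import numpy as np


def composite(original, predicted, mask):
    """Blend so known pixels come from the original and hole pixels from the prediction.
    original, predicted: (H, W, C) arrays; mask: (H, W), 1.0 = missing, 0.0 = known."""
    m = mask[..., None]  # add a channel axis so the mask broadcasts over colour channels
    return m * predicted + (1.0 - m) * original


def hole_l1_loss(predicted, target, mask):
    """Mean absolute error measured only inside the missing region."""
    m = np.broadcast_to(mask[..., None], target.shape)
    return float(np.abs(predicted - target)[m > 0].mean())


rng = np.random.default_rng(1)
original = rng.uniform(0.0, 1.0, size=(4, 4, 3))
mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1.0  # a 2x2 hole in the middle

# Hypothetical placeholder "model": fill the hole with the mean colour of known pixels.
known_mean = original[mask == 0].mean(axis=0)
predicted = np.broadcast_to(known_mean, original.shape)

result = composite(original, predicted, mask)
print(f"L1 error inside the hole: {hole_l1_loss(result, original, mask):.3f}")
```

Diffusion-based inpainting methods such as RePaint apply a similar mask blend at every denoising step, rather than only once at the end.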

Conditional Generation

GAN-based image editing uses conditional generation to manipulate images. The generator learns a mapping from input conditions, such as class labels, sketches, or reference images, to visual outputs. This gives precise control over features like style, texture, or objects. Generative fill applies conditional generation by conditioning on the context around a missing region, so the completed or replaced content stays coherent and realistic. Conditioning is the common mechanism that makes edits targeted and controllable in both methods.
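
Two common ways to pass a condition into a generator are sketched below. The first concatenates a one-hot class label with the noise vector, as in class-conditional GANs. The second stacks the masked image with its mask, so an inpainting model can see the surrounding context. Exact input layouts vary by model. The shapes here (100-dimensional noise, 10 classes, an 8x8 RGB image) are assumptions for illustration.

```python
import numpy as np


def class_conditional_input(z, label, num_classes):
    """cGAN-style conditioning: concatenate the noise vector with a one-hot class label."""
    one_hot = np.zeros(num_classes)
    one_hot[label] = 1.0
    return np.concatenate([z, one_hot])


def inpainting_conditional_input(image, mask):
    """Context conditioning: zero out the hole and append the mask as an extra channel.
    image: (H, W, 3) in [0, 1]; mask: (H, W), 1.0 marks missing pixels."""
    masked = image * (1.0 - mask[..., None])
    return np.concatenate([masked, mask[..., None]], axis=-1)


rng = np.random.default_rng(7)
z = rng.standard_normal(100)
g_in = class_conditional_input(z, label=3, num_classes=10)  # shape (110,)

image = rng.uniform(0.0, 1.0, size=(8, 8, 3))
mask = np.zeros((8, 8))
mask[2:6, 2:6] = 1.0
ctx = inpainting_conditional_input(image, mask)  # shape (8, 8, 4)
print(g_in.shape, ctx.shape)
```

Passing the mask explicitly tells the model which zeros are missing pixels and which are genuinely dark pixels in the image.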


dowidth.com
