Diffusion models generate high-quality images by iteratively refining random noise through a learned, probabilistic denoising process. Convolutional Neural Networks (CNNs), by contrast, apply stacked layers of learned filters to extract spatial features for tasks such as image recognition. CNNs excel at processing grid-structured data with strong spatial hierarchies, while diffusion models offer strong generative capabilities in image synthesis and denoising. The two are not mutually exclusive: a diffusion model is a generative procedure, a CNN is a network architecture, and many diffusion models use a convolutional network as their denoiser. The sections below compare their architectures and applications.

Why it is important

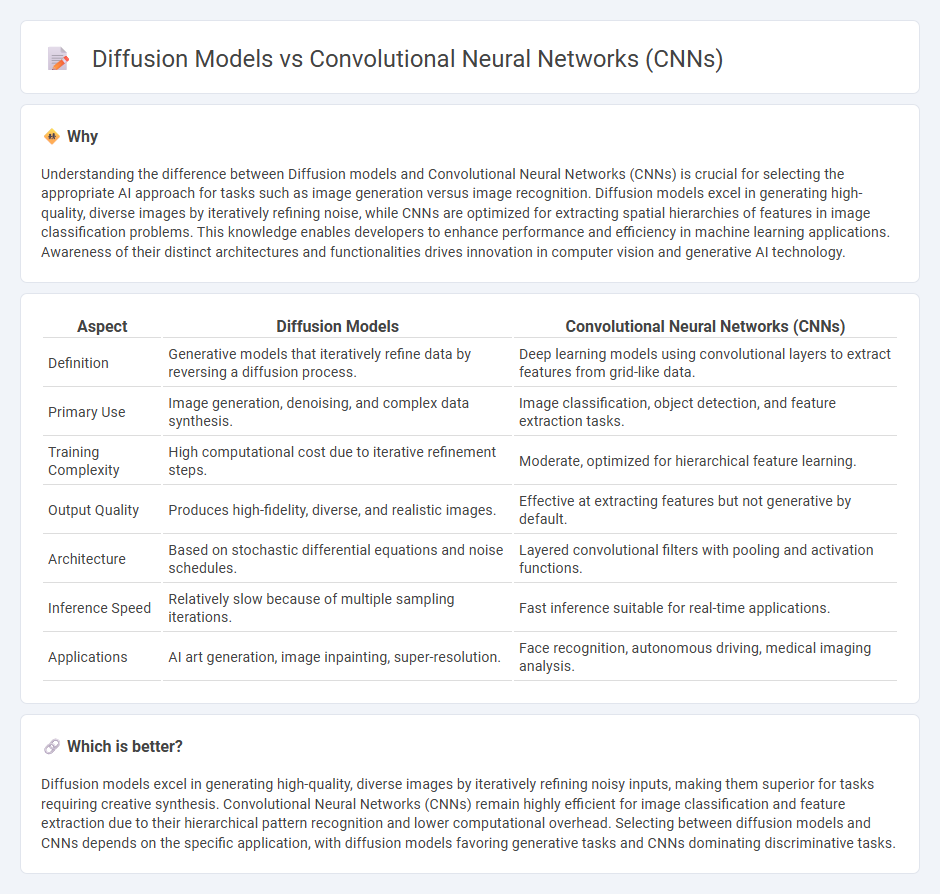

Understanding the difference between diffusion models and Convolutional Neural Networks (CNNs) is crucial for selecting the appropriate approach for a task such as image generation versus image recognition. Diffusion models excel at generating high-quality, diverse images by iteratively refining noise, while CNNs are optimized for extracting spatial hierarchies of features in image classification problems. Knowing which one fits the task helps developers avoid paying the sampling cost of a generative model where a single forward pass would do, and avoid expecting a classifier to synthesize images.

Comparison Table

| Aspect | Diffusion Models | Convolutional Neural Networks (CNNs) |
|---|---|---|
| Definition | Generative models that iteratively refine data by reversing a diffusion (noising) process. | Deep learning models using convolutional layers to extract features from grid-like data. |
| Primary Use | Image generation, denoising, and complex data synthesis. | Image classification, object detection, and feature extraction tasks. |
| Training Complexity | High computational cost; the denoiser must be trained across many noise levels. | Moderate, optimized for hierarchical feature learning. |
| Output Quality | Produces high-fidelity, diverse, and realistic images. | Effective at extracting features but not generative by default. |
| Architecture | A denoising network (commonly a U-Net, often built from convolutional layers) combined with a noise schedule; formulated as a Markov chain or stochastic differential equation. | Layered convolutional filters with pooling and activation functions. |
| Inference Speed | Relatively slow because of multiple sampling iterations. | Fast inference suitable for real-time applications. |
| Applications | AI art generation, image inpainting, super-resolution. | Face recognition, autonomous driving, medical imaging analysis. |

Which is better?

Neither is better in general; they solve different problems. Diffusion models excel at generating high-quality, diverse images by iteratively refining noisy inputs, which makes them the stronger choice for tasks requiring creative synthesis. CNNs remain highly efficient for image classification and feature extraction because of their hierarchical pattern recognition and lower computational overhead: one forward pass per image rather than many sampling steps. The choice depends on the application, with diffusion models favored for generative tasks and CNNs for discriminative tasks.
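The difference in computational overhead can be made concrete by counting network evaluations. The sketch below is illustrative only: `CountingNetwork` is a hypothetical stand-in that does no real computation, and the figure of 50 sampling steps is an assumed value, not a property of any particular model.

```python
class CountingNetwork:
    """Hypothetical stand-in for a neural network; it only counts calls."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return x  # identity: no real computation is modelled here


def classify(network, image):
    """A discriminative CNN needs one forward pass per image."""
    return network(image)


def sample(network, noise, num_steps):
    """A diffusion sampler calls its denoiser once per sampling step."""
    x = noise
    for _ in range(num_steps):
        x = network(x)
    return x


cnn = CountingNetwork()
classify(cnn, [0.0, 1.0])

denoiser = CountingNetwork()
sample(denoiser, [0.0, 1.0], num_steps=50)  # 50 steps is an assumed value

print(cnn.calls, denoiser.calls)  # 1 50
```

If the two networks were of similar size, inference cost would scale roughly with the number of calls, which is why sampling from a diffusion model is usually much slower than classifying an image.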

Connection

Diffusion models and CNNs are complementary rather than competing. A diffusion model defines an iterative procedure for turning noise into data, and at every step of that procedure it needs a network that estimates the noise to remove. That network is frequently convolutional (a U-Net is a common choice) because convolutional layers capture the local and hierarchical patterns in visual data that the denoising step relies on. Using CNN architectures inside diffusion frameworks in this way supports applications such as image generation, restoration, and denoising.
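To see why convolution is a natural building block for a denoiser, the following minimal sketch applies a single fixed smoothing kernel to a noisy 1D signal. It is only an analogy: the kernel here is hand-picked (an assumption for illustration), whereas the denoiser in a real diffusion model is a deep network with learned kernels that is also conditioned on the noise level.

```python
import random


def conv1d_same(signal, kernel):
    """1D convolution with zero padding so the output length matches the input."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(padded[i + k] * kernel[k] for k in range(len(kernel)))
        for i in range(len(signal))
    ]


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
clean = [1.0] * 200                                # constant "image row"
noisy = [v + rng.gauss(0.0, 0.5) for v in clean]   # add Gaussian noise

kernel = [0.2] * 5                                 # hand-picked averaging filter
denoised = conv1d_same(noisy, kernel)

# Compare the interior only; the two samples at each edge see zero padding.
interior = slice(2, -2)
error_before = mean_squared_error(noisy[interior], clean[interior])
error_after = mean_squared_error(denoised[interior], clean[interior])
print(error_before, error_after)
```

Averaging five neighbours reduces the noise in the interior of the signal, so `error_after` comes out smaller than `error_before`. A learned convolutional denoiser does something far more selective, but it is built from the same operation.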

Key Terms

Feature Extraction

Convolutional Neural Networks (CNNs) excel at feature extraction: each convolutional layer slides small learned filters over the image to detect local patterns, and stacking layers builds a spatial hierarchy from edges to textures to object parts. This makes them highly effective for tasks like object recognition and image classification. Diffusion models are not designed as feature extractors. They generate data by reversing a noising process, and whatever features their denoising network computes are intermediate values used to predict noise rather than outputs intended for downstream recognition.
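A single convolutional filter can be sketched in a few lines. The example below is a minimal illustration in plain Python, assuming a hand-picked vertical-edge kernel; in a trained CNN the kernel values are learned from data, and frameworks compute this operation far more efficiently.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Element-wise activation: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# 4x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge detector (an assumption; a CNN would learn this).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = relu(conv2d(image, kernel))
print(feature_map)  # [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The feature map is non-zero only where the window straddles the dark-to-bright boundary, which is the sense in which a filter "extracts" a local pattern.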

Generative Modeling

A CNN is an architecture rather than a generative model in itself, but its stacked convolutional layers capture spatial hierarchies efficiently, so CNNs serve as the backbone of many generative systems, including GAN generators, variational autoencoders, and style transfer networks. Diffusion models are a generative framework: they learn to reverse a gradual noising process and generate high-quality, diverse samples by modeling complex data distributions, often surpassing earlier CNN-based generative approaches such as GANs in photorealism and sample diversity.
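The noising process that a diffusion model learns to reverse can be sampled in closed form. The sketch below follows the common DDPM-style formulation, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, and assumes a linear noise schedule with 1000 steps; the schedule values, function names, and toy data are illustrative choices, not something the article specifies.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t given x_0 in one shot; also return the noise that was added."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal_scale * v + noise_scale * e for v, e in zip(x0, noise)]
    return xt, noise


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

x0 = [1.0, -1.0, 0.5, 0.0]                            # toy "image" of four pixels
x_early, _ = add_noise(x0, 0, alpha_bars, rng)        # almost unchanged
x_late, noise = add_noise(x0, 999, alpha_bars, rng)   # almost pure noise
print(alpha_bars[0], alpha_bars[-1])
```

During training, the denoising network would typically be shown `x_late`-style inputs at random timesteps and asked to predict `noise`; generation then runs the process in the opposite direction.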

Image Synthesis

CNN-based generators synthesize images by exploiting hierarchical feature extraction and spatial locality, typically producing a high-resolution image with detailed textures in a single forward pass. Diffusion models, based on iterative denoising, have more recently gained prominence for producing highly diverse and realistic images through probabilistic sampling, and they tend to cover complex data distributions better than single-pass CNN generators. The trade-off is speed: each sample requires many denoising steps, and the network evaluated at each step is itself often convolutional.
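The iterative denoising loop can be sketched as DDPM-style ancestral sampling. To keep the example runnable without training, it assumes a toy data distribution consisting of a single point, for which the ideal noise prediction has a closed form; `oracle_noise_predictor` is a hypothetical stand-in for the trained (often convolutional) network, and the linear schedule is likewise an assumption.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear betas and their cumulative products alpha_bar (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return betas, alpha_bars


def oracle_noise_predictor(target):
    """Stand-in for a trained denoiser when all training data equals `target`."""
    def predict(xt, alpha_bar):
        scale = math.sqrt(alpha_bar)
        sigma = math.sqrt(1.0 - alpha_bar)
        return [(x - scale * m) / sigma for x, m in zip(xt, target)]
    return predict


def ddpm_sample(predict_noise, dim, betas, alpha_bars, rng):
    """Start from Gaussian noise and denoise step by step."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(len(betas))):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps = predict_noise(x, alpha_bar)
        coef = beta / math.sqrt(1.0 - alpha_bar)
        mean = [(xi - coef * e) / math.sqrt(1.0 - beta) for xi, e in zip(x, eps)]
        if t > 0:
            # Re-inject a little noise on every step except the last.
            x = [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


target = [1.0, -1.0, 0.5, 0.0]
betas, alpha_bars = make_schedule(1000)
sample = ddpm_sample(oracle_noise_predictor(target), len(target),
                     betas, alpha_bars, random.Random(0))
print(sample)
```

Because the toy distribution contains only one point, every sample collapses onto `target`. A network trained on a real image dataset would replace the oracle, and the same loop would then yield diverse images.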
