Synthetic media detection analyzes visual, audio, and textual content to identify manipulated or AI-generated material, typically with machine learning classifiers trained to separate authentic from fabricated content. Adversarial attacks target those same classifiers: an attacker makes small, deliberate changes to an input so that the model misclassifies it, which makes media authenticity harder to verify. This article compares the two concepts and explains how they interact.

Why it is important

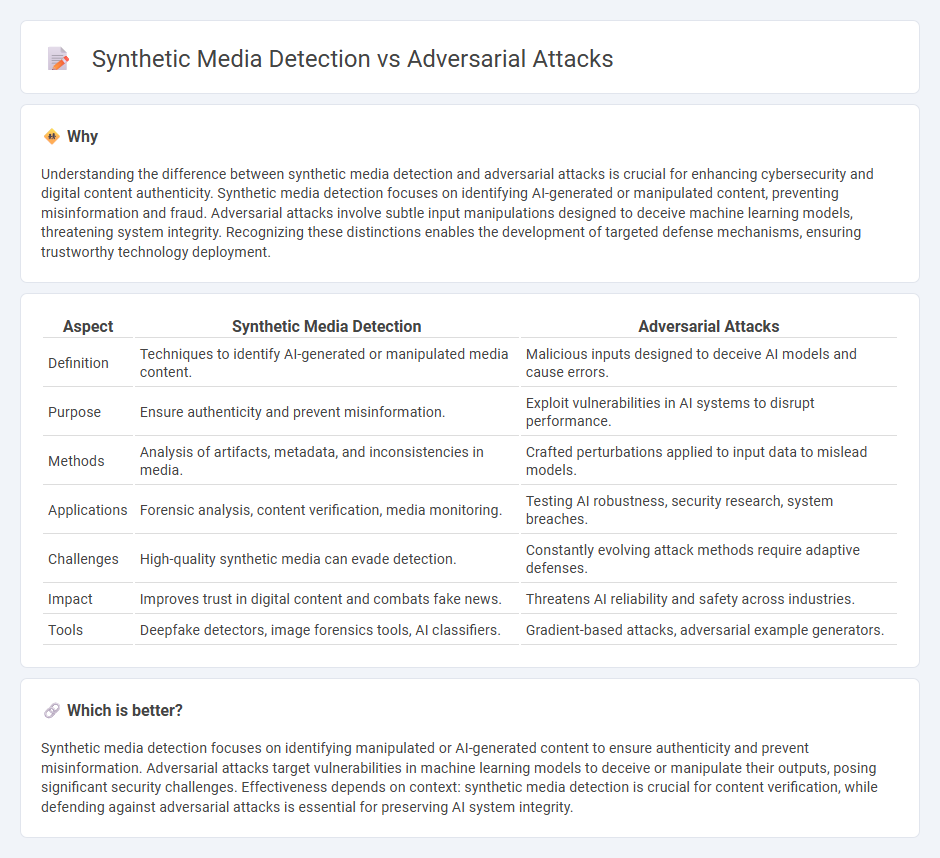

Synthetic media detection and adversarial attacks address different problems, and confusing them leaves gaps in both cybersecurity and content verification. Detection identifies AI-generated or manipulated content in order to limit misinformation and fraud. Adversarial attacks are small, deliberate input manipulations that cause a machine learning model to produce wrong outputs, which threatens the integrity of any system built on that model. Keeping the two distinct makes it possible to design defenses targeted at each.

Comparison Table

| Aspect | Synthetic Media Detection | Adversarial Attacks |
|---|---|---|
| Definition | Techniques to identify AI-generated or manipulated media content. | Malicious inputs designed to deceive AI models and cause errors. |
| Purpose | Ensure authenticity and prevent misinformation. | Exploit vulnerabilities in AI systems to disrupt performance. |
| Methods | Analysis of artifacts, metadata, and inconsistencies in media. | Crafted perturbations applied to input data to mislead models. |
| Applications | Forensic analysis, content verification, media monitoring. | Testing AI robustness, security research, system breaches. |
| Challenges | High-quality synthetic media can evade detection. | Constantly evolving attack methods require adaptive defenses. |
| Impact | Improves trust in digital content and combats fake news. | Threatens AI reliability and safety across industries. |
| Tools | Deepfake detectors, image forensics tools, AI classifiers. | Gradient-based attacks, adversarial example generators. |

Which is better?

Neither is "better", because the two are not competing options. Synthetic media detection is a defensive task: it identifies manipulated or AI-generated content to ensure authenticity and prevent misinformation. Adversarial attacks are a threat: they target vulnerabilities in machine learning models, including detectors, to manipulate their outputs. Which one deserves attention depends on context. Detection matters most where content must be verified, while defending against adversarial attacks matters wherever a model's outputs must stay reliable under deliberate manipulation.

Connection

Synthetic media detection relies on identifying manipulated or AI-generated content, which becomes increasingly challenging due to adversarial attacks designed to deceive detection algorithms. Adversarial attacks exploit vulnerabilities in machine learning models by introducing subtle perturbations that cause synthetic media detectors to misclassify or fail entirely. Enhancing robustness against these attacks is crucial for improving the reliability of synthetic media detection systems in fields such as cybersecurity and digital forensics.
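To make "subtle perturbations" concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one well-known gradient-based attack, applied to a toy logistic-regression detector. The weights, the three-feature input, and the step size `eps` are hypothetical values chosen for illustration; real detectors are deep networks, and the same idea is applied there with automatic differentiation.

```python
import math

def predict_fake_prob(w, b, x):
    """Probability that feature vector x is synthetic under a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(g):
    """Return -1, 0, or 1 depending on the sign of g."""
    return (g > 0) - (g < 0)

def fgsm(w, b, x, y, eps):
    """One FGSM step: move each feature by eps in the direction that raises the loss.

    For logistic regression with cross-entropy loss, dLoss/dx = (p - y) * w.
    """
    p = predict_fake_prob(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical detector weights and a sample that is truly synthetic (y = 1).
w, b = [2.0, -1.0, 0.5], -0.2
x = [0.5, 0.2, 0.4]

x_adv = fgsm(w, b, x, y=1, eps=0.3)
p_clean = predict_fake_prob(w, b, x)
p_adv = predict_fake_prob(w, b, x_adv)
print(f"clean score: {p_clean:.3f}, adversarial score: {p_adv:.3f}")
```

In this toy setup the clean sample scores above the 0.5 decision threshold and the perturbed one falls below it, even though no feature moved by more than `eps`.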

Key Terms

Deepfake

A deepfake is synthetic media in which a person's face, voice, or actions are generated or altered with deep learning. Deepfake detectors are usually neural networks, and like other neural networks they are sensitive to adversarial inputs: subtle perturbations added to a deepfake can cause the detector to classify it as authentic. Defenses such as adversarial training and anomaly detection are therefore needed to keep deepfake detection reliable.
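Adversarial training, one of the defenses named above, can be sketched as follows: at each training step the model is also updated on an adversarial copy of the sample, crafted against its current weights. This is a simplified illustration on a logistic-regression model with FGSM as the attack; the four-sample dataset, learning rate, and `eps` are assumptions made for the example, not values from any real deepfake detector.

```python
import math

def predict(w, b, x):
    """Probability that x is synthetic under a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]  # dLoss/dx for cross-entropy loss
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def adversarial_train(data, eps, lr=0.5, epochs=50):
    """SGD on each clean sample plus an FGSM copy crafted against the current weights."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)
            for xs in (x, x_adv):
                err = y - predict(w, b, xs)
                w = [wi + lr * err * xi for wi, xi in zip(w, xs)]
                b += lr * err
    return w, b

def accuracy(w, b, data, eps=0.0):
    """Accuracy on the data, optionally after an FGSM attack of size eps."""
    hits = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        hits += int((predict(w, b, x_eval) > 0.5) == (y == 1))
    return hits / len(data)

# Hypothetical two-feature dataset: label 1 = synthetic, 0 = authentic.
data = [([1.0, 0.8], 1), ([0.7, 1.1], 1), ([-0.9, -1.0], 0), ([-1.2, -0.6], 0)]

w, b = adversarial_train(data, eps=0.3)
clean_acc = accuracy(w, b, data)
robust_acc = accuracy(w, b, data, eps=0.3)
print(f"clean accuracy: {clean_acc:.2f}, accuracy under FGSM: {robust_acc:.2f}")
```

The dataset here is deliberately easy to separate, so the sketch shows the training loop rather than a realistic robustness gain. In practice adversarial training often trades some clean accuracy for robustness.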

Robustness

Robustness is a model's ability to keep producing correct outputs when its inputs are deliberately perturbed. Adversarial attacks test this property in synthetic media detectors by introducing subtle perturbations that cause misclassification. A robust detector must resist these manipulations while still performing well on genuine, unperturbed data.
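One common way to quantify robustness is to compare clean accuracy with accuracy under a bounded perturbation. The sketch below does this for a linear detector, where the worst case has a closed form: a perturbation of at most `eps` per feature can reduce a sample's margin by at most `eps` times the L1 norm of the weights. The weights and samples are hypothetical, and the closed form holds only for linear models; for deep networks robust accuracy is usually estimated by running attacks.

```python
def score(w, b, x):
    """Linear detector score: positive means 'synthetic', negative means 'authentic'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def robust_accuracy(w, b, samples, eps):
    """Fraction of samples still classified correctly under the worst-case
    perturbation that changes each feature by at most eps."""
    l1_norm = sum(abs(wi) for wi in w)
    correct = 0
    for x, y in samples:
        # Signed margin: positive when the clean sample is classified correctly.
        margin = score(w, b, x) if y == 1 else -score(w, b, x)
        if margin - eps * l1_norm > 0:
            correct += 1
    return correct / len(samples)

# Hypothetical detector and labeled samples (1 = synthetic, 0 = authentic).
w, b = [2.0, -1.0, 0.5], -0.2
samples = [
    ([0.9, 0.1, 0.4], 1),
    ([0.5, 0.2, 0.4], 1),
    ([0.1, 0.6, 0.2], 0),
    ([0.0, 0.3, 0.1], 0),
]

for eps in (0.0, 0.2, 0.3):
    print(f"eps={eps}: accuracy={robust_accuracy(w, b, samples, eps):.2f}")
```

With `eps=0.0` this reduces to ordinary accuracy, so the same function reports both numbers and makes the gap between them easy to track as `eps` grows.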

Machine Learning Explainability

Machine learning explainability covers techniques that make a model's decisions interpretable, showing which parts of an input drove a given output. For synthetic media detectors this serves two purposes: it lets analysts check that a detection rests on meaningful evidence, and it highlights the features a model depends on most, which are often the same weaknesses adversarial perturbations target.
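A simple explainability technique is gradient-based saliency: rank input features by how strongly the detector's output changes when each one changes. The sketch below computes this for a toy logistic-regression detector. The feature names, weights, and input are hypothetical and only illustrate the idea; for image detectors the same gradient is usually computed per pixel with an autodiff framework.

```python
import math

def input_gradient(w, b, x):
    """Gradient of the 'synthetic' probability with respect to each input feature.

    For a logistic model p = sigmoid(w.x + b), dp/dx_i = p * (1 - p) * w_i.
    """
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [p * (1.0 - p) * wi for wi in w]

# Hypothetical feature names, detector weights, and input sample.
feature_names = ["blending_artifact", "blink_rate", "compression_noise"]
w, b = [2.0, -1.0, 0.5], -0.2
x = [0.5, 0.2, 0.4]

grad = input_gradient(w, b, x)
# Sort features from most to least influential on the output.
ranking = sorted(zip(feature_names, grad), key=lambda item: abs(item[1]), reverse=True)
for name, g in ranking:
    print(f"{name}: {g:+.3f}")
```

The features at the top of the ranking are the ones where a small change moves the score the most, which is also where a gradient-based attack would concentrate its perturbation.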

Source and External Links

What Is Adversarial AI in Machine Learning? - Palo Alto Networks - Adversarial attacks are carefully crafted manipulations of input data designed to deceive AI systems, causing them to make incorrect predictions or decisions by exploiting model vulnerabilities, often through steps like understanding the target, creating adversarial inputs, and then exploiting the system.

Attack Methods: What Is Adversarial Machine Learning? - Viso Suite - Adversarial attacks aim to fool machine learning models by introducing malicious inputs or poisoning training data, classified mainly as poisoning, evasion, or model extraction attacks to degrade model performance or steal sensitive information.

Adversarial Attack: Definition and protection against this threat - Adversarial attacks manipulate AI models with data altered to mislead systems, demonstrated through examples like 3D-printed objects impacting image recognition and patterns that deceive facial recognition, highlighting the broad vulnerability of AI applications.
