Diffusion models generate data by iteratively refining random noise to produce high-quality images, while transformers utilize attention mechanisms to process information sequentially for tasks like natural language processing and image recognition. Both architectures have revolutionized AI applications, with diffusion models excelling in image synthesis and transformers dominating language understanding and generation. Explore deeper to understand their unique strengths and applications.

Why it is important

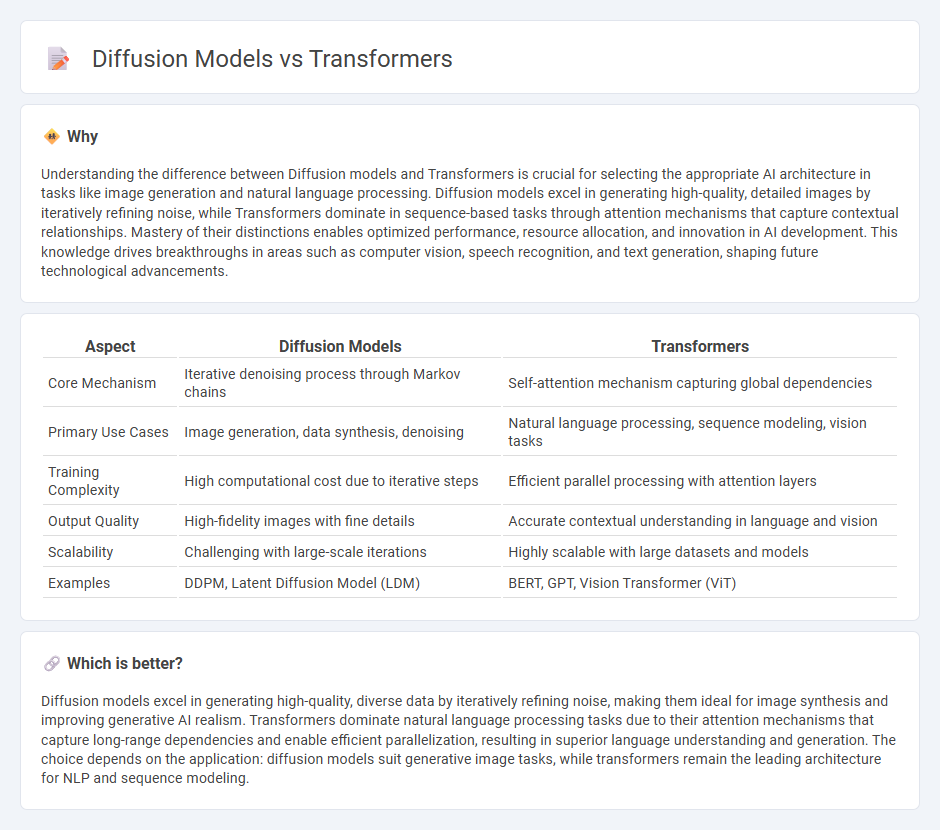

Understanding the difference between Diffusion models and Transformers is crucial for selecting the appropriate AI architecture in tasks like image generation and natural language processing. Diffusion models excel in generating high-quality, detailed images by iteratively refining noise, while Transformers dominate in sequence-based tasks through attention mechanisms that capture contextual relationships. Mastery of their distinctions enables optimized performance, resource allocation, and innovation in AI development. This knowledge drives breakthroughs in areas such as computer vision, speech recognition, and text generation, shaping future technological advancements.

Comparison Table

| Aspect | Diffusion Models | Transformers |

|---|---|---|

| Core Mechanism | Iterative denoising process through Markov chains | Self-attention mechanism capturing global dependencies |

| Primary Use Cases | Image generation, data synthesis, denoising | Natural language processing, sequence modeling, vision tasks |

| Training Complexity | High computational cost due to iterative steps | Efficient parallel processing with attention layers |

| Output Quality | High-fidelity images with fine details | Accurate contextual understanding in language and vision |

| Scalability | Challenging with large-scale iterations | Highly scalable with large datasets and models |

| Examples | DDPM, Latent Diffusion Model (LDM) | BERT, GPT, Vision Transformer (ViT) |

Which is better?

Diffusion models excel in generating high-quality, diverse data by iteratively refining noise, making them ideal for image synthesis and improving generative AI realism. Transformers dominate natural language processing tasks due to their attention mechanisms that capture long-range dependencies and enable efficient parallelization, resulting in superior language understanding and generation. The choice depends on the application: diffusion models suit generative image tasks, while transformers remain the leading architecture for NLP and sequence modeling.

Connection

Diffusion models generate data by progressively refining random noise, while Transformers excel in capturing long-range dependencies through self-attention mechanisms. Combining these approaches enhances image and signal generation by leveraging Transformers' ability to model complex patterns within the iterative denoising process of diffusion models. This synergy improves the quality and coherence of generated outputs in applications like text-to-image synthesis and audio generation.

Key Terms

Attention Mechanism

Transformers leverage a self-attention mechanism that computes pairwise relationships between all input tokens, enabling efficient context-awareness and parallel processing in natural language and vision tasks. Diffusion models incorporate attention to focus on relevant features while iteratively denoising data through stochastic processes, enhancing generative capabilities in image synthesis. Explore further to understand how attention shapes the strengths of these models in diverse AI applications.

Latent Space

Transformers leverage attention mechanisms to model dependencies in latent space through positional embeddings and self-attention layers, enabling efficient sequence representation and long-range context capture. Diffusion models iteratively refine latent representations by reversing a noise process, effectively learning complex data distributions within latent space for high-quality generative outputs. Explore deeper insights into latent space manipulation and model performance comparisons for advanced generative modeling techniques.

Denoising Process

Transformers utilize self-attention mechanisms to model long-range dependencies, enabling effective denoising by capturing contextual relationships across entire data sequences. Diffusion models iteratively remove noise through a Markov chain, reconstructing data by reversing a gradual noising process guided by learned denoising score functions. Explore in-depth comparisons of these methods and their denoising capabilities to deepen your understanding of modern generative modeling techniques.

Source and External Links

Transformers - Transformers is a media franchise created by Hasbro and Takara Tomy focusing primarily on the conflict between the heroic Autobots and evil Decepticons, featuring various storylines including the fusion of human "Kiss Players" with robots in later continuities.

Transformers (film series) - The live-action Transformers film series, directed by Michael Bay, began in 2007 and follows the battle on Earth between Autobots and Decepticons, with Steven Spielberg as executive producer and significant input on the story focusing on a human perspective.

Transformers (2007) - IMDb - The first live-action Transformers movie depicts the ancient struggle between Autobots and Decepticons coming to Earth, featuring a teenager as key to the ultimate power, with a cast including Shia LaBeouf and Megan Fox.

dowidth.com

dowidth.com