Neuromorphic chips mimic the brain's neural architecture to make cognitive computing more efficient, offering lower power consumption and real-time learning capabilities compared with traditional processors. Tensor Processing Units (TPUs) are specialized accelerators for machine learning workloads, delivering high throughput for neural network training and inference. This article compares how the two technologies trade off artificial intelligence performance against energy efficiency.

Why it is important

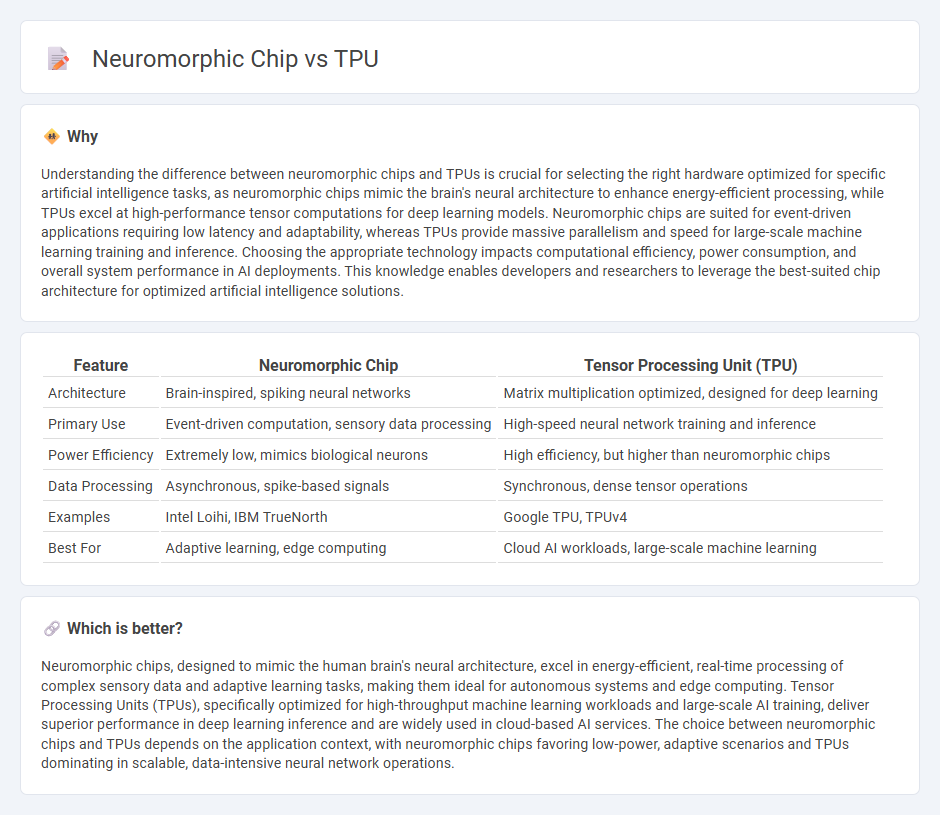

Understanding the difference between neuromorphic chips and TPUs is crucial for selecting hardware suited to a given artificial intelligence task. Neuromorphic chips mimic the brain's neural architecture for energy-efficient, event-driven processing, which suits applications that need low latency and adaptability. TPUs excel at high-performance tensor computations and provide the massive parallelism needed for large-scale deep learning training and inference. The choice affects computational efficiency, power consumption, and overall system performance, so knowing these trade-offs helps developers and researchers pick the architecture that best fits their AI workload.

Comparison Table

| Feature | Neuromorphic Chip | Tensor Processing Unit (TPU) |
|---|---|---|
| Architecture | Brain-inspired, runs spiking neural networks | Optimized for matrix multiplication, designed for deep learning |
| Primary Use | Event-driven computation, sensory data processing | High-speed neural network training and inference |
| Power Consumption | Extremely low; activity is sparse and spike-driven, like biological neurons | Higher than neuromorphic chips, but high performance per watt on dense workloads |
| Data Processing | Asynchronous, spike-based signals | Synchronous, dense tensor operations |
| Examples | Intel Loihi, IBM TrueNorth | Google Cloud TPU generations (e.g., TPU v4) |
| Best For | Adaptive learning, edge computing | Cloud AI workloads, large-scale machine learning |
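
The "spike-based signals" in the table can be made concrete with a leaky integrate-and-fire (LIF) neuron, a common building block of spiking neural networks. The sketch below is a minimal discrete-time simulation; the function name `lif_neuron` and the values of `tau`, `v_thresh`, and `v_reset` are illustrative assumptions, not parameters of Loihi, TrueNorth, or any other chip, and real neuromorphic hardware may update neurons asynchronously rather than on a global clock.

```python
def lif_neuron(input_current, tau=20.0, v_thresh=1.0, v_reset=0.0, dt=1.0):
    """Simulate a leaky integrate-and-fire neuron and return its spike times.

    input_current: one input value per time step (arbitrary units).
    Parameter defaults are illustrative assumptions, not hardware values.
    """
    v = v_reset          # membrane potential starts at the reset/rest value
    spikes = []
    for t, i_in in enumerate(input_current):
        # Leak toward the rest potential and integrate the input (forward Euler step).
        v += dt * (-(v - v_reset) / tau + i_in)
        if v >= v_thresh:
            spikes.append(t)  # emit a spike event at this time step
            v = v_reset       # reset the membrane potential after firing
    return spikes


if __name__ == "__main__":
    print(lif_neuron([0.2] * 12))
```

With a constant input of 0.2 per step, the neuron integrates for several steps, fires, resets, and repeats, so `lif_neuron([0.2] * 12)` returns `[5, 11]`. Its output is a list of spike times rather than a dense activation value, which is the key difference from the tensors a TPU processes.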

Which is better?

Neuromorphic chips, designed to mimic the human brain's neural architecture, excel at energy-efficient, real-time processing of complex sensory data and at adaptive learning tasks, which makes them a good fit for autonomous systems and edge computing. TPUs, optimized for high-throughput machine learning workloads, deliver high performance in large-scale deep learning training and inference and are widely used in cloud-based AI services. Neither is better in general: the choice depends on the application, with neuromorphic chips favoring low-power, adaptive scenarios and TPUs dominating scalable, data-intensive neural network workloads.

Connection

Neuromorphic chips and Tensor Processing Units (TPUs) both aim to improve the computational efficiency and power consumption of artificial intelligence, but through different architectures: neuromorphic chips emulate neurons and synapses with spiking neural networks, while TPUs accelerate deep learning models with dedicated matrix multiplication hardware. Both are part of the broader shift from general-purpose processors toward specialized AI hardware that processes neural networks faster and more efficiently. Because their strengths are complementary, combining them could enable more energy-efficient AI systems in applications such as robotics and natural language processing.

Key Terms

Parallel Processing

TPUs (Tensor Processing Units) excel at parallel processing by executing the dense matrix computations at the heart of deep learning, which accelerates model training with high throughput. Neuromorphic chips mimic neuronal architectures using spiking neural networks, providing massively parallel, event-driven processing that is energy-efficient and well suited to interpreting sensory data in real time. The two paradigms therefore affect AI efficiency and scalability differently: TPUs parallelize arithmetic over large batches of data, while neuromorphic chips parallelize across many neurons that compute only when spikes arrive.
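
The difference between the two parallel processing paradigms can be sketched by counting multiply-accumulate operations. In the hypothetical functions below, `dense_update` evaluates every synapse on every step, as a clock-driven dense layer would, while `event_driven_update` touches only the synapses of inputs that spiked. Both are simplified illustrations, assuming binary spikes and a single layer, rather than models of how a TPU or a neuromorphic chip actually schedules work.

```python
def dense_update(weights, inputs):
    """Clock-driven: every synapse is evaluated on every step (dense mat-vec)."""
    ops = 0
    out = [0.0] * len(weights)
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            out[i] += w * inputs[j]  # one multiply-accumulate per synapse
            ops += 1
    return out, ops


def event_driven_update(weights, spike_indices):
    """Event-driven: only synapses fed by an input that spiked are touched.

    Assumes binary spikes, so each event simply adds the synaptic weight.
    """
    ops = 0
    out = [0.0] * len(weights)
    for j in spike_indices:          # iterate over spike events only
        for i in range(len(weights)):
            out[i] += weights[i][j]  # a binary spike adds the weight directly
            ops += 1
    return out, ops


if __name__ == "__main__":
    W = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
    print(dense_update(W, [0, 1, 0, 1]))
    print(event_driven_update(W, [1, 3]))
```

For this 3x4 weight matrix with two active inputs, both functions produce `[6.0, 14.0, 22.0]`, but the event-driven version performs 6 operations instead of 12. The savings grow as input activity becomes sparser, which is the main source of neuromorphic energy efficiency; for dense inputs the advantage disappears.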

Synaptic Emulation

TPUs do not emulate synapses directly: synaptic weights are stored as dense matrices and applied through matrix multiplication with efficient data flow to accelerate neural network training and inference. Neuromorphic chips, by contrast, model biological synapses within spiking neural networks for energy-efficient computation, relying on event-driven communication and plasticity mechanisms that let synaptic weights adapt. This emulates synaptic behavior more naturally than the fixed-function matrix units in TPUs, and advances in synaptic emulation are a key direction for future AI hardware.
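
One widely studied plasticity mechanism is spike-timing-dependent plasticity (STDP): a synapse is strengthened when the presynaptic neuron fires shortly before the postsynaptic one and weakened when the order is reversed. Some neuromorphic chips, Intel Loihi among them, support programmable on-chip learning rules of this kind. The pair-based sketch below assumes exponential timing windows; the helper names `stdp_delta_w` and `apply_stdp` and the constants `a_plus`, `a_minus`, `tau_plus`, and `tau_minus` are illustrative assumptions, not any chip's actual learning engine.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair.

    Times are assumed to be in milliseconds; constants are illustrative.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the time gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, also decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0  # simultaneous spikes: no change in this simple model


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum STDP over all pre/post spike pairs, then clip to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta_w(t_pre, t_post)
    return min(max(w, w_min), w_max)


if __name__ == "__main__":
    print(apply_stdp(0.5, [10], [15]))  # causal order: weight increases
    print(apply_stdp(0.5, [15], [10]))  # reversed order: weight decreases
```

For example, `apply_stdp(0.5, [10], [15])` raises the weight slightly above 0.5, while swapping the spike times lowers it. A TPU has no equivalent built-in mechanism; its weights change only through training steps (e.g., gradient descent) computed with matrix operations.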

Matrix Multiplication

Tensor Processing Units (TPUs) are specialized hardware for accelerating the matrix multiplications essential to deep learning; they use systolic arrays, grids of multiply-accumulate units that pass operands between neighbors, to execute large tensor operations efficiently. Neuromorphic chips mimic neural architectures with event-driven computation: a spike triggers work only for the synapses it reaches, which amounts to an energy-efficient sparse matrix-vector product. On dense matrix workloads, however, neuromorphic chips often lag TPUs in raw throughput, so the better fit depends on how sparse and event-driven the target neural network application is.
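
A systolic array can be illustrated with a cycle-level simulation. For readability, the sketch below uses a simple output-stationary dataflow, in which each processing element (PE) keeps one output value while rows of `A` flow right and columns of `B` flow down with a one-cycle delay per hop; this is an assumption for illustration and differs from the weight-stationary arrangement described for Google's first-generation TPU. The function `systolic_matmul` is hypothetical and does not model any TPU generation's array size, dataflow, or timing.

```python
def systolic_matmul(A, B):
    """Cycle-level sketch of an output-stationary systolic array computing A @ B."""
    M, K = len(A), len(A[0])
    K2, N = len(B), len(B[0])
    if K != K2:
        raise ValueError("inner dimensions must match")
    acc = [[0] * N for _ in range(M)]    # one accumulator per processing element (PE)
    a_reg = [[0] * N for _ in range(M)]  # value each PE passes to its right neighbour
    b_reg = [[0] * N for _ in range(M)]  # value each PE passes to the PE below
    # The last PE (M-1, N-1) sees its final operand pair at cycle M + N + K - 3.
    for t in range(M + N + K - 2):
        new_a = [[0] * N for _ in range(M)]
        new_b = [[0] * N for _ in range(M)]
        for i in range(M):
            for j in range(N):
                # Left edge is fed row i of A, skewed by i cycles; others read the left PE.
                if j == 0:
                    k = t - i
                    a_in = A[i][k] if 0 <= k < K else 0
                else:
                    a_in = a_reg[i][j - 1]
                # Top edge is fed column j of B, skewed by j cycles; others read the PE above.
                if i == 0:
                    k = t - j
                    b_in = B[k][j] if 0 <= k < K else 0
                else:
                    b_in = b_reg[i - 1][j]
                acc[i][j] += a_in * b_in  # multiply-accumulate in place
                new_a[i][j] = a_in        # forward operands on the next cycle
                new_b[i][j] = b_in
        a_reg, b_reg = new_a, new_b
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Here `systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`, matching an ordinary matrix product. The skewed feeding is what lets every PE do useful work once the array fills, so a large array sustains many multiply-accumulates per cycle while each operand is fetched from memory only once and then reused as it moves between neighbors.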

dowidth.com
