Neural Radiance Fields (NeRF) use a neural network to represent a 3D scene and synthesize novel views from input images, and they typically reproduce complex lighting and view-dependent effects better than traditional photogrammetry. Photogrammetry relies on overlapping photographs and geometric computation to create 3D models, but it often struggles with reflective or low-texture surfaces. This article compares the two approaches and explains how they relate.

Why it is important

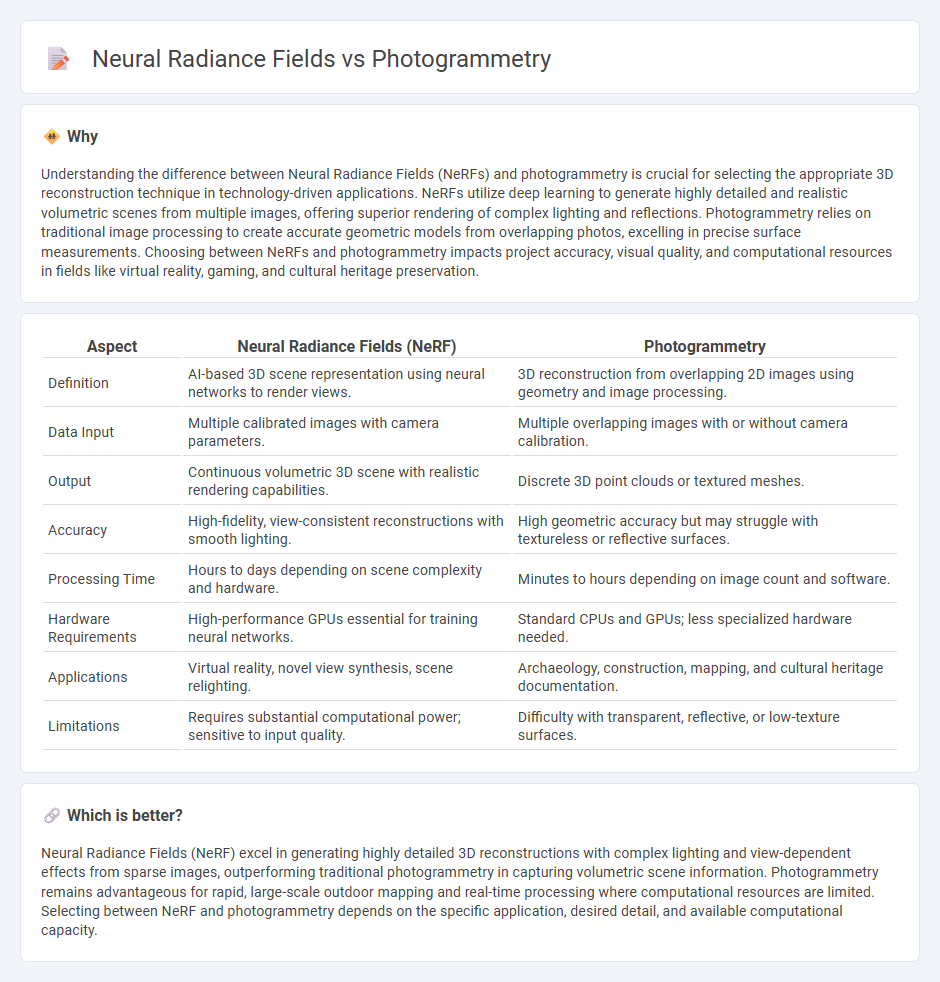

Understanding the difference between Neural Radiance Fields (NeRF) and photogrammetry is crucial for selecting the appropriate 3D reconstruction technique. NeRF uses deep learning to generate detailed, realistic volumetric scenes from multiple images, and it renders complex lighting and reflections well. Photogrammetry relies on traditional image processing to create accurate geometric models from overlapping photos, and it excels at precise surface measurements. The choice between them affects geometric accuracy, visual quality, and computational cost in fields like virtual reality, gaming, and cultural heritage preservation.

Comparison Table

| Aspect | Neural Radiance Fields (NeRF) | Photogrammetry |
|---|---|---|
| Definition | AI-based 3D scene representation using neural networks to render views. | 3D reconstruction from overlapping 2D images using geometry and image processing. |
| Data Input | Multiple calibrated images with camera parameters. | Multiple overlapping images with or without camera calibration. |
| Output | Continuous volumetric 3D scene with realistic rendering capabilities. | Discrete 3D point clouds or textured meshes. |
| Accuracy | High-fidelity, view-consistent reconstructions with smooth lighting. | High geometric accuracy but may struggle with textureless or reflective surfaces. |
| Processing Time | Hours to days depending on scene complexity and hardware. | Minutes to hours depending on image count and software. |
| Hardware Requirements | High-performance GPUs essential for training neural networks. | Standard CPUs and GPUs; less specialized hardware needed. |
| Applications | Virtual reality, novel view synthesis, scene relighting. | Archaeology, construction, mapping, and cultural heritage documentation. |
| Limitations | Requires substantial computational power; sensitive to input quality. | Difficulty with transparent, reflective, or low-texture surfaces. |

Which is better?

Neither method is better in every case. Neural Radiance Fields (NeRF) excel at reproducing complex lighting and view-dependent effects from calibrated multi-view images, and they capture volumetric scene information that photogrammetry's point clouds and meshes do not. Photogrammetry remains advantageous for large-scale outdoor mapping, for work that needs high geometric accuracy, and for situations where processing time or computational resources are limited. The choice depends on the specific application, the kind of detail required (appearance or geometry), and the available computational capacity.

Connection

Neural Radiance Fields (NeRF) and photogrammetry are connected through their shared use in 3D reconstruction and computer vision. Both start from multiple images of the same scene: photogrammetry turns them into accurate textured 3D models, while NeRF trains a neural network on them to synthesize novel views by modeling volumetric scene properties. In practice, the camera parameters that NeRF requires are often estimated with structure-from-motion, a technique that comes from photogrammetry. The two can therefore be combined, with photogrammetry supplying geometric data and NeRF supplying continuous volumetric modeling and realistic rendering.

Key Terms

3D Reconstruction

Photogrammetry creates 3D reconstructions by analyzing multiple overlapping images to generate dense point clouds and textured meshes with high geometric accuracy. Neural Radiance Fields (NeRF) use deep learning to reconstruct a 3D scene as a volumetric representation encoded in a neural network, which allows novel views to be synthesized with convincing lighting and reflections.

Multi-view Imaging

Photogrammetry reconstructs 3D models by triangulating points seen in multiple overlapping images, relying heavily on feature matching and camera calibration. Neural Radiance Fields (NeRF) also work from multiple views, but instead of extracting explicit geometry they optimize radiance and density values so that rendered views match the input images from every viewpoint.
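The triangulation step mentioned above can be illustrated with a minimal sketch. It assumes each matched feature has already been converted into a viewing ray (a camera center plus a direction), which in a real pipeline comes from camera calibration. The function name `triangulate_midpoint` and the example camera positions are hypothetical, and the midpoint method shown is just one simple triangulation technique, not necessarily what any particular photogrammetry package uses.

```python
def dot(u, v):
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(o1, d1, o2, d2, eps=1e-12):
    """Estimate a 3D point from two viewing rays (hypothetical helper).

    Each ray is origin + s * direction. The result is the midpoint of the
    shortest segment connecting the two rays.
    """
    w = [a - b for a, b in zip(o1, o2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        # Parallel rays carry no depth information.
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    return [(x + y) / 2.0 for x, y in zip(p1, p2)]


# Two assumed cameras one unit apart, both looking at the same scene point.
point = triangulate_midpoint(
    [0.0, 0.0, 0.0], [0.5, 0.2, 2.0],
    [1.0, 0.0, 0.0], [-0.5, 0.2, 2.0],
)
print(point)
```

With noisy feature matches the two rays rarely intersect exactly, which is why the midpoint of the closest approach is used rather than a true intersection.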

Volumetric Rendering

Photogrammetry reconstructs 3D scenes by triangulating multiple images to generate textured meshes, offering precise geometric accuracy but limited flexibility in lighting and view synthesis. Neural Radiance Fields (NeRF) represent a scene as a continuous function of density and radiance, and render it by accumulating color along camera rays, which enables photorealistic novel views with view-dependent effects such as reflections.
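To make the rendering step concrete, here is a minimal sketch of how samples along one camera ray can be composited into a pixel color. It follows the standard NeRF-style quadrature, where each sample's opacity is `1 - exp(-sigma * delta)` and is weighted by the light that survives the samples in front of it. The function name `composite_ray` and the sample values are assumptions for illustration; in a real NeRF the densities and colors would come from the trained network.

```python
import math


def composite_ray(densities, colors, deltas):
    """Composite samples along one ray, front to back (hypothetical helper).

    densities: volume density (sigma) at each sample
    colors:    RGB triple at each sample
    deltas:    distance between consecutive samples
    Returns the pixel color and the remaining transmittance.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# Assumed samples: empty space, a thin green haze, then a dense red surface.
pixel, remaining = composite_ray(
    densities=[0.0, 0.5, 50.0],
    colors=[(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[0.1, 0.1, 0.1],
)
print(pixel, remaining)
```

Because every operation here is differentiable, the same accumulation can be used during training to compare rendered pixels with the input photographs.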

