Retrieval augmented generation (RAG) enhances language models by combining pre-trained transformers with external knowledge bases, enabling more accurate and contextually relevant responses without extensive retraining. Fine-tuning adjusts the model's weights on specific datasets to specialize performance in targeted tasks, but often requires significant computational resources and time. Explore how these distinct approaches impact the future of AI-driven content generation and knowledge extraction.

Why it is important

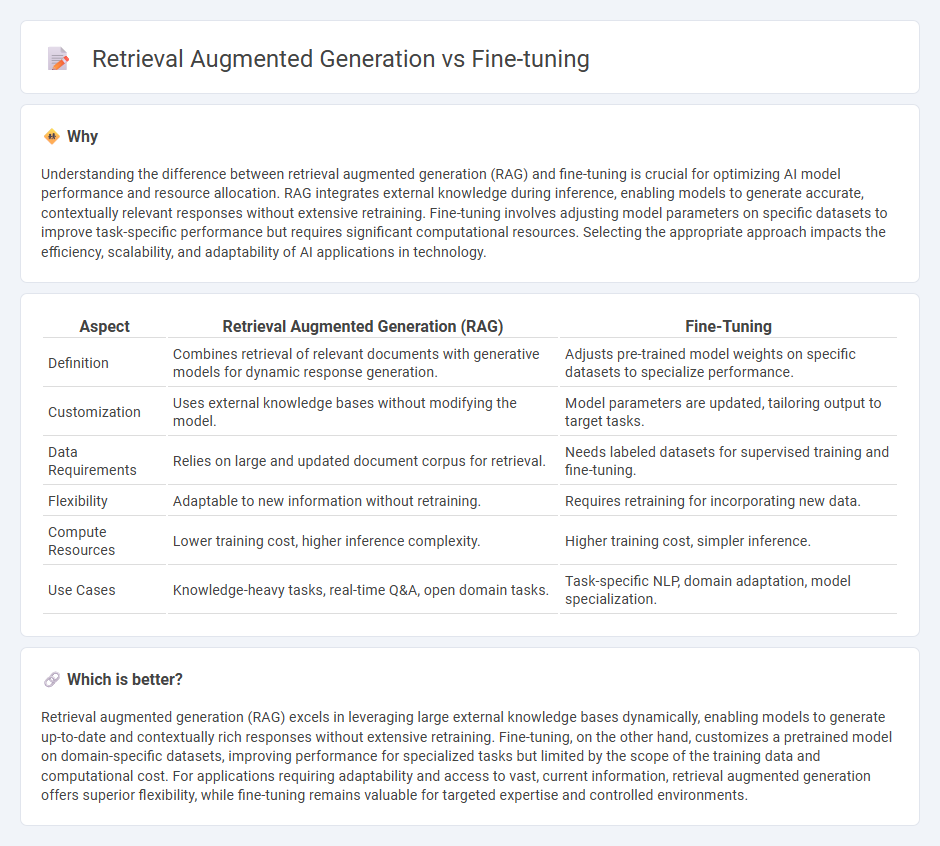

Understanding the difference between retrieval augmented generation (RAG) and fine-tuning is crucial for optimizing AI model performance and resource allocation. RAG integrates external knowledge during inference, enabling models to generate accurate, contextually relevant responses without extensive retraining. Fine-tuning involves adjusting model parameters on specific datasets to improve task-specific performance but requires significant computational resources. Selecting the appropriate approach impacts the efficiency, scalability, and adaptability of AI applications in technology.

Comparison Table

| Aspect | Retrieval Augmented Generation (RAG) | Fine-Tuning |

|---|---|---|

| Definition | Combines retrieval of relevant documents with generative models for dynamic response generation. | Adjusts pre-trained model weights on specific datasets to specialize performance. |

| Customization | Uses external knowledge bases without modifying the model. | Model parameters are updated, tailoring output to target tasks. |

| Data Requirements | Relies on large and updated document corpus for retrieval. | Needs labeled datasets for supervised training and fine-tuning. |

| Flexibility | Adaptable to new information without retraining. | Requires retraining for incorporating new data. |

| Compute Resources | Lower training cost, higher inference complexity. | Higher training cost, simpler inference. |

| Use Cases | Knowledge-heavy tasks, real-time Q&A, open domain tasks. | Task-specific NLP, domain adaptation, model specialization. |

Which is better?

Retrieval augmented generation (RAG) excels in leveraging large external knowledge bases dynamically, enabling models to generate up-to-date and contextually rich responses without extensive retraining. Fine-tuning, on the other hand, customizes a pretrained model on domain-specific datasets, improving performance for specialized tasks but limited by the scope of the training data and computational cost. For applications requiring adaptability and access to vast, current information, retrieval augmented generation offers superior flexibility, while fine-tuning remains valuable for targeted expertise and controlled environments.

Connection

Retrieval-augmented generation (RAG) integrates external knowledge sources with language models to improve response accuracy by retrieving relevant information during generation. Fine-tuning enhances this process by adapting pre-trained models to specific domains or tasks, optimizing performance on retrieval and generation components. Together, RAG and fine-tuning create more context-aware, precise AI systems by combining dynamic knowledge retrieval with task-specific model adjustments.

Key Terms

Model Adaptation

Fine-tuning involves updating a pre-trained model's weights on domain-specific data to enhance task performance and adapt the model's internal representations. Retrieval-augmented generation (RAG) integrates external knowledge sources dynamically during inference, allowing models to access relevant information without altering their parameters. Explore these model adaptation techniques in depth to determine the best approach for your AI applications.

Knowledge Retrieval

Fine-tuning involves updating a pre-trained language model on domain-specific data to improve responses by embedding relevant knowledge directly into model parameters. Retrieval Augmented Generation (RAG) combines large language models with external knowledge bases or document retrieval systems, enabling dynamic access to up-to-date or extensive information during generation. Explore these techniques in depth to understand their strengths in optimizing knowledge retrieval for AI applications.

Training Data

Fine-tuning involves updating a pre-trained model's weights using a specific labeled dataset tailored to a particular task, enhancing its performance within that domain. Retrieval-augmented generation (RAG) supplements the model with external, dynamically queried data without altering the model itself, leveraging large-scale knowledge bases or corpora to provide context-aware responses. Explore more to understand how these approaches impact training efficiency and application scope.

Source and External Links

Fine-Tuning LLMs: A Guide With Examples - DataCamp - Fine-tuning involves taking a pre-trained model and further training it on domain-specific datasets using approaches like supervised fine-tuning, few-shot learning, transfer learning, and domain-specific fine-tuning to adapt the model to specialized tasks or industries.

What is Fine-Tuning? | IBM - Fine-tuning is the process of adapting a pre-trained model for specific use cases by continuing training on task-relevant data, leveraging prior learning to reduce computational resources and labeled data needed for customization.

Fine-tuning (deep learning) - Wikipedia - In deep learning, fine-tuning modifies a pre-trained neural network's parameters with new data, often freezing early layers and training later layers to specialize, and can be combined with reinforcement learning, as seen in models like ChatGPT.

dowidth.com

dowidth.com