Biosignal decoding interprets neural and other physiological signals, such as EEG, EMG, or ECG, and translates them into actionable data, supporting advances in neural prosthetics and brain-computer interfaces. Audio synthesis, by contrast, generates sound electronically, using algorithms, software, or electronic instruments, to create music or speech. The sections below compare the two fields and describe where they intersect.

Why it is important

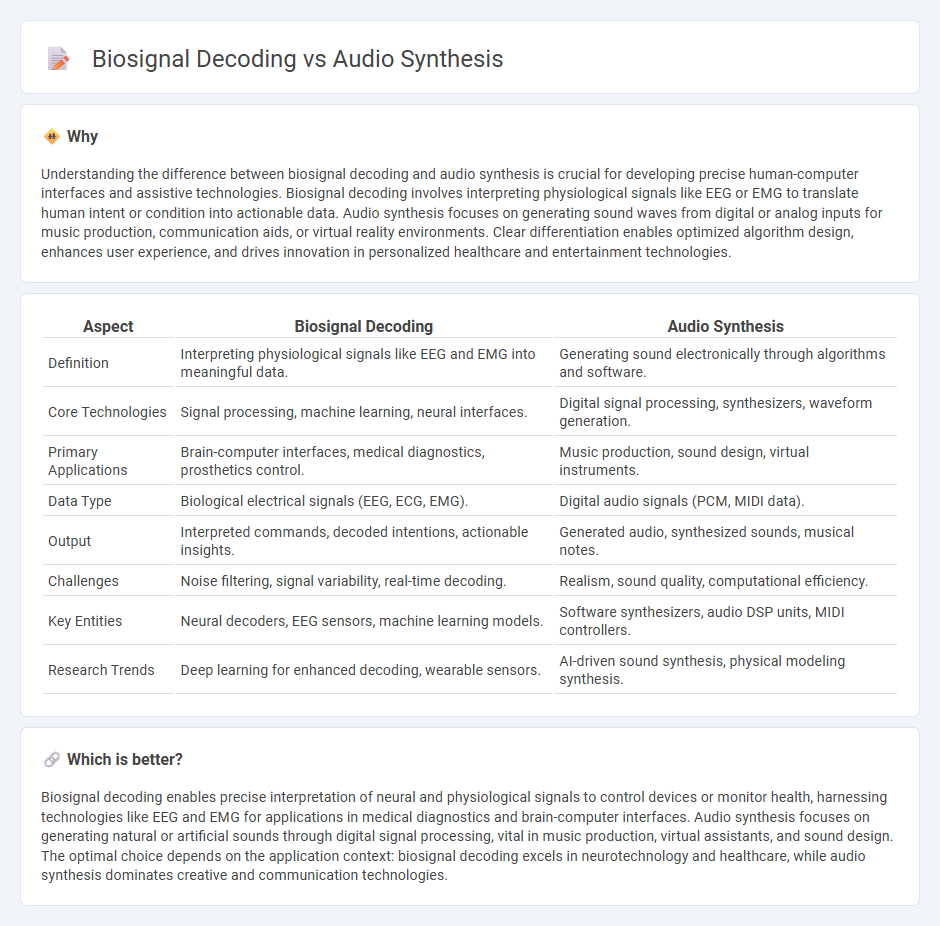

Understanding the difference between biosignal decoding and audio synthesis is crucial for developing precise human-computer interfaces and assistive technologies. Biosignal decoding interprets physiological signals such as EEG or EMG to infer a person's intent or condition and turn it into actionable data. Audio synthesis generates sound waves from digital or analog inputs for music production, communication aids, or virtual reality environments. Because the two fields work with different data, goals, and constraints, distinguishing them clearly helps in choosing algorithms, designing the user experience, and building personalized healthcare and entertainment technologies.

Comparison Table

| Aspect | Biosignal Decoding | Audio Synthesis |
|---|---|---|
| Definition | Interpreting physiological signals such as EEG and EMG into meaningful data. | Generating sound electronically through algorithms and software. |
| Core Technologies | Signal processing, machine learning, neural interfaces. | Digital signal processing, synthesizers, waveform generation. |
| Primary Applications | Brain-computer interfaces, medical diagnostics, prosthetics control. | Music production, sound design, virtual instruments. |
| Data Type | Bioelectrical signals (EEG, ECG, EMG). | Digital audio (PCM samples), often driven by control data such as MIDI. |
| Output | Interpreted commands, decoded intentions, actionable insights. | Generated audio, synthesized sounds, musical notes. |
| Challenges | Noise filtering, signal variability, real-time decoding. | Realism, sound quality, computational efficiency. |
| Key Entities | Neural decoders, EEG sensors, machine learning models. | Software synthesizers, audio DSP units, MIDI controllers. |
| Research Trends | Deep learning for improved decoding, wearable sensors. | AI-driven sound synthesis, physical modeling synthesis. |
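
To make the "Noise filtering, signal variability, real-time decoding" row more concrete, here is a minimal sketch of one of the simplest decoders: detecting muscle activation from an EMG-like signal by thresholding a moving RMS envelope. It is an illustration under stated assumptions, not a clinical method: the signal is synthetic, and the function names `moving_rms` and `decode_activation`, the 0.1 s window, and the 0.2 threshold are hypothetical choices made for this example.

```python
import numpy as np


def moving_rms(x: np.ndarray, window: int) -> np.ndarray:
    """Sliding-window root-mean-square envelope, same length as x."""
    kernel = np.ones(window) / window
    return np.sqrt(np.convolve(x ** 2, kernel, mode="same"))


def decode_activation(x: np.ndarray, fs: float, window_s: float = 0.1,
                      threshold: float = 0.2) -> np.ndarray:
    """Label each sample True (muscle active) or False (rest).

    window_s and threshold are illustrative values; a real decoder would
    calibrate them per user and per electrode.
    """
    window = max(1, int(round(window_s * fs)))
    centered = x - np.mean(x)  # remove any DC offset before squaring
    return moving_rms(centered, window) > threshold


# Synthetic EMG-like recording: low-level noise with a 0.4 s burst of activity.
fs = 1000.0
t = np.arange(2000) / fs
rng = np.random.default_rng(0)
signal = 0.05 * rng.standard_normal(t.size)
burst = (t >= 0.8) & (t < 1.2)
signal[burst] += rng.standard_normal(int(burst.sum()))

active = decode_activation(signal, fs)
print("active fraction:", active.mean())
```

Subtracting the mean over the whole recording is an offline shortcut; a real-time decoder would typically use a causal filter and a trailing window instead of the centered one used by `mode="same"`.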

Which is better?

Neither field is better in general, because they solve different problems. Biosignal decoding interprets neural and physiological signals, typically recorded with EEG or EMG, to control devices or monitor health, which makes it central to medical diagnostics and brain-computer interfaces. Audio synthesis generates natural or artificial sounds through digital signal processing and is central to music production, virtual assistants, and sound design. The right choice depends on the application: biosignal decoding for neurotechnology and healthcare, audio synthesis for creative and communication technologies.

Connection

Biosignal decoding converts physiological signals such as EEG or EMG into digital data that can describe neural or muscular activity in real time. Audio synthesis can then use this decoded data to generate or modulate sound, an approach often called sonification, enabling applications such as auditory feedback in brain-computer interfaces and adaptive music systems. Combining the two extends human-computer interaction by letting body signals drive sound output directly.
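
A minimal sketch of that hand-off, assuming the decoder already produces a slow control stream normalized to 0..1 (for example an EMG envelope). The function name `control_to_audio`, the 100 Hz control rate, and the 220 to 880 Hz pitch range are hypothetical choices for illustration; the technique shown is simple pitch mapping of a sine oscillator, one of many possible sonification mappings.

```python
import numpy as np


def control_to_audio(control: np.ndarray, control_fs: float, audio_fs: float,
                     f_min: float = 220.0, f_max: float = 880.0) -> np.ndarray:
    """Turn a normalized control stream (0..1) into a pitch-modulated sine tone.

    `control` stands in for a decoded biosignal feature such as an EMG
    envelope; the 220-880 Hz range is an arbitrary illustrative choice.
    """
    control = np.clip(control, 0.0, 1.0)
    n_audio = int(round(control.size / control_fs * audio_fs))
    t_audio = np.arange(n_audio) / audio_fs
    t_control = np.arange(control.size) / control_fs
    # Upsample the slow control signal to the audio rate.
    c = np.interp(t_audio, t_control, control)
    # Exponential mapping: equal control steps give equal musical intervals.
    freq = f_min * (f_max / f_min) ** c
    # Integrate frequency into phase so pitch changes do not cause waveform jumps.
    phase = 2 * np.pi * np.cumsum(freq) / audio_fs
    return np.sin(phase)


# A 1 s control ramp at 100 Hz (e.g. a muscle contraction building up).
control = np.linspace(0.0, 1.0, 100)
audio = control_to_audio(control, control_fs=100.0, audio_fs=8000.0)
```

Accumulating phase instead of computing `sin(2*pi*f*t)` with a time-varying `f` is the design choice that keeps the waveform continuous as the decoded signal changes.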

Key Terms

**Audio Synthesis:**

Audio synthesis generates artificial sounds using algorithms and digital signal processing techniques, making it possible to create music, speech, and environmental sounds with high fidelity. Techniques such as wavetable synthesis (reading stored single-cycle waveforms at different speeds), granular synthesis (assembling sound from many short grains), and neural network-based models such as WaveNet provide control over timbre, pitch, and duration for realistic or deliberately synthetic audio.
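
As an illustration of wavetable synthesis, the sketch below builds one cycle of a band-limited sawtooth additively and plays it back at a chosen pitch by stepping through the table with linear interpolation. The function names, the 2048-entry table, and the 8-harmonic limit are assumptions for this example, not taken from any particular synthesizer.

```python
import numpy as np


def make_wavetable(size: int = 2048, harmonics: int = 8) -> np.ndarray:
    """One cycle of a band-limited sawtooth, built additively from harmonics 1..harmonics."""
    phase = np.arange(size) / size
    table = np.zeros(size)
    for k in range(1, harmonics + 1):
        table += np.sin(2 * np.pi * k * phase) / k
    return table / np.max(np.abs(table))  # normalize to [-1, 1]


def wavetable_oscillator(table: np.ndarray, freq: float, fs: float,
                         n_samples: int) -> np.ndarray:
    """Play the table at `freq` Hz by stepping through it with linear interpolation."""
    size = table.size
    # Table position advances by freq * size / fs entries per output sample.
    pos = (np.arange(n_samples) * freq * size / fs) % size
    i0 = np.floor(pos).astype(int)
    i1 = (i0 + 1) % size  # wrap to the start of the table after the last entry
    frac = pos - i0
    return (1.0 - frac) * table[i0] + frac * table[i1]


table = make_wavetable()
tone = wavetable_oscillator(table, freq=440.0, fs=44100.0, n_samples=44100)
```

Limiting the table to 8 harmonics keeps every partial of a 440 Hz note (up to 3520 Hz) well below the 22050 Hz Nyquist limit; higher notes would need a table with fewer harmonics to avoid aliasing.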

**Oscillator:**

Oscillators matter to both fields, though in different ways. In audio synthesis, an oscillator generates a periodic waveform, such as a sine, square, sawtooth, or triangle wave, that serves as the basic tone source: its frequency sets the pitch, and low-frequency oscillators (LFOs) modulate other parameters such as amplitude or filter cutoff. In biosignal decoding the relationship runs the other way: oscillatory activity, such as EEG rhythms or the cardiac cycle in an ECG, is detected and measured rather than generated, for example by comparing a recording with reference sinusoids or by estimating power in frequency bands.
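
One way reference oscillators are used on the decoding side can be sketched as follows: for each candidate frequency, fit a sine/cosine pair to the recording by least squares and pick the frequency that explains the most variance. This is a simplified, single-channel relative of the reference-signal approach used in SSVEP decoding (full methods typically apply canonical correlation analysis across many channels and include harmonics). The function names, the synthetic 12 Hz signal, and the candidate list are hypothetical.

```python
import numpy as np


def reference_fit(x: np.ndarray, fs: float, freq: float) -> float:
    """Fraction of the (mean-removed) signal variance explained by a sin/cos pair at `freq`."""
    t = np.arange(x.size) / fs
    refs = np.column_stack([np.sin(2 * np.pi * freq * t),
                            np.cos(2 * np.pi * freq * t)])
    centered = x - x.mean()
    # Least-squares projection of the signal onto the two reference waveforms.
    coef, *_ = np.linalg.lstsq(refs, centered, rcond=None)
    fitted = refs @ coef
    return float(np.sum(fitted ** 2) / np.sum(centered ** 2))


def detect_rhythm(x: np.ndarray, fs: float, candidates: list) -> float:
    """Return the candidate frequency whose reference oscillator fits x best."""
    scores = [reference_fit(x, fs, f) for f in candidates]
    return candidates[int(np.argmax(scores))]


# Synthetic single-channel recording: a 12 Hz rhythm buried in noise (4 s at 250 Hz).
fs = 250.0
t = np.arange(1000) / fs
rng = np.random.default_rng(1)
eeg = 0.5 * np.sin(2 * np.pi * 12.0 * t + 0.3) + rng.standard_normal(t.size)
print(detect_rhythm(eeg, fs, [8.0, 10.0, 12.0, 15.0]))
```

Using both a sine and a cosine reference makes the fit insensitive to the unknown phase of the rhythm.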

**Waveform:**

Waveform analysis plays a crucial role in both audio synthesis and biosignal decoding, because both depend on representing signal patterns accurately. In audio synthesis, shaping the waveform determines timbre and enables realistic sound generation; in biosignal decoding, analyzing a waveform's shape and frequency content supports reliable interpretation of physiological signals such as EEG or ECG.
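
A common first step in analyzing an EEG waveform's frequency content is relative band power. The sketch below estimates it from a Hann-windowed periodogram of a synthetic trace. The band edges are conventional approximations (sources differ on exact boundaries), and the function name `relative_band_power` and the 1 to 40 Hz normalization range are assumptions for this example.

```python
import numpy as np

# Conventional EEG band edges in Hz; exact boundaries vary between sources.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def relative_band_power(x: np.ndarray, fs: float, bands: dict = BANDS) -> dict:
    """Share of 1-40 Hz power falling in each band, from a Hann-windowed periodogram."""
    centered = x - np.mean(x)
    spectrum = np.abs(np.fft.rfft(centered * np.hanning(centered.size))) ** 2
    freqs = np.fft.rfftfreq(centered.size, d=1.0 / fs)
    total = spectrum[(freqs >= 1.0) & (freqs <= 40.0)].sum()
    return {name: float(spectrum[(freqs >= lo) & (freqs < hi)].sum() / total)
            for name, (lo, hi) in bands.items()}


# Synthetic 4 s trace: strong 10 Hz (alpha) rhythm, weaker 20 Hz (beta) component, noise.
fs = 250.0
t = np.arange(1000) / fs
rng = np.random.default_rng(2)
trace = (np.sin(2 * np.pi * 10.0 * t) + 0.3 * np.sin(2 * np.pi * 20.0 * t)
         + 0.1 * rng.standard_normal(t.size))
powers = relative_band_power(trace, fs)
print(powers)
```

The Hann window reduces spectral leakage, so the strong 10 Hz component stays in the alpha band instead of spilling into its neighbors; averaged methods such as Welch's would give smoother estimates on real recordings.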

Source and External Links

Synthesizer - Synthesizers generate audio through various methods like subtractive synthesis, additive synthesis, and frequency modulation.

Sound Synthesis: Part 1 - This article explores the basics of sound synthesis, including the role of oscillators and filters in creating complex sounds.

Sound synthesis 101 - A guide that covers the basics of synthesizers and how they are used to generate and manipulate sounds through various synthesis techniques.

dowidth.com