Self-supervised learning trains predictive models on unlabeled data by generating its own training signals, which greatly reduces the need for manual annotation. Active learning strategically selects the most informative data points for labeling, so a model can perform well with a small labeled dataset. This article compares the nuances and applications of the two approaches to help you decide which one suits your AI problem.

Why it is important

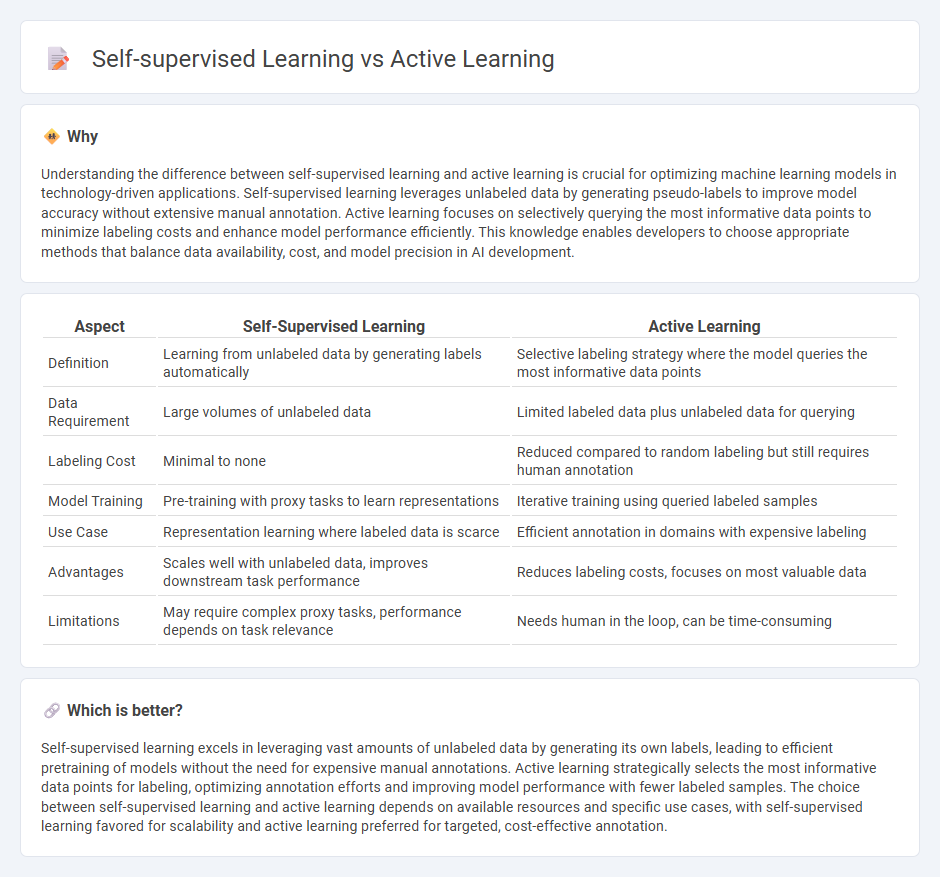

Understanding the difference between self-supervised learning and active learning is important for building machine learning models efficiently. Self-supervised learning uses unlabeled data by deriving training targets from the data itself (for example, predicting a word that has been masked out of a sentence), which improves model accuracy without extensive manual annotation. Active learning selectively queries the most informative data points for labeling, which lowers labeling costs while still improving model performance. Knowing both lets developers choose the method that best balances data availability, cost, and model accuracy.
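To make "deriving training targets from the data itself" concrete, here is a minimal sketch of one common self-supervised pretext task, masked-token prediction. It assumes naive whitespace tokenization and a `[MASK]` placeholder string; both are illustrative choices, not the API of any particular library. Every unlabeled sentence yields several (input, target) pairs with no human labeling.

```python
from typing import List, Tuple

MASK = "[MASK]"  # illustrative placeholder token (assumption, not a library constant)


def masked_token_pairs(sentence: str) -> List[Tuple[str, str]]:
    """Turn one unlabeled sentence into (masked_input, target) training pairs.

    Each token is hidden in turn and becomes the label for that pair,
    so the supervision signal comes entirely from the data itself.
    """
    tokens = sentence.split()  # naive whitespace tokenization (assumption)
    pairs = []
    for i, target in enumerate(tokens):
        masked = tokens[:i] + [MASK] + tokens[i + 1:]
        pairs.append((" ".join(masked), target))
    return pairs


unlabeled = ["the cat sat on the mat"]
for text in unlabeled:
    for masked_input, label in masked_token_pairs(text):
        print(f"{masked_input!r} -> {label!r}")
```

BERT-style models typically mask a random subset of tokens rather than each token in turn; the exhaustive loop here just keeps the sketch deterministic.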

Comparison Table

| Aspect | Self-Supervised Learning | Active Learning |
|---|---|---|
| Definition | Learns from unlabeled data by deriving training targets from the data itself (pretext tasks) | Iterative labeling strategy in which the model queries the most informative unlabeled points for a human to label |
| Data Requirement | Large volumes of unlabeled data | A small labeled seed set plus a pool of unlabeled data to query |
| Labeling Cost | None for pretraining; a labeled set is usually still needed to fine-tune on the target task | Lower than labeling at random, but still requires human annotation |
| Model Training | Pretraining on proxy (pretext) tasks to learn representations | Repeated cycles of training, querying, and retraining on the newly labeled samples |
| Use Case | Representation learning when labeled data is scarce but unlabeled data is abundant | Efficient annotation in domains where labeling is expensive |
| Advantages | Scales with unlabeled data; learned representations improve downstream task performance | Reduces labeling cost by focusing effort on the most valuable data |
| Limitations | Requires designing suitable proxy tasks; performance depends on how well the proxy task relates to the target task | Needs a human in the loop; labeling rounds can be time-consuming |

Which is better?

Self-supervised learning excels at exploiting large amounts of unlabeled data: it generates its own labels, so models can be pretrained without expensive manual annotation. Active learning selects the most informative data points for labeling, concentrating annotation effort where it helps the model most and reaching good performance with fewer labeled samples. Neither is universally better; the choice depends on available resources and the use case. Self-supervised learning suits settings where unlabeled data is plentiful and scalability matters, while active learning suits targeted, cost-effective annotation.

Connection

Self-supervised learning creates training targets from the unlabeled data itself, enabling models to learn useful representations without extensive manual annotation. Active learning complements this by selectively querying the most informative samples for labeling, improving accuracy with fewer labeled examples. Combining the two, for example by pretraining with self-supervision and then using active learning to choose which examples to label for fine-tuning, makes efficient use of both the unlabeled data and the annotation budget.
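One way to combine the two is to embed the unlabeled pool with a self-supervised encoder and let active learning pick samples that cover that embedding space. The sketch below uses greedy k-center selection, the diversity criterion behind "core-set" style active learning, over precomputed embeddings. The 2-D vectors are made up and stand in for encoder outputs; `k_center_greedy` is a hypothetical helper, not a library function.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def k_center_greedy(pool: List[Vector], labeled: List[int], budget: int) -> List[int]:
    """Pick `budget` pool indices to send for labeling.

    Each step selects the point whose distance to its nearest
    already-covered point (labeled or previously picked) is largest,
    so the chosen samples spread out across the embedding space.
    """
    chosen = list(labeled)
    picks: List[int] = []
    for _ in range(budget):
        best_idx, best_dist = -1, -1.0
        for i, x in enumerate(pool):
            if i in chosen:
                continue
            # Distance to the nearest covered point (infinite if nothing is covered yet).
            d = min((euclidean(x, pool[j]) for j in chosen), default=math.inf)
            if d > best_dist:
                best_idx, best_dist = i, d
        if best_idx < 0:
            break  # pool exhausted before the budget ran out
        chosen.append(best_idx)
        picks.append(best_idx)
    return picks


# Made-up 2-D "embeddings" standing in for self-supervised encoder outputs.
pool = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
print(k_center_greedy(pool, labeled=[0], budget=2))  # → [4, 2]
```

Point 1 is never picked because it sits next to the already-labeled point 0, so labels go to distinct regions of the embedding space rather than to near-duplicates. A diversity criterion like this can also be combined with an uncertainty criterion instead of being used alone.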

Key Terms

Labeled Data

Active learning strategically selects the most informative unlabeled data points to be labeled, improving model performance with fewer annotations and lowering labeling costs. Self-supervised learning draws on large amounts of unlabeled data by generating pseudo-labels through pretext tasks, reducing reliance on scarce labeled datasets. Both approaches aim to get more accuracy out of every labeled example.
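A common way to decide which points are "most informative" is uncertainty sampling. The sketch below ranks unlabeled examples by the entropy of the model's predicted class probabilities and returns the top `k` for annotation. The probability lists are hypothetical model outputs, and entropy is only one uncertainty measure; least-confidence and margin sampling are common alternatives.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_uncertain(predictions: Dict[str, List[float]], k: int) -> List[str]:
    """Return the ids of the k unlabeled examples with the highest entropy."""
    ranked = sorted(predictions, key=lambda ex_id: entropy(predictions[ex_id]), reverse=True)
    return ranked[:k]


# Hypothetical softmax outputs for four unlabeled examples (3 classes).
preds = {
    "img_a": [0.98, 0.01, 0.01],  # model is confident
    "img_b": [0.34, 0.33, 0.33],  # model is nearly guessing
    "img_c": [0.60, 0.30, 0.10],
    "img_d": [0.50, 0.49, 0.01],
}
print(select_most_uncertain(preds, k=2))  # → ['img_b', 'img_c']
```

Only the selected ids would go to annotators; the confident prediction for `img_a` suggests a label there would teach the model little.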

Representation Learning

Active learning selectively queries the most informative data points for labeling, which makes supervised representation learning more label-efficient. Self-supervised learning uses patterns intrinsic to the data to generate supervisory signals, enabling robust feature extraction without manual annotation. Both reduce the dependence of representation learning on large labeled datasets.
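Contrastive learning is one widely used way to turn structure in unlabeled data into a supervisory signal: embeddings of two augmented views of the same example should be similar, and embeddings of different examples should not. Below is a minimal pure-Python sketch of an InfoNCE-style loss for a single anchor, assuming the embeddings are already computed. The vectors and the temperature value are illustrative, not taken from any specific method.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: List[Sequence[float]], temperature: float = 0.1) -> float:
    """InfoNCE-style loss for one anchor.

    Cross-entropy of picking the positive out of {positive} + negatives,
    with logits = cosine similarity / temperature.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denom - logits[0]  # equals -log(softmax probability of the positive)


anchor = [1.0, 0.0]
positive = [0.9, 0.1]                  # another augmented view of the same item (made up)
negatives = [[0.0, 1.0], [-1.0, 0.0]]  # views of different items (made up)
print(info_nce(anchor, positive, negatives, temperature=0.5))
```

The loss is small when the positive is the most similar candidate and grows when a negative looks more like the anchor, so minimizing it over many unlabeled examples pushes the encoder toward useful representations.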

Human Annotation

Active learning uses human annotation efficiently: it selects the most informative data points for labeling, reducing the annotation burden while improving model performance. Self-supervised learning minimizes dependence on human labels by using the structure inherent in the data to generate supervisory signals, so models can learn representations from unlabeled data. Because the role of human annotation differs so much between the two paradigms, it should shape how you design your machine learning workflow.
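To show where the human fits, here is a minimal pool-based active learning loop. The `ask_human` function is a hypothetical stand-in for an annotation interface (it applies a hidden rule so the sketch runs), and the model is a deliberately tiny 1-D nearest-centroid classifier; both are illustrative assumptions. Each round, the model is retrained, queries the unlabeled point it is least sure about, and the annotator labels it, until the labeling budget runs out.

```python
from typing import Dict, List


def train_centroids(labeled: Dict[float, int]) -> Dict[int, float]:
    """Fit a 1-D nearest-centroid model: the mean x of each class."""
    by_class: Dict[int, List[float]] = {}
    for x, y in labeled.items():
        by_class.setdefault(y, []).append(x)
    return {y: sum(xs) / len(xs) for y, xs in by_class.items()}


def margin(x: float, centroids: Dict[int, float]) -> float:
    """Gap between the two nearest-centroid distances; small means uncertain.

    Assumes at least two classes have been labeled.
    """
    d = sorted(abs(x - c) for c in centroids.values())
    return d[1] - d[0]


def ask_human(x: float) -> int:
    # Hypothetical annotator: a hidden rule stands in for a person labeling x.
    return 1 if x >= 5.0 else 0


def active_learning_loop(pool: List[float], seed: Dict[float, int], budget: int) -> Dict[float, int]:
    labeled = dict(seed)
    unlabeled = [x for x in pool if x not in labeled]
    for _ in range(budget):
        if not unlabeled:
            break
        centroids = train_centroids(labeled)                         # retrain on current labels
        query = min(unlabeled, key=lambda x: margin(x, centroids))   # most uncertain point
        labeled[query] = ask_human(query)                            # human annotation step
        unlabeled.remove(query)
    return labeled


pool = [0.0, 1.0, 2.0, 4.0, 4.5, 6.0, 8.0, 9.0, 10.0]
seed = {0.0: 0, 10.0: 1}  # must contain at least one example of each class
labels = active_learning_loop(pool, seed, budget=3)
print(labels)  # → {0.0: 0, 10.0: 1, 4.5: 0, 6.0: 1, 4.0: 0}
```

The three queries (4.5, 6.0, 4.0) all land near the true boundary at 5.0, which is the point of the approach: each human label is spent where the model is least certain, not on easy points like 1.0 or 9.0.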

dowidth.com