AI ethics evaluation identifies and addresses the moral concerns raised by artificial intelligence systems, guided by principles such as transparency, fairness, and accountability. Responsible AI governance establishes the policies and regulatory frameworks that keep AI deployment aligned with societal values and legal standards. Used together, the two approaches strengthen trust and reduce the risks of implementing AI.

Why it is important

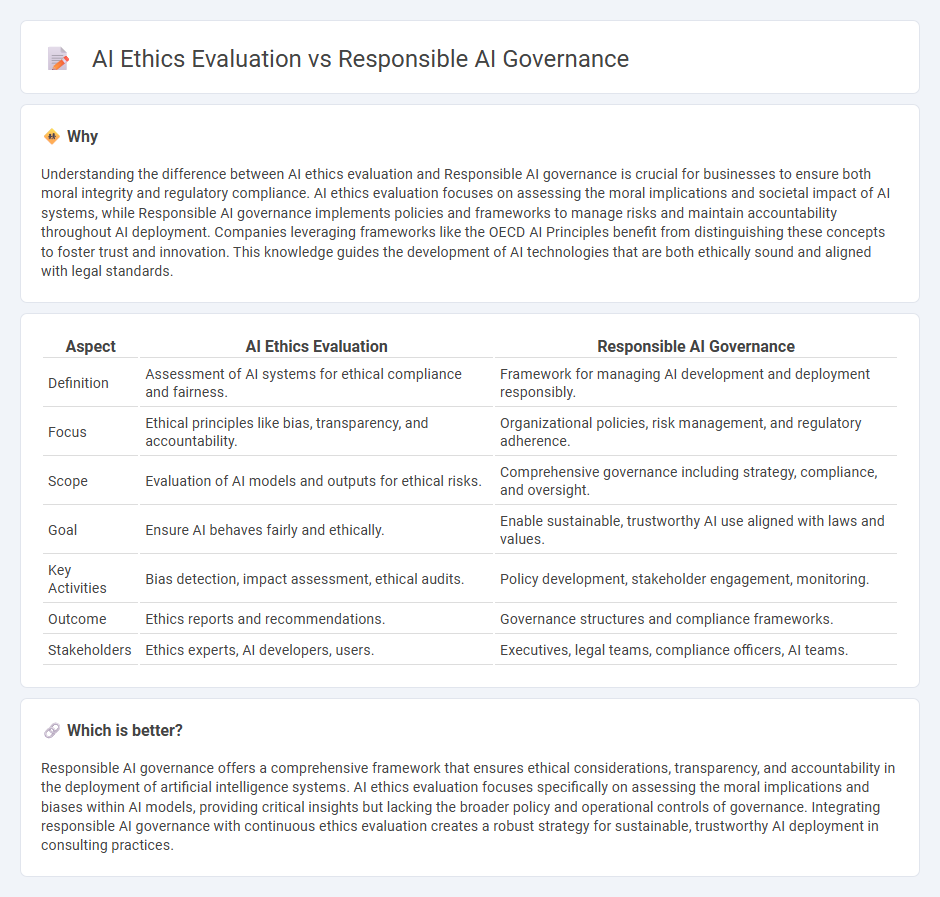

Understanding the difference between AI ethics evaluation and Responsible AI governance is crucial for businesses that need both moral integrity and regulatory compliance. AI ethics evaluation assesses the moral implications and societal impact of AI systems. Responsible AI governance puts in place the policies and frameworks that manage risk and maintain accountability throughout AI deployment. Companies that adopt frameworks such as the OECD AI Principles benefit from keeping the two concepts distinct, which helps them foster both trust and innovation. This understanding guides the development of AI technologies that are ethically sound and aligned with legal standards.

Comparison Table

| Aspect | AI Ethics Evaluation | Responsible AI Governance |
|---|---|---|
| Definition | Assessment of AI systems for ethical compliance and fairness. | Framework for managing AI development and deployment responsibly. |
| Focus | Ethical principles like bias, transparency, and accountability. | Organizational policies, risk management, and regulatory adherence. |
| Scope | Evaluation of AI models and outputs for ethical risks. | Comprehensive governance including strategy, compliance, and oversight. |
| Goal | Ensure AI behaves fairly and ethically. | Enable sustainable, trustworthy AI use aligned with laws and values. |
| Key Activities | Bias detection, impact assessment, ethical audits. | Policy development, stakeholder engagement, monitoring. |
| Outcome | Ethics reports and recommendations. | Governance structures and compliance frameworks. |
| Stakeholders | Ethics experts, AI developers, users. | Executives, legal teams, compliance officers, AI teams. |

Which is better?

Responsible AI governance provides a comprehensive framework for ensuring ethical considerations, transparency, and accountability when artificial intelligence systems are deployed. AI ethics evaluation focuses specifically on assessing the moral implications and biases of AI models. It yields critical insights but lacks the broader policy and operational controls that governance provides. Neither replaces the other. Pairing responsible AI governance with continuous ethics evaluation gives organizations, including consulting practices, a robust strategy for sustainable, trustworthy AI deployment.

Connection

AI ethics evaluation plays a crucial role in responsible AI governance by ensuring that artificial intelligence systems align with moral principles such as fairness, transparency, and accountability. Responsible AI governance frameworks incorporate continuous ethics assessments to mitigate risks related to bias, privacy, and decision-making errors. Integrating AI ethics evaluation into governance policies fosters trust and compliance while guiding sustainable deployment of AI technologies across industries.

Key Terms

Accountability

Responsible AI governance ensures accountability through transparent frameworks, robust oversight mechanisms, and clear compliance standards within organizations. AI ethics evaluation contributes to accountability by identifying and mitigating biases, ensuring fairness, and upholding moral principles throughout AI development and deployment.
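One common oversight mechanism that supports accountability is a tamper-evident audit trail. It records who approved, changed, or deployed an AI system. The sketch below is a minimal illustration, assuming a simple hash chain in which each entry stores the SHA-256 hash of the previous one. Because of this chaining, editing any past entry breaks verification. The `AuditLog` class and its field names are hypothetical. The sketch also omits timestamps, persistent storage, and signing, which a real system would need.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Hypothetical append-only, hash-chained log of AI governance actions."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _hash(body: dict) -> str:
        # Serialize deterministically so the same content always hashes the same way.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, actor: str, action: str, details: dict) -> dict:
        # Link each new entry to the hash of the entry before it.
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"actor": actor, "action": action, "details": details, "prev_hash": prev_hash}
        entry = dict(body, hash=self._hash(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check the chain links; any edit breaks the chain.
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "details", "prev_hash")}
            if body["prev_hash"] != prev_hash or self._hash(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

For example, recording `log.record("alice", "approve_model", {"model": "credit-v2"})` and then a deployment entry leaves `log.verify()` returning `True`. Altering the first entry's details afterwards makes it return `False`.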

Bias Assessment

Responsible AI governance integrates comprehensive bias assessment frameworks to ensure fair, transparent, and accountable AI deployment across sectors, with an emphasis on compliance with legal standards and societal norms. AI ethics evaluation centers on identifying, understanding, and mitigating bias. It scrutinizes data sources, model training processes, and outcome impacts to uphold principles of justice and human dignity.
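One way to scrutinize outcome impacts is to compare how often a model makes a positive decision for each group. The sketch below computes two widely used group-fairness measures from predictions and group labels. The first is the demographic parity difference, the gap between the highest and lowest per-group selection rates. The second is the disparate impact ratio, the lowest rate divided by the highest. The function names are illustrative and are not taken from any particular fairness library. The sketch also assumes binary predictions, where `1` means a favorable outcome.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of favorable (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))
```

In this toy data, group `a` is selected 75% of the time and group `b` 25% of the time. The parity difference is therefore 0.5 and the ratio about 0.33. A commonly cited rule of thumb treats a ratio below 0.8 as a signal for closer review. A metric like this flags a disparity but does not explain its cause, so it complements, rather than replaces, the review of data sources and training processes described above.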

Compliance Framework

Responsible AI governance emphasizes comprehensive compliance frameworks that ensure AI systems adhere to legal, regulatory, and organizational standards. AI ethics evaluation prioritizes assessing the moral implications and societal impact of AI technologies, using frameworks that guide ethical decision-making and stakeholder accountability. Combining compliance frameworks with ethical evaluations produces more robust AI governance models.
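A compliance framework can treat an ethics evaluation as one required control among several. The following is a minimal sketch of that idea, assuming a checklist-style gate in which a model may be deployed only once every required control is recorded as complete. The control names, `ModelRecord`, and the helper functions are hypothetical illustrations, not the requirements of any specific regulation or standard.

```python
from dataclasses import dataclass

# Hypothetical controls; a real framework would derive these from applicable law and policy.
REQUIRED_CONTROLS = {
    "risk_assessment": "Documented risk assessment before deployment",
    "bias_evaluation": "Ethics and bias evaluation completed and reviewed",
    "human_oversight": "Named owner accountable for the system",
    "monitoring_plan": "Post-deployment monitoring in place",
}


@dataclass
class ModelRecord:
    name: str
    completed_controls: set[str]


def compliance_gaps(record: ModelRecord) -> list[str]:
    """Return the required controls the model has not yet completed, sorted by name."""
    return sorted(c for c in REQUIRED_CONTROLS if c not in record.completed_controls)


def is_deployable(record: ModelRecord) -> bool:
    """A model passes the gate only when no required control is missing."""
    return not compliance_gaps(record)


draft = ModelRecord("credit-v2", {"risk_assessment", "bias_evaluation"})
for control in compliance_gaps(draft):
    print(f"{draft.name}: missing {control} ({REQUIRED_CONTROLS[control]})")
```

Keeping the ethics evaluation inside the same checklist as legal and operational controls is one way to make it a formal deployment requirement rather than an optional review.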


dowidth.com
