Consulting in AI ethics evaluation assesses the moral implications, fairness, and societal impact of artificial intelligence systems to support responsible deployment. Trustworthiness analysis examines the reliability, security, and transparency of AI models, with the aim of building user confidence and supporting regulatory compliance. Consulting engagements often draw on both, linking ethical principles to evidence that a system can be trusted in practice.

Why it is important

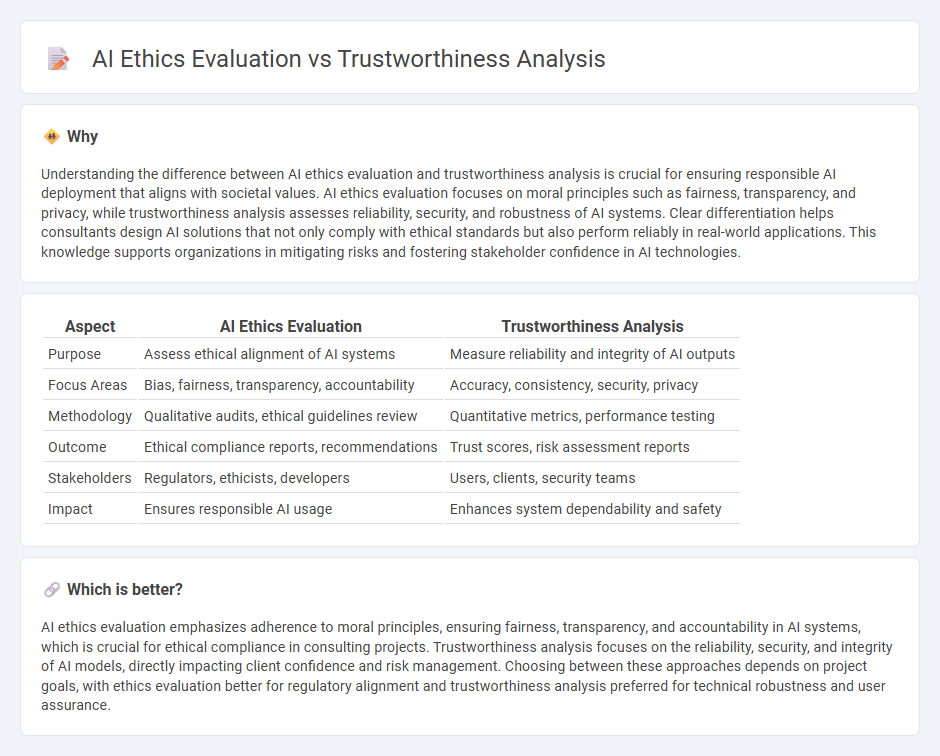

Understanding the difference between AI ethics evaluation and trustworthiness analysis is crucial for ensuring responsible AI deployment that aligns with societal values. AI ethics evaluation focuses on moral principles such as fairness, transparency, and privacy, while trustworthiness analysis assesses reliability, security, and robustness of AI systems. Clear differentiation helps consultants design AI solutions that not only comply with ethical standards but also perform reliably in real-world applications. This knowledge supports organizations in mitigating risks and fostering stakeholder confidence in AI technologies.

Comparison Table

| Aspect | AI Ethics Evaluation | Trustworthiness Analysis |
|---|---|---|
| Purpose | Assess ethical alignment of AI systems | Measure reliability and integrity of AI outputs |
| Focus Areas | Bias, fairness, transparency, accountability | Accuracy, consistency, security, privacy |
| Methodology | Qualitative audits, ethical guidelines review | Quantitative metrics, performance testing |
| Outcome | Ethical compliance reports, recommendations | Trust scores, risk assessment reports |
| Stakeholders | Regulators, ethicists, developers | Users, clients, security teams |
| Impact | Ensures responsible AI usage | Enhances system dependability and safety |
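The table lists "trust scores" as an outcome of trustworthiness analysis but does not say how one is computed. As a minimal sketch only, one simple option is a weighted average over the four focus areas named in the table. The function name `trust_score`, the metric values, and the equal weights are all hypothetical; a real engagement would need agreed definitions and measurement methods for each dimension.

```python
def trust_score(metrics, weights):
    """Weighted average of metrics that are already scaled to [0, 1].

    Both arguments are dicts keyed by dimension name. This is an
    illustrative aggregation, not a standard or source-defined formula.
    """
    if set(metrics) != set(weights):
        raise ValueError("metrics and weights must cover the same dimensions")
    for name, value in metrics.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(metrics[k] * weights[k] for k in metrics) / total_weight


# Hypothetical, made-up measurements for the table's four focus areas.
metrics = {"accuracy": 0.9, "consistency": 0.8, "security": 0.7, "privacy": 0.6}
# Equal weights are an assumption; clients may prioritise differently.
weights = {"accuracy": 1, "consistency": 1, "security": 1, "privacy": 1}

score = trust_score(metrics, weights)
print(f"trust score: {score:.2f}")
```

A single aggregate hides which dimension is weak, so a score like this is usually more useful when reported alongside the per-dimension values.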

Which is better?

AI ethics evaluation emphasizes adherence to moral principles, ensuring fairness, transparency, and accountability in AI systems, which is crucial for ethical compliance in consulting projects. Trustworthiness analysis focuses on the reliability, security, and integrity of AI models, directly impacting client confidence and risk management. Choosing between these approaches depends on project goals, with ethics evaluation better for regulatory alignment and trustworthiness analysis preferred for technical robustness and user assurance.

Connection

AI ethics evaluation and trustworthiness analysis are interconnected through their focus on ensuring responsible AI deployment by assessing fairness, transparency, and accountability. Effective consulting in these areas involves analyzing AI systems for bias mitigation, compliance with ethical standards, and reliability in decision-making processes. This synergy supports organizations in building trustworthy AI solutions that align with societal values and regulatory requirements.

Key Terms

Transparency

Trustworthiness analysis emphasizes transparent data usage and clear algorithmic decision-making processes to build user confidence in AI systems. AI ethics evaluation examines transparency by assessing how open AI operations are and how accessible information is to stakeholders, so that AI deployment remains accountable and fair.

Bias detection

Trustworthiness analysis emphasizes the systematic identification and mitigation of biases in AI models to ensure fairness and reliability across diverse datasets and user groups. AI ethics evaluation incorporates bias detection as a core component, assessing the moral implications and societal impact of biased outcomes within AI systems.
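One widely used quantitative check for group-level bias is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below is illustrative only; the function names and the toy data are hypothetical, and a real audit would combine several metrics with qualitative review of context.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate.

    0.0 means every group receives positive predictions at the same rate.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary predictions and group labels (made up for illustration).
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A large gap flags a disparity worth investigating; whether it counts as unfair depends on the use case, which is where the ethics evaluation side of the work comes in.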

Accountability

Trustworthiness analysis treats accountability as traceability: AI systems should perform as intended, and any failure should be traceable to the responsible parties. AI ethics evaluation centers on the moral implications of AI deployment, prioritizing fairness, human rights, and societal impact in decision-making processes, and asks who answers for those outcomes.

