Consulting services in AI ethics evaluation assess whether artificial intelligence systems operate fairly and transparently, and they address bias, societal impact, and regulatory compliance. Model explainability assessment examines how an AI model reaches its decisions so that automated outputs can be trusted and those responsible can be held accountable. The sections below compare the two and show how to balance ethical considerations with model transparency in AI initiatives.

Why it is important

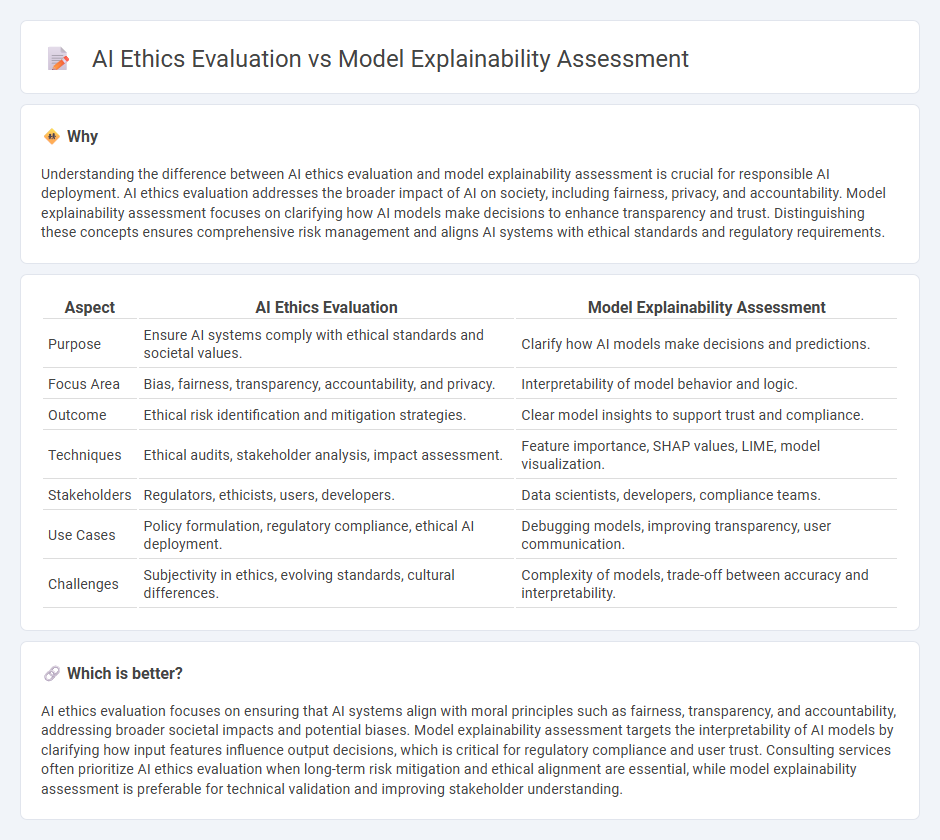

Understanding the difference between AI ethics evaluation and model explainability assessment is crucial for responsible AI deployment. AI ethics evaluation addresses the broader impact of AI on society, including fairness, privacy, and accountability. Model explainability assessment focuses on clarifying how AI models make decisions to enhance transparency and trust. Distinguishing these concepts ensures comprehensive risk management and aligns AI systems with ethical standards and regulatory requirements.

Comparison Table

| Aspect | AI Ethics Evaluation | Model Explainability Assessment |
|---|---|---|
| Purpose | Ensure AI systems comply with ethical standards and societal values. | Clarify how AI models make decisions and predictions. |
| Focus Area | Bias, fairness, transparency, accountability, and privacy. | Interpretability of model behavior and logic. |
| Outcome | Ethical risk identification and mitigation strategies. | Clear model insights to support trust and compliance. |
| Techniques | Ethical audits, stakeholder analysis, impact assessment. | Feature importance, SHAP values, LIME, model visualization. |
| Stakeholders | Regulators, ethicists, users, developers. | Data scientists, developers, compliance teams. |
| Use Cases | Policy formulation, regulatory compliance, ethical AI deployment. | Debugging models, improving transparency, user communication. |
| Challenges | Subjectivity in ethics, evolving standards, cultural differences. | Complexity of models, trade-off between accuracy and interpretability. |
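
To give a feel for the "feature importance" technique listed in the table, here is a minimal sketch of permutation importance, one common model-agnostic way to estimate it. This is an illustration under stated assumptions, not a production tool: the `toy_model`, the feature layout (income first, an unused flag second), and the data are all hypothetical, and the sketch uses only the Python standard library rather than any specific explainability package.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            # Shuffle column j only, leaving every other feature untouched.
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical model: approves when feature 0 (income) exceeds 50, ignores feature 1.
def toy_model(row):
    return 1 if row[0] > 50 else 0

# Hypothetical data; labels are taken from the model so baseline accuracy is 1.0.
rows = [[30, 1], [60, 0], [45, 1], [80, 0], [55, 1], [20, 0], [70, 1], [40, 0]]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)  # feature 1 scores exactly 0.0 because the model never reads it
```

A feature the model ignores scores zero, while a feature the model depends on scores above zero. In an assessment, a high score on a sensitive attribute (or a proxy for one) would be a signal to hand over to the ethics evaluation.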

Which is better?

Neither approach is better in general; they answer different questions. AI ethics evaluation checks whether AI systems align with moral principles such as fairness, transparency, and accountability, and it addresses broader societal impacts and potential biases. Model explainability assessment targets the interpretability of AI models by clarifying how input features influence output decisions, which supports regulatory compliance and user trust. Consulting services often prioritize AI ethics evaluation when long-term risk mitigation and ethical alignment are essential, and model explainability assessment when the goal is technical validation or better stakeholder understanding.
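
How input features influence an output is easiest to see in a linear model, where each feature's contribution to one prediction can be read off directly. The sketch below assumes a hypothetical scoring model with made-up weights, a made-up baseline (for example, an average applicant), and a made-up applicant; for non-linear models, techniques such as SHAP or LIME play the equivalent role.

```python
def predict(weights, bias, instance):
    """Score of a linear model: bias plus the weighted sum of the features."""
    return bias + sum(weights[name] * instance[name] for name in weights)

def linear_contributions(weights, baseline, instance):
    """Per-feature contribution w_i * (x_i - baseline_i) relative to a baseline."""
    return {name: weights[name] * (instance[name] - baseline[name]) for name in weights}

# All values below are hypothetical and chosen only for illustration.
weights = {"income": 0.5, "debt": -2.0, "tenure": 1.0}
bias = 10.0
baseline = {"income": 40.0, "debt": 5.0, "tenure": 3.0}
applicant = {"income": 60.0, "debt": 8.0, "tenure": 1.0}

contributions = linear_contributions(weights, baseline, applicant)
print(contributions)  # {'income': 10.0, 'debt': -6.0, 'tenure': -2.0}

# The contributions add up to the gap between the applicant's score and the baseline score.
gap = predict(weights, bias, applicant) - predict(weights, bias, baseline)
print(gap)  # 2.0
```

Because the contributions sum exactly to the score difference, this kind of breakdown can be shown to a stakeholder as a complete account of one decision.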

Connection

AI ethics evaluation and model explainability assessment are connected through their shared focus on transparency and accountability in artificial intelligence systems. Effective model explainability supports ethical AI practice by making decision processes interpretable, which helps teams identify and mitigate biases and check adherence to ethical standards. Used together, the two strengthen trustworthiness and support regulatory compliance in AI consulting projects.

Key Terms

Transparency

Model explainability assessment improves transparency by showing how an AI system produces its outputs, so users can follow the decision process and spot potential biases. AI ethics evaluation includes transparency as one requirement alongside fairness, accountability, and privacy. Transparency is therefore the point where technical and ethical AI considerations meet.

Fairness

Model explainability assessment supports fairness indirectly: by showing which inputs drive a decision, it helps teams detect and mitigate biases. AI ethics evaluation treats fairness as a primary goal, together with accountability and social impact, and checks that AI systems align with ethical standards and do not perpetuate discrimination. Integrating both approaches promotes equitable and responsible AI deployment.
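
One way a fairness check can be made measurable is demographic parity difference: the gap between the highest and lowest rate of positive decisions across groups. The sketch below is a minimal illustration with hypothetical predictions and group labels; it is one of several fairness metrics, and which metric is appropriate depends on the use case and is itself an ethical judgment.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model decisions (1 = approved) and group membership for eight people.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(predictions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(predictions, groups))  # 0.5
```

A large gap does not by itself prove discrimination, but it flags where an explainability analysis should look for the features driving the difference.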

Accountability

Model explainability assessment supports accountability by making automated decisions traceable to the factors that produced them. AI ethics evaluation covers the broader obligations, including fairness, bias mitigation, and responsible use, and it addresses ethical responsibilities and societal impacts directly. Integrating the two strengthens accountability frameworks and promotes trust in AI technologies.


dowidth.com
