In AI consulting, ethics evaluation focuses on ensuring that AI systems align with moral principles such as transparency and fairness, so that they avoid bias and uphold human rights. AI risk assessment, by contrast, identifies and mitigates the technical and operational hazards that could disrupt system performance or cause harm. Combining the two gives organizations a more complete basis for responsible AI deployment.

Why it is important

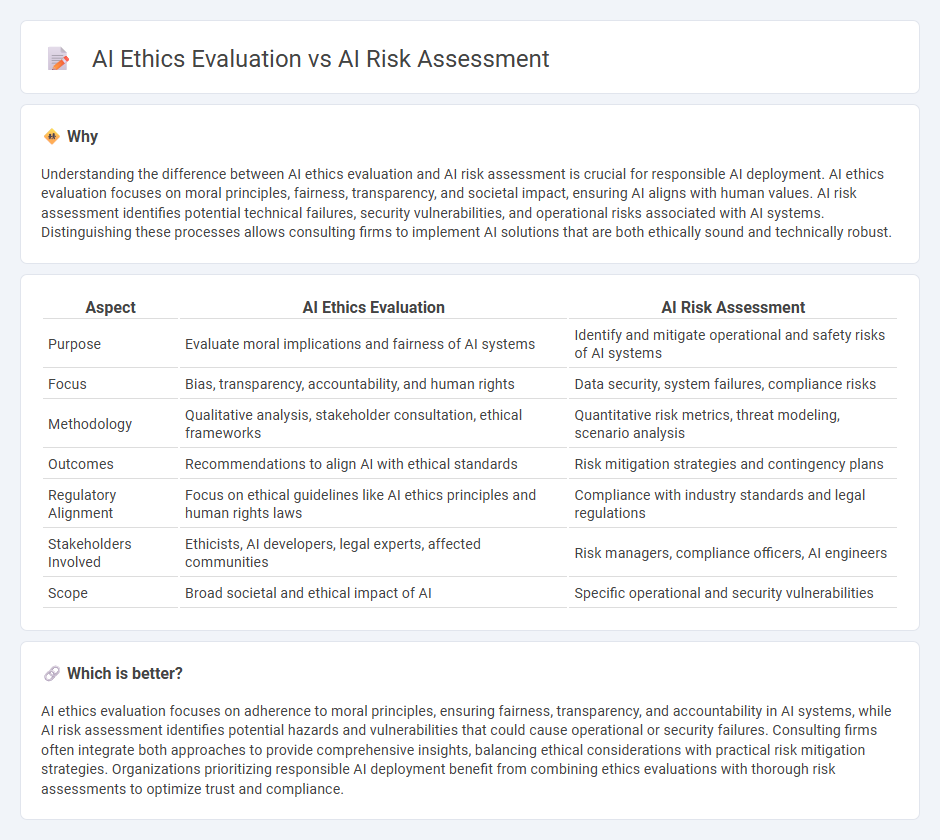

Understanding the difference between AI ethics evaluation and AI risk assessment is crucial for responsible AI deployment. AI ethics evaluation examines moral principles, fairness, transparency, and societal impact to ensure that AI aligns with human values. AI risk assessment identifies potential technical failures, security vulnerabilities, and operational risks. For example, an ethics evaluation of a loan-approval model asks whether it treats applicant groups fairly, while a risk assessment asks how likely the model is to fail, be attacked, or breach regulation, and what that would cost. Keeping the two processes distinct lets consulting firms deliver AI solutions that are both ethically sound and technically robust.

Comparison Table

| Aspect | AI Ethics Evaluation | AI Risk Assessment |
|---|---|---|
| Purpose | Evaluate the moral implications and fairness of AI systems | Identify and mitigate operational and safety risks of AI systems |
| Focus | Bias, transparency, accountability, and human rights | Data security, system failures, and compliance risks |
| Methodology | Qualitative analysis, stakeholder consultation, ethical frameworks | Quantitative risk metrics, threat modeling, scenario analysis |
| Outcomes | Recommendations for aligning AI with ethical standards | Risk mitigation strategies and contingency plans |
| Regulatory Alignment | Ethical guidelines, such as published AI ethics principles, and human rights law | Compliance with industry standards and legal regulations |
| Stakeholders Involved | Ethicists, AI developers, legal experts, affected communities | Risk managers, compliance officers, AI engineers |
| Scope | Broad societal and ethical impact of AI | Specific operational and security vulnerabilities |
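
The Methodology row notes that risk assessment leans on quantitative metrics and scenario analysis. One widely used quantitative metric from security risk analysis is annualized loss expectancy (ALE = single-loss expectancy × annual rate of occurrence). The Python sketch below applies it to a few AI failure scenarios; the `Scenario` class, the scenario names, and all figures are illustrative assumptions, not data from any real assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A hypothetical AI failure scenario with quantitative loss estimates."""
    name: str
    single_loss: float   # SLE: estimated cost of one occurrence (assumed figure)
    annual_rate: float   # ARO: expected occurrences per year (assumed figure)

    @property
    def annual_loss(self) -> float:
        # Annualized loss expectancy: ALE = SLE x ARO
        return self.single_loss * self.annual_rate


def rank_by_annual_loss(scenarios: list[Scenario]) -> list[tuple[str, float]]:
    """Return (name, ALE) pairs sorted from highest to lowest expected loss."""
    ranked = [(s.name, s.annual_loss) for s in scenarios]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Illustrative figures only; real estimates come from incident data and experts.
    scenarios = [
        Scenario("training-data leak", single_loss=200_000, annual_rate=0.05),
        Scenario("model outage", single_loss=10_000, annual_rate=4),
        Scenario("prompt-injection misuse", single_loss=50_000, annual_rate=0.5),
    ]
    for name, ale in rank_by_annual_loss(scenarios):
        print(f"{name}: {ale:,.0f} per year")
```

With these assumed figures the frequent but cheap outage ranks first (40,000 per year), ahead of prompt-injection misuse (25,000) and the rare but costly data leak (10,000). A ranking like this is one input to scenario analysis, not a substitute for judgment.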

Which is better?

Neither approach is better in isolation; they answer different questions. AI ethics evaluation checks adherence to moral principles such as fairness, transparency, and accountability, while AI risk assessment identifies the hazards and vulnerabilities that could cause operational or security failures. Consulting firms therefore often integrate both, balancing ethical considerations with practical risk mitigation. Organizations that prioritize responsible AI deployment benefit from pairing ethics evaluations with thorough risk assessments to build trust and support compliance.

Connection

AI ethics evaluation and AI risk assessment are interconnected processes that support responsible AI deployment: ethics evaluation identifies potential ethical concerns, and risk assessment estimates the likelihood and impact of those concerns on stakeholders. Ethics evaluation focuses on principles such as fairness, transparency, and accountability. Integrating both approaches enables organizations to build AI systems that comply with ethical standards and are also resilient to operational, legal, and reputational risks.
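
To make "likelihood and impact" concrete, here is a minimal sketch of a classic risk matrix: each ethical concern raised by an ethics evaluation gets a likelihood rating and an impact rating on a 1 to 5 scale, and their product places it in a rating band. The scales, the band thresholds (15 and 8), the `Concern` class, and the example concerns are assumptions for illustration; organizations set their own.

```python
from dataclasses import dataclass

# Assumed rating bands on a 1..25 score; organizations define their own cut-offs.
BANDS = ((15, "high"), (8, "medium"), (1, "low"))


@dataclass(frozen=True)
class Concern:
    """An ethical concern from an ethics evaluation, scored as a risk (hypothetical)."""
    description: str
    likelihood: int  # 1 = rare ... 5 = almost certain (assumed scale)
    impact: int      # 1 = negligible ... 5 = severe harm to stakeholders (assumed scale)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1..5 ordinal scales
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Risk-matrix product: likelihood x impact, ranging from 1 to 25
        return self.likelihood * self.impact

    @property
    def rating(self) -> str:
        # First band whose threshold the score meets; every valid score is >= 1
        for threshold, label in BANDS:
            if self.score >= threshold:
                return label
        raise ValueError("score below lowest band")


def prioritize(concerns: list[Concern]) -> list[Concern]:
    """Order the register so the highest-scoring concerns are reviewed first."""
    return sorted(concerns, key=lambda c: c.score, reverse=True)


if __name__ == "__main__":
    register = [
        Concern("Explanations are unclear to applicants", likelihood=4, impact=2),
        Concern("Model disadvantages one applicant group", likelihood=3, impact=5),
        Concern("Outputs reused for an unapproved purpose", likelihood=1, impact=3),
    ]
    for concern in prioritize(register):
        print(f"{concern.score:>2} {concern.rating:<6} {concern.description}")
```

Here the group-disadvantage concern scores 15 ("high") and is reviewed first, the explanation concern scores 8 ("medium"), and the misuse concern scores 3 ("low"). Multiplying ordinal ratings is a convention rather than a precise measurement, so the bands work best as a triage aid for the ethics and risk teams together.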

Key Terms

Bias Detection

AI risk assessment prioritizes identifying potential harm, including detecting algorithmic bias that could produce unfair outcomes for marginalized groups. AI ethics evaluation emphasizes moral principles, scrutinizing bias in data collection, model training, and application to ensure equity and transparency.
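
Bias detection often starts with simple group-level metrics. The sketch below computes per-group selection rates, the demographic parity difference (highest rate minus lowest), and the disparate impact ratio (lowest rate divided by highest). The default 0.8 threshold follows the "four-fifths" rule of thumb used in US employment contexts; treat it as one heuristic, not a universal standard. The function names, group labels, and outcomes are hypothetical example choices.

```python
from collections import defaultdict


def selection_rates(groups: list[str], outcomes: list[int]) -> dict[str, float]:
    """Share of favorable outcomes (1 = favorable, 0 = unfavorable) per group."""
    if not groups or len(groups) != len(outcomes):
        raise ValueError("need non-empty groups and outcomes of equal length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def bias_report(groups: list[str], outcomes: list[int], threshold: float = 0.8) -> dict:
    """Demographic parity difference and disparate impact ratio across groups."""
    rates = selection_rates(groups, outcomes)
    lowest, highest = min(rates.values()), max(rates.values())
    # If no group ever gets a favorable outcome, all rates are equal (zero)
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": ratio,
        "flagged": ratio < threshold,  # four-fifths heuristic by default
    }


if __name__ == "__main__":
    # Made-up example: 10 applicants per group, 1 = approved
    groups = ["A"] * 10 + ["B"] * 10
    outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
    print(bias_report(groups, outcomes))
```

In this example group A's approval rate is 0.6 and group B's is 0.3, giving a parity difference of 0.3 and an impact ratio of 0.5, which falls below 0.8 and is flagged. A flag is a prompt for the ethics evaluation to investigate causes, not proof of unfairness on its own.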

Compliance

AI risk assessment centers on identifying and mitigating threats related to data security, privacy breaches, and algorithmic bias, to ensure adherence to regulations such as the GDPR and the CCPA. AI ethics evaluation emphasizes the moral implications of AI deployment (fairness, transparency, accountability, and societal impact), looking beyond mere legal compliance. Integrating both approaches strengthens responsible AI governance and helps organizations keep pace with evolving compliance requirements.

Accountability

AI risk assessment systematically identifies potential threats and vulnerabilities in AI systems and emphasizes mitigation strategies to prevent harm. AI ethics evaluation centers on moral principles and the societal impact of AI, ensuring fairness, transparency, and respect for human rights. Integrating both approaches strengthens accountability across AI development and deployment.
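
One concrete accountability mechanism is a tamper-evident audit trail of AI decisions and human review actions. The sketch below chains each log entry to the previous one with a SHA-256 hash, so editing, reordering, or deleting any entry before the last one breaks verification. This is an assumed design for illustration: the `AuditLog` class, its field names, and the example actors are hypothetical, and a production system would also need timestamps, access control, durable storage, and an external anchor to detect truncation of the newest entries.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only, hash-chained record of AI decisions and human reviews (hypothetical)."""
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys, fixed separators) so equal content hashes equally
        payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, details: dict) -> dict:
        """Record who did what, linked to the hash of the previous entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        # Copy details so later edits by the caller do not silently alter the log
        snapshot = json.loads(json.dumps(details))
        body = {"actor": actor, "action": action, "details": snapshot, "prev_hash": prev_hash}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; a mismatch means the chain was altered."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("model:credit-v1", "decision", {"case": "example-001", "outcome": "declined"})
    log.append("reviewer:analyst-7", "override", {"case": "example-001", "outcome": "approved"})
    print(log.verify())  # True
    log.entries[0]["details"]["outcome"] = "approved"  # simulate tampering
    print(log.verify())  # False
```

Recording both the model's decision and the human override in one chain makes it possible to reconstruct who was responsible for each outcome, which is the kind of evidence both an ethics evaluation and a risk assessment may ask for.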
